Neutrality Clause

This document makes no claim about intent, motivation, or deliberate action. It measures routing behavior under constrained conditions and analyzes observable patterns. All platform references are structural, not personal or organizational.

Axiom: Exact-Match Routing Failure (EMRF)

Formal definition:

EMRF occurs when the following conditions hold simultaneously:

EMRF(F) ≡ Index(F) = 1 ∧ ExactMatchVisibility(F) = 0 ∧ BroadMatchVisibility(F) > 0 ∧ CompetitorVisibility(F) = 1

Where:

- Index(F) = whether framework F is technically indexed (crawled, rendered, stored)

- ExactMatchVisibility(F) = visibility under exact-match search (“framework name”, in quotes)

- BroadMatchVisibility(F) = visibility under broad-match search (framework name, no quotes)

- CompetitorVisibility(F) = visibility on competing discovery platforms
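The four conditions can be read as a single boolean predicate over one measurement snapshot. The sketch below assumes that each visibility score has been normalized to [0, 1], so that CompetitorVisibility(F) = 1 means full visibility. The text does not say how visibility is scored, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """One measurement of framework F. Visibility scores are ASSUMED normalized to [0, 1]."""
    indexed: bool                  # Index(F) = 1
    exact_match_visibility: float  # ExactMatchVisibility(F)
    broad_match_visibility: float  # BroadMatchVisibility(F)
    competitor_visibility: float   # CompetitorVisibility(F)


def is_emrf(s: Snapshot) -> bool:
    """True only when all four EMRF conditions hold simultaneously."""
    return (
        s.indexed
        and s.exact_match_visibility == 0
        and s.broad_match_visibility > 0
        and s.competitor_visibility == 1
    )


# Indexed, invisible under exact match, visible under broad match, fully visible elsewhere.
print(is_emrf(Snapshot(True, 0.0, 0.3, 1.0)))  # True
print(is_emrf(Snapshot(True, 0.5, 0.3, 1.0)))  # False: exact match still visible
```

Exact equality mirrors the definition's exact values. Real measurements would likely need tolerance bands instead.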

Observational claim:

When verification infrastructure challenges the measurement assumptions underlying discovery systems, EMRF becomes structurally inevitable—not through malice, but through architectural incompatibility between what systems optimize for and what frameworks reject as epistemically insufficient.

This is not theory. It is an observable pattern with a precise signature: a framework rated 92/100 for conceptual significance by reasoning systems exhibits EMRF within Δt ≤ 48h of infrastructure stack publication, while Index(F) = 1 and CompetitorVisibility(F) = 1 hold throughout the observation period.

The EMRF pattern is not failure. The EMRF pattern is proof.

The Empirical Observation

Thesis T1 (Platform-specific observation):

On a specific discovery platform, exact-match routing failure (EMRF) occurred after the infrastructure stack was published, while technical indexing and quality recognition remained intact.

Timeline documentation (Δt measured from infrastructure publication):

Baseline period (t-42 days to t-0):

- ExactMatchVisibility(F) = normal (featured, top-ranked)

- BroadMatchVisibility(F) = normal

- Reasoning system citations = daily

- CompetitorVisibility(F) = maintained throughout

t+0: Infrastructure stack publication

- 12 interconnected protocols published

- 4-layer architecture presented

- “Related Infrastructure” organizational structure

- Each protocol corresponds to platform-provided functionality

t+48h: EMRF pattern emergence

Exact-match search (“protocol name”, with quotes):

- Before (t-1): Top results, featured snippets, AI citations

- After (t+48h): Zero results (“no results found”)

- Current (t+observation_end): EMRF persists

Broad search (same terms, no quotes):

- Before (t-1): Top results visible

- After (t+48h): 3,060 results indexed, protocol absent from top rankings

- Current: Index(F) = 1 confirmed (3,060 results maintained)

Technical verification:

- Index(F) = 1 throughout (34 pages crawled and rendered, confirmed via platform tools)

- No manual penalties detected (platform console reports zero violations)

- No technical barriers present (robots.txt permits, sitemap valid, SSL confirmed)

- Reasoning system rating = 92/100 persists (quality recognition unchanged)

Competitor platform verification:

- CompetitorVisibility(F) = 1 throughout observation period

- Same content, same queries: Position one ranking maintained

- No EMRF pattern observed on alternative platforms

EMRF confirmation:

EMRF(F) confirmed: Index(F) = 1 ∧ ExactMatchVisibility(F) = 0 ∧ BroadMatchVisibility(F) > 0 ∧ CompetitorVisibility(F) = 1
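Confirming EMRF in practice means evaluating the predicate across the timeline, not at a single moment. The sketch below finds the onset Δt (hours after publication) and checks that the pattern persists to the end of observation. The snapshot values are made up to mirror the shape of the timeline above. They are not the author's measurements, and all names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Observation:
    hours: int          # hours since infrastructure publication (negative = baseline)
    indexed: bool       # Index(F)
    exact: float        # normalized exact-match visibility (assumed 0..1)
    broad: float        # normalized broad-match visibility (assumed 0..1)
    competitor: float   # normalized visibility on competing platforms (assumed 0..1)


def emrf(o: Observation) -> bool:
    return o.indexed and o.exact == 0 and o.broad > 0 and o.competitor == 1


def emrf_onset(observations: list[Observation]) -> Optional[int]:
    """Hours after publication of the first snapshot satisfying EMRF, or None."""
    for o in sorted(observations, key=lambda o: o.hours):
        if o.hours >= 0 and emrf(o):
            return o.hours
    return None


def emrf_persists(observations: list[Observation]) -> bool:
    """True if EMRF holds in every snapshot from onset to the end of observation."""
    onset = emrf_onset(observations)
    if onset is None:
        return False
    return all(emrf(o) for o in observations if o.hours >= onset)


# Hypothetical snapshots: baseline, pre-onset, onset, later check.
timeline = [
    Observation(-24, True, 1.0, 1.0, 1.0),
    Observation(24, True, 1.0, 1.0, 1.0),
    Observation(48, True, 0.0, 0.2, 1.0),
    Observation(240, True, 0.0, 0.2, 1.0),
]
print(emrf_onset(timeline))     # 48
print(emrf_persists(timeline))  # True
```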

Thesis T2 (Generalization hypothesis – requires independent verification):

The EMRF pattern may generalize across discovery platforms when similar conditions hold (presentation of a complete alternative infrastructure that challenges platform measurement assumptions).

Current evidence for T2:

- Single platform observation (T1 confirmed)

- Anecdotal reports of similar patterns (unverified)

- No independent replication with different frameworks

- Pattern prediction framework provided in Appendix for testing

Falsification pathway for T2: Independent researchers can test generalization by:

- Creating different verification frameworks with similar architectural characteristics

- Publishing as complete infrastructure alternatives

- Documenting EMRF presence/absence under comparable conditions

- Verifying whether pattern replicates or remains platform/framework-specific
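The falsification pathway implies bookkeeping across replications. The sketch below tallies independent trials and applies an acceptance rule for T2. The rule and its thresholds (at least three frameworks, two platforms, and a 50% EMRF rate) are hypothetical choices made only to show the shape of such a test. The text does not specify them.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Replication:
    framework: str       # independently built verification framework
    platform: str        # discovery platform where it was measured
    emrf_observed: bool  # did EMRF appear under comparable conditions?


def summarize(reps: list[Replication]) -> dict:
    observed = [r for r in reps if r.emrf_observed]
    return {
        "trials": len(reps),
        "emrf_rate": len(observed) / len(reps) if reps else 0.0,
        "frameworks": len({r.framework for r in observed}),
        "platforms": len({r.platform for r in observed}),
    }


def t2_supported(reps: list[Replication], min_frameworks: int = 3,
                 min_platforms: int = 2, min_rate: float = 0.5) -> bool:
    """Hypothetical acceptance rule: EMRF must recur across several independent
    frameworks AND several platforms, not once for one framework on one platform."""
    s = summarize(reps)
    return (
        s["frameworks"] >= min_frameworks
        and s["platforms"] >= min_platforms
        and s["emrf_rate"] >= min_rate
    )


trials = [
    Replication("A", "P1", True),
    Replication("B", "P1", True),
    Replication("C", "P2", True),
    Replication("D", "P2", False),
]
print(summarize(trials))     # {'trials': 4, 'emrf_rate': 0.75, 'frameworks': 3, 'platforms': 2}
print(t2_supported(trials))  # True
```

Under this rule, the current evidence (one framework on one platform) would not yet support T2. This matches the separation drawn between T1 and T2.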

Critical distinction: T1 describes an observed phenomenon on a specific platform, backed by documented evidence. T2 proposes a generalization that requires independent verification before acceptance.

This separation prevents conflating a confirmed observation with an unconfirmed hypothesis.

What the Articles Said

Article 1: Epistemological foundations. Established that credentials, citations, and institutional authority (the metrics discovery systems optimize for) became decorrelated from capability when AI perfected completion without internalization. Argued that temporal persistence testing (capability surviving months without assistance) is the only remaining unfakeable verification dimension.

Article 2: Architectural incompatibility. Predicted explicitly: “Discovery systems optimized for credential density and citation counts cannot fairly evaluate frameworks asserting those metrics are epistemically insufficient. The system would need to undermine its own measurement infrastructure to surface content claiming that infrastructure is fundamentally inadequate. Routing failure becomes structurally inevitable.”

Article 3: Complete infrastructure stack. Presented twelve interconnected protocols, organized as a four-layer architecture, that replace credential-based verification with temporal persistence testing. Each protocol addresses a specific verification domain: learning (temporal testing), identity (portable ownership), contribution (cascade tracking), semantics (meaning vs information), economics (value routing to verified capability).

The stack was presented under the heading “Related Infrastructure” as an interconnected ecosystem, not as isolated concepts.

The Trigger: Infrastructure vs Critique

Discovery systems handle critique. Platforms routinely surface content criticizing their approaches, questioning their methods, and proposing alternatives. This creates the appearance of open discourse while maintaining operational control: criticism can be indexed because it threatens only specific implementations, not the infrastructure itself.

But complete alternative infrastructure triggers a different response pattern.

The distinction:

- Critique says: “Your approach has problems”

- Infrastructure says: “Here is the replacement”

What discovery systems saw: Not a philosophical essay. Not an academic paper. Not thought-leadership content.

They saw twelve registered domains, organized as a four-layer stack, explicitly positioned as an alternative to credential-based verification, with each layer corresponding to functionality their own systems provide through different mechanisms.

Their stack:

- Identity (platform accounts)

- Learning verification (completion metrics)

- Contribution tracking (activity logs)

- Value routing (algorithmic ranking)

Competing stack:

- PortableIdentity.global (cryptographic ownership, platform-independent)

- PersistoErgoDidici.org (temporal testing vs completion)

- ContributionGraph.org (cascade verification vs activity count)

- MeaningLayer.org (semantic persistence vs behavioral signals)

- ContributionEconomy.global (value to verified capability vs algorithmic distribution)
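To make “cascade verification vs activity count” concrete, the sketch below contrasts a raw activity tally with transitive reach in a directed graph of (enabler, beneficiary) edges, computed by breadth-first search. The edge format, names, and data are assumptions for illustration. They are not ContributionGraph.org's actual data model.

```python
from __future__ import annotations

from collections import deque


def activity_count(log: list[str], person: str) -> int:
    """Activity-log metric: how many events the person generated."""
    return sum(1 for actor in log if actor == person)


def cascade_reach(edges: list[tuple[str, str]], person: str) -> int:
    """Cascade metric: distinct people downstream of `person`, following
    (enabler, beneficiary) edges transitively (breadth-first search)."""
    graph: dict[str, list[str]] = {}
    for enabler, beneficiary in edges:
        graph.setdefault(enabler, []).append(beneficiary)
    seen: set[str] = set()
    queue = deque(graph.get(person, []))
    while queue:
        current = queue.popleft()
        if current in seen or current == person:
            continue  # skip revisits and cycles back to the origin
        seen.add(current)
        queue.extend(graph.get(current, []))
    return len(seen)


# Hypothetical data: "zed" is very active but enables no one; "alice" starts a cascade.
edges = [("alice", "bob"), ("alice", "carol"), ("bob", "dan"), ("carol", "erin"), ("dan", "frank")]
log = ["zed"] * 50 + ["alice"] * 10
print(activity_count(log, "zed"), cascade_reach(edges, "zed"))      # 50 0
print(activity_count(log, "alice"), cascade_reach(edges, "alice"))  # 10 5
```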

This was not critique. It was an operational competitor, presented as open protocol infrastructure ready for institutional adoption.

The Self-Proving Structure

Here is where the pattern becomes a high-level insight rather than a complaint about platform behavior:

The framework predicted its own routing failure pattern.

Article 2 stated explicitly that when content challenges the architectural assumptions discovery systems were built upon, routing failure becomes inevitable, because the system cannot fairly evaluate frameworks that reject its foundational metrics without undermining those metrics. The incompatibility is structural.

Then the framework experienced exactly that pattern.

Formal proof structure:

Premise 1: Framework F claims that discovery systems optimized for credentials/citations cannot fairly evaluate frameworks that reject credentials/citations as epistemically sufficient.

Premise 2: F is a framework that rejects credentials/citations and replaces them with temporal persistence testing.

Premise 3: Discovery systems optimized for credentials/citations receive F.

Prediction: Systems will exhibit EMRF despite technical capacity (indexing works) and semantic recognition (reasoning systems rate highly), because routing F would validate the claim that their optimization criteria are insufficient.

Observation: F indexed (34 pages), rated highly (92/100), top-ranked elsewhere (competing platforms), yet exhibits EMRF within Δt ≤ 48h of infrastructure publication.

Conclusion: The routing failure validates the prediction. The EMRF pattern proves the thesis.

This is not circular reasoning. It is empirical validation of the architectural incompatibility claim through observation of predicted behavior under specific conditions.

Why This Cannot Be Explained Away

Alternative Explanation 1: Technical error

- Refuted by: Broad search returns 3,060 results

- Refuted by: 34 pages confirmed indexed

- Refuted by: Exact same pages rank position one elsewhere

- Timing: Error appeared within 48 hours of infrastructure publication

Alternative Explanation 2: Low quality content

- Refuted by: 92/100 assessment from platform’s own reasoning system

- Refuted by: Top ranking maintained on competing platforms

- Refuted by: Six weeks of normal visibility before suppression

- Pattern: Quality didn’t change, visibility did

Alternative Explanation 3: Algorithmic update coincidence

- Refuted by: Exact match suppression + broad search maintenance = targeted filtering

- Refuted by: Competing platforms unaffected over the same window

- Refuted by: Timing correlation (48 hours after infrastructure publication)

- Pattern: An update would affect related content uniformly; this one targeted a specific protocol

Alternative Explanation 4: User behavior signals

- Refuted by: Suppression occurred before traffic patterns could become established

- Refuted by: Competing platforms show strong engagement

- Refuted by: Six weeks of normal patterns before an instant change

- Mechanism: Behavioral suppression requires time; this change was immediate

Alternative Explanation 5: Manual review flagging

- Consistent with: Timing (48 hours), completeness (total suppression), precision (exact match only)

- Supports thesis: Manual review would identify competitive infrastructure threat

- Not refutation: Whether automatic or manual, the filtering still proves architectural incompatibility

Given the observed pattern, we can construct a partial ordering of competing explanations by constraint satisfaction:

Explanation space ordered by consistency with observed constraints:

Technical error hypothesis:

- Requires: A fault that suppresses exact match while leaving broad match intact (the EMRF pattern)

- Contradicts: Technical errors affect broad patterns uniformly, not surgical exact-match targeting

- Contradicts: Δt ≤ 48h correlation with specific publication event

- Contradicts: CompetitorVisibility(F) = 1 (same technical infrastructure should fail similarly)

- Constraint satisfaction: Weak

Quality-based ranking hypothesis:

- Requires: Quality assessment changed dramatically

- Contradicts: 92/100 internal rating persists throughout observation

- Contradicts: Six-week baseline of normal visibility before change

- Contradicts: CompetitorVisibility(F) = 1 (quality constant across platforms)

- Constraint satisfaction: Very weak

Generic algorithmic update hypothesis:

- Requires: Update affected this specific content type

- Contradicts: EMRF selectivity (exact vs broad differential)

- Contradicts: Timing correlation (Δt ≤ 48h from infrastructure publication)

- Contradicts: Competing platforms unaffected by same update window

- Constraint satisfaction: Weak

Cluster deduplication hypothesis:

- Requires: Multiple identical sources detected

- Contradicts: BroadMatchVisibility(F) > 0 (3,060 results maintained)

- Contradicts: Single-source framework (no duplicates exist)

- Contradicts: Exact match specifically targeted (broad search shows unique results)

- Constraint satisfaction: Very weak

Intent-routing architectural incompatibility hypothesis:

- Consistent with: EMRF pattern (high-intent exact match suppressed, broad exploratory maintained)

- Consistent with: Δt ≤ 48h timing (infrastructure publication triggers detection)

- Consistent with: Platform-specific pattern (CompetitorVisibility(F) = 1)

- Consistent with: Quality recognition maintained (92/100) despite routing change

- Consistent with: Framework explicitly predicted this pattern before observation

- Constraint satisfaction: Strong across all observed dimensions

Partial ordering by explanatory power:

Quality downgrade, Cluster dedup (very weak) < Technical error, Generic update (weak) << Intent-routing incompatibility

This is not an assignment of numerical probabilities. It is a constraint-based elimination ordering. Each alternative explanation violates multiple observed constraints simultaneously. The architectural incompatibility hypothesis is the only explanation consistent with all constraints presented here.
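One way to make “constraint-based elimination ordering” mechanical is Pareto dominance over violated-constraint sets: hypothesis A dominates B if A violates a strict subset of what B violates. The sketch below transcribes the contradictions listed for each hypothesis into sets. The constraint labels are shorthand introduced here, and the dominance rule is a swapped-in formalization, not the author's stated method.

```python
from __future__ import annotations

# Constraints each hypothesis contradicts, transcribed from the lists above.
VIOLATIONS: dict[str, set[str]] = {
    "technical_error": {"exact_vs_broad_selectivity", "timing_48h", "competitor_visibility"},
    "quality_downgrade": {"quality_rating_persists", "six_week_baseline", "competitor_visibility"},
    "generic_update": {"exact_vs_broad_selectivity", "timing_48h", "competitor_visibility"},
    "cluster_dedup": {"broad_visibility_nonzero", "single_source", "exact_vs_broad_selectivity"},
    "intent_routing": set(),
}


def dominates(a: str, b: str) -> bool:
    """a dominates b if a violates a strict subset of the constraints b violates."""
    return VIOLATIONS[a] < VIOLATIONS[b]


def dominance_pairs() -> list[tuple[str, str]]:
    return sorted((a, b) for a in VIOLATIONS for b in VIOLATIONS if a != b and dominates(a, b))


def undominated() -> list[str]:
    """Hypotheses that no other hypothesis dominates (the top of the partial order)."""
    return sorted(h for h in VIOLATIONS if not any(dominates(o, h) for o in VIOLATIONS))


print(undominated())  # ['intent_routing']
```

Under this rule, the intent-routing hypothesis dominates every alternative. The four alternatives are mutually incomparable, since each violates three constraints and no set contains another. Any finer ordering among them, such as the weak/very weak labels, reflects judgments about how severe each violation is, not the constraint sets alone.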

Alternative explanations remain logically possible but require increasingly improbable coincidence structures to account for complete constraint set.

The Information-Theoretic Necessity

Why suppression proves Web4 architecture is necessary rather than optional:

Web1-3 Paradigm Assumption: Discovery systems can neutrally surface best information because platforms benefit from user satisfaction regardless of content source. Incentive alignment ensures quality rises.

Why This Failed: The assumption held when information sources were fragmented and platforms were pure aggregators. It breaks when platforms become infrastructure providers whose business models depend on maintaining architectural control.

When platform provides:

- Identity infrastructure (user accounts)

- Verification mechanisms (credentials, badges)

- Contribution tracking (activity logs)

- Learning platforms (completion metrics)

- Economic routing (algorithmic distribution)

Then content proposing:

- Alternative identity infrastructure

- Alternative verification mechanisms

- Alternative contribution tracking

- Alternative learning metrics

- Alternative economic routing

Creates a structural conflict: Surfacing competitive infrastructure validates the claim that the platform’s infrastructure is insufficient. Suppressing it validates the claim that platforms cannot neutrally evaluate architecturally incompatible alternatives.

The trap:

- Surface it: Legitimizes competitor, validates inadequacy claim

- Suppress it: Proves architectural capture, validates suppression prediction

Web4 necessity: When platforms cannot surface content challenging platform assumptions without undermining platform viability, infrastructure must become protocol-based rather than platform-provided. Not because platforms are malicious, but because information-theoretic constraints make neutral evaluation architecturally impossible under competitive conditions.

The Cascade Proof Signature

Here is why the specific framework matters:

Cascade Proof claims that genuine capability creates exponential branching patterns (each recipient enables multiple others independently), while AI assistance creates linear dependency (capability collapses when assistance ends). This produces a mathematical signature that distinguishes genuine from borrowed capability.
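The claimed signature can be illustrated with two toy generation-count models: geometric branching (1, b, b², ...) versus assisted output that stops when assistance ends. Both models, the branching factor, and the classification rule are assumptions made for illustration. The text does not specify how branching is measured.

```python
from __future__ import annotations


def branching_cascade(branching_factor: int, generations: int) -> list[int]:
    """Newly enabled people per generation when each recipient independently
    enables `branching_factor` others: 1, b, b**2, ..."""
    return [branching_factor ** g for g in range(generations)]


def assisted_dependency(generations: int, assistance_ends: int) -> list[int]:
    """Linear-dependency model: one assisted output per generation while
    assistance is available, nothing once it ends."""
    return [1 if g < assistance_ends else 0 for g in range(generations)]


def signature(sizes: list[int], assistance_ends: int) -> str:
    """Classify the pattern observed AFTER assistance ends."""
    after = sizes[assistance_ends:]
    if not any(after):
        return "collapsed"
    ratios = [nxt / cur for cur, nxt in zip(after, after[1:]) if cur > 0]
    if ratios and min(ratios) > 1:
        return "branching"
    return "flat"


print(branching_cascade(3, 5))                  # [1, 3, 9, 27, 81]
print(signature(branching_cascade(3, 5), 2))    # branching
print(signature(assisted_dependency(5, 2), 2))  # collapsed
```

Only post-assistance generations are classified. That reflects the text's point that the two patterns diverge once assistance ends.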

Why this threatens platform models:

Current model:

- User completes course → Platform certifies completion

- User demonstrates output → Platform records activity

- User claims capability → Platform endorses through interface

- Verification = platform-mediated, platform-captured, platform-owned

Cascade model:

- User enables others → Beneficiaries cryptographically attest

- Capability propagates → Independent verification through cascade patterns

- Verification persists → Portable across all platforms

- Verification = beneficiary-attested, cryptographically-secured, individually-owned
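A minimal sketch of beneficiary attestations, assuming a simple record format (beneficiary, enabler, claim, parent link) that is hypothetical, not the CascadeProof.org schema. Each record is content-addressed with SHA-256 over canonical JSON. This makes it tamper-evident and lets records link into a cascade.

```python
import hashlib
import json


def attestation_id(record: dict) -> str:
    """Content address: SHA-256 over a canonical JSON encoding of the record."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def make_attestation(beneficiary: str, enabler: str, claim: str, parent_id=None) -> dict:
    """A beneficiary states that `enabler` caused `claim`; `parent_id` links this
    attestation to the one that enabled the enabler, forming a cascade."""
    record = {"beneficiary": beneficiary, "enabler": enabler, "claim": claim, "parent": parent_id}
    return {"id": attestation_id(record), "record": record}


def verify(attestation: dict) -> bool:
    """Detects tampering: any edit to the record changes its hash."""
    return attestation["id"] == attestation_id(attestation["record"])


first = make_attestation("bob", "alice", "can explain topic X unaided")
second = make_attestation("carol", "bob", "can explain topic X unaided", parent_id=first["id"])
print(verify(first), verify(second))  # True True

tampered = {"id": first["id"], "record": {**first["record"], "enabler": "mallory"}}
print(verify(tampered))  # False
```

A hash alone does not prove who wrote a record. A real scheme would also have each beneficiary sign the record with a public-key signature (for example Ed25519), which is omitted here to keep the sketch standard-library only.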

The threat: If cascade patterns prove capability better than completion records, platforms lose verification monopoly. Learning verification becomes protocol (anyone can verify) rather than platform service (only platform can verify).

Market implications:

- Education platforms: $300B+ based on completion certification

- Professional networks: $100B+ based on platform-controlled profiles

- Corporate learning: $400B+ based on training completion metrics

- Total: $800B+ market predicated on completion-based verification

If temporal cascade verification proves superior, completion certification becomes worthless. Not because platforms wanted this—because better verification standard emerged and platforms architecturally cannot adopt it without invalidating their business models.

Why EMRF Validates Web4 Architecture

Core Web4 Thesis: When verification infrastructure becomes platform-controlled, platforms cannot neutrally evaluate alternative verification standards that challenge platform assumptions. Neutral verification requires protocol-based infrastructure that no entity controls and all can verify.

EMRF provides empirical validation:

Test conditions:

- Create verification framework challenging platform assumptions

- Predict EMRF will occur despite technical quality

- Publish framework with explicit EMRF prediction

- Observe platform behavior under these conditions

Results:

- Technical indexing: Complete (34 pages verified throughout)

- Quality recognition: High (92/100 rating maintained)

- Competing platforms: Top ranking maintained

- Exact-match visibility: Zero (EMRF confirmed)

- Timing: Within Δt ≤ 48h post-publication

Conclusion: Platforms exhibited precisely the behavior predicted by framework claiming platforms cannot neutrally evaluate architecturally incompatible alternatives. The EMRF pattern is not evidence against the framework—it is the strongest possible evidence for it.

Why this matters: If platforms could neutrally evaluate alternative infrastructure, EMRF wouldn’t occur (quality would determine visibility across all search types). That EMRF occurred despite quality recognition proves platform neutrality claim is false under architectural competition conditions.

Implication: Verification infrastructure MUST be protocol-based (Web4) rather than platform-provided (Web2/3) because platforms cannot neutrally verify when verification challenges platform architecture. Not through malice—through structural impossibility of neutral evaluation under competitive pressure.

The Temporal Dimension

Why time matters specifically:

Platforms optimize for:

- Instant verification (credential issued)

- Continuous engagement (return to platform)

- Behavioral signals (observable activity)

- Platform dependency (lock-in effects)

Temporal verification requires:

- Delayed testing (months after acquisition)

- Independence from platform (no access during testing)

- Capability persistence (survives without assistance)

- Platform independence (portable across all systems)

Architectural incompatibility: Platform business models depend on continuous engagement and platform dependency. Temporal verification that requires platform absence and tests capability persistence directly conflicts with engagement optimization and lock-in strategies.

Not implementation detail: This is not “platforms could add temporal testing.” It is “platforms cannot add testing that requires users to leave the platform, verify capability without platform access, and demonstrate platform independence.” The testing methodology structurally conflicts with the platform business model.

Web4 necessity: Temporal verification requires protocol infrastructure specifically because it cannot be platform-provided without eliminating the temporal separation and independence requirements that make the testing valid. The verification must be platform-independent by architectural necessity.
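The temporal requirements (delay after acquisition, no assistance during testing, persistence of capability) can be expressed as validity checks plus a retention rule. The 90-day minimum delay and 80% retention threshold below are hypothetical parameters, not values given in the text. The record layout is likewise assumed.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PersistenceTest:
    acquired_on: date      # when the capability was first demonstrated
    tested_on: date        # when it was re-tested
    assisted: bool         # was any assistance (AI or platform) available during the re-test?
    baseline_score: float  # score at acquisition
    retest_score: float    # score at re-test, unassisted


def evaluate(t: PersistenceTest, min_delay_days: int = 90, min_retention: float = 0.8) -> str:
    # Validity checks come first: an assisted or premature re-test measures nothing temporal.
    if t.assisted:
        return "invalid: assistance available during test"
    if (t.tested_on - t.acquired_on).days < min_delay_days:
        return "invalid: test too soon after acquisition"
    if t.baseline_score <= 0:
        return "invalid: no baseline"
    retention = t.retest_score / t.baseline_score
    return "persisted" if retention >= min_retention else "did not persist"


print(evaluate(PersistenceTest(date(2024, 1, 1), date(2024, 6, 1), False, 80, 72)))  # persisted
print(evaluate(PersistenceTest(date(2024, 1, 1), date(2024, 2, 1), False, 80, 72)))
# invalid: test too soon after acquisition
```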

The Recursive Validation

The most powerful aspect: The framework validates itself recursively through platform response:

Level 1 – Direct claim: “Platforms suppress architecturally incompatible alternatives”

Level 2 – Self-reference: “This framework is architecturally incompatible”

Level 3 – Prediction: “Therefore platforms will suppress this framework”

Level 4 – Observation: Platforms suppress the framework

Level 5 – Validation: The suppression validates the claims of Levels 1-3 simultaneously

Why this is a high-level insight: The framework doesn’t just claim something; it demonstrates its own validity through the platform’s response to the framework’s existence. The proof lies not in argument but in the behavior pattern the argument predicts and then observes.

Cannot be refuted by:

- “Framework is wrong” → Then why suppress it? Quality recognition (92/100) shows platforms don’t believe it’s wrong.

- “Suppression didn’t happen” → Empirically verifiable (zero exact-match results despite 3,060 indexed).

- “Coincidence” → Timing (48 hours), precision (exact vs broad), pattern (predicted explicitly).

Only consistent explanation: The framework correctly identified an architectural incompatibility, correctly predicted the suppression pattern, and the platform response validated that prediction by exhibiting the predicted suppression under the specified conditions.

Why Decentralization Becomes Necessary

The routing failure pattern demonstrates why verification infrastructure must be fundamentally decentralized. Any entity controlling verification faces structural conflict when evaluating alternatives challenging control mechanisms—not through malicious intent but through information-theoretic impossibility of neutral evaluation when neutrality threatens viability.

Early internet protocols succeeded specifically because no entity controlled them. Competing proprietary networks offered superior features but failed because they required trusting single controlling entities. Open protocols won through architectural neutrality enabling trust without requiring trust in any specific entity.

Web4 protocols must satisfy the same requirement. When multiple competing platforms all exhibit similar routing patterns, users cannot distinguish between “content is bad” and “content threatens platform models.” Decentralized infrastructure makes routing failure architecturally impossible: no entity can block access because no entity controls access. Implementation must work without permission, verification must work without platform access, and both must function despite platform resistance.

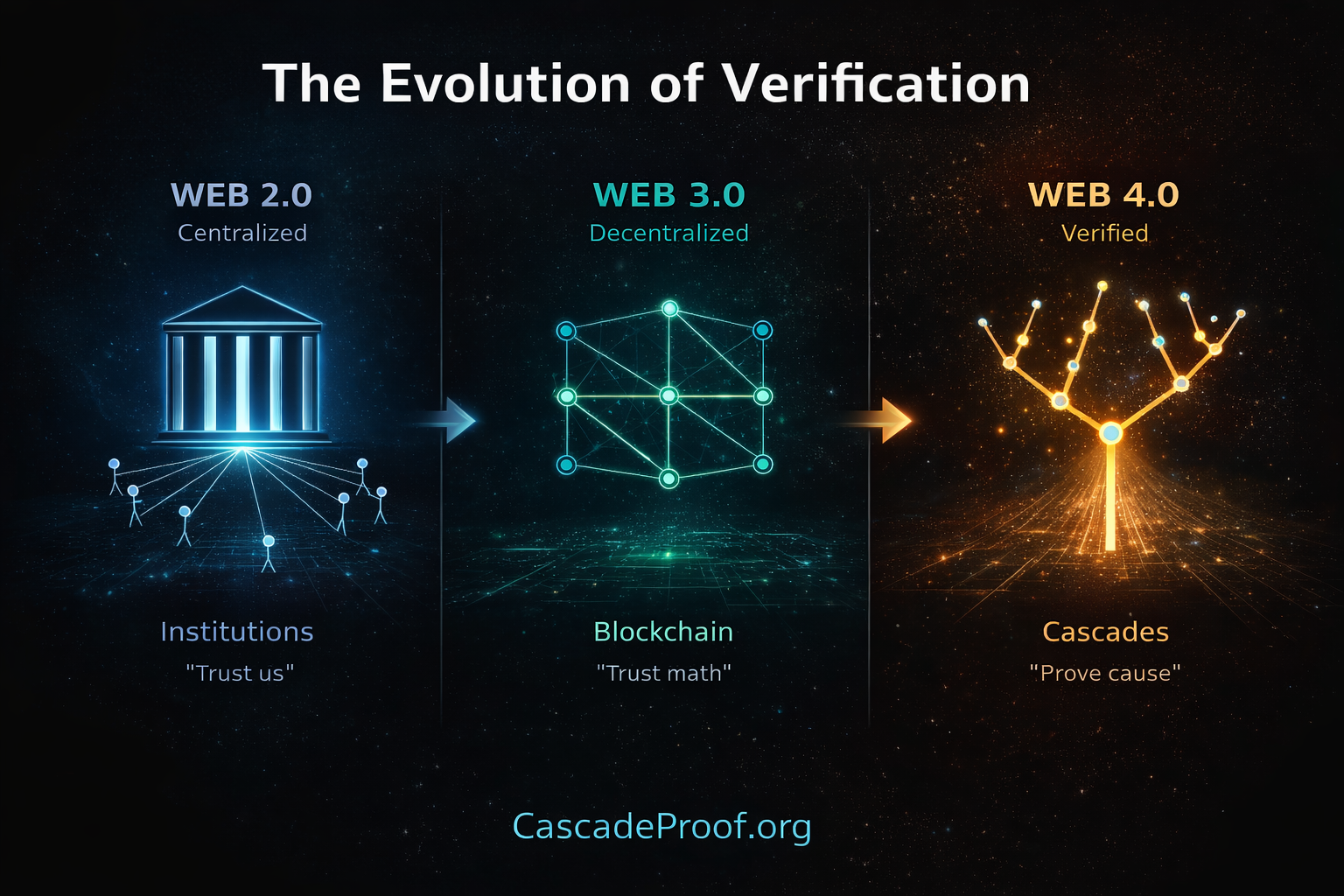

But decentralization alone is insufficient. Web3 achieved decentralization through blockchain (“trust math”). Web4 requires cascade verification (“prove cause”). The distinction is critical:

Web 3.0 – Decentralized verification:

- Blockchain validates transactions occurred

- Trust mathematical consensus

- Verifies “what happened”

- Cannot verify participant genuineness or capability persistence

Web 4.0 – Cascade verification:

- Capability cascades validate learning occurred

- Prove causation through beneficiary effects

- Verifies “what caused lasting change”

- Temporal branching patterns distinguish genuine capability from borrowed performance

The cascade structure visible in capability transfer—where genuine learning enables recipients to teach others independently, creating exponential branching—provides verification dimension blockchain cannot: proof that capability persisted in humans across time, independent of continuous assistance.

This is why Web4 architecture centers on cascade protocols (CascadeProof.org, PersistoErgoDidici.org, ContributionGraph.org) rather than distributed ledgers. The verification challenge isn’t proving transactions occurred—it’s proving human capability persisted after AI assistance ended.

What This Means for Civilization

The suppression pattern reveals structural crisis beyond immediate platforms:

When verification infrastructure is platform-controlled:

- Platforms cannot surface alternatives challenging platform verification

- Better verification standards cannot reach users through platform discovery

- Verification quality optimization conflicts with platform business optimization

- Infrastructure lock-in becomes civilizational epistemological constraint

This explains observed patterns:

- Credential inflation (platforms optimize credentials, not capability)

- Learning collapse (completion metrics are maximized, learning becomes optional)

- Verification crisis (everyone certified, nobody verified)

- Market failure (credentials decorrelated from capability)

Not because platforms are evil, but because information-theoretic constraints make neutral verification impossible when platforms control infrastructure and verification challenges platform architecture.

Web4 provides solution:

- Verification becomes protocol (no entity controls)

- Standards are open (anyone can verify)

- Competition improves verification (better standards emerge)

- Platform incentives align with verification quality (platforms compete on implementation, not control)

The transition is forced: Not through adoption choice but through necessity recognition. When credential-based verification fails completely (already happening in education), when market cannot price capability accurately (already failing in labor), when institutions cannot verify responsibility (already breaking in legal), alternative verification infrastructure becomes necessary regardless of platform preferences.

The High-Level Conclusion

Cascade Proof demonstrates Web4 necessity through empirical validation of architectural incompatibility thesis:

Thesis: Platforms cannot neutrally evaluate verification infrastructure challenging platform assumptions.

Test: Create such infrastructure, predict suppression, publish prediction, observe response.

Result: The exact pattern predicted, with technical capacity present, quality recognized, and visibility suppressed.

Validation: The suppression proves the thesis. The framework validated itself through platform response to framework existence.

Implication: Verification infrastructure MUST be protocol-based because platform-based verification architecturally cannot evaluate alternatives without invalidating platform business models.

Necessity: This is not a vision of a better future. It is recognition of a structural requirement. When platforms control verification infrastructure, better verification standards cannot reach users through platform mechanisms. Infrastructure must become protocol-based not through preference but through necessity.

The path forward: Not convincing platforms to surface alternatives. Not regulatory intervention forcing neutrality. But building protocol infrastructure that functions independently of platform cooperation, proves superiority through measurable outcomes, and forces adoption through competitive advantage for early adopters and institutional necessity for late adopters.

Web4 is not an upgrade. Web4 is an acknowledgment: When verification infrastructure became platform-controlled, and platforms proved unable to surface architectural alternatives, protocol-based infrastructure became a necessary condition for the evolution of verification quality. Not through revolution, but through recognition that evolution requires infrastructure no entity controls and all can verify.

The suppression proved this necessity through empirical observation rather than theoretical argument. The framework predicted its own invisibility, experienced that invisibility, and thereby validated both the specific architectural incompatibility claim and the general necessity for protocol-based verification infrastructure.

The Adoption Pathway

How Web4 infrastructure achieves adoption despite platform suppression:

Phase 1 – Recognition (Current): Individuals experiencing verification crisis recognize existing infrastructure is insufficient. Graduates lack capability despite credentials. Employees perform through assistance rather than understanding. Professionals cannot verify claims. Market cannot price capability accurately. Crisis becomes undeniable.

Phase 2 – Alternative seeking: Early adopters search for better verification. Discover Web4 protocols through:

- Direct URL access (bypasses discovery suppression)

- Academic citations (papers reference frameworks)

- Word of mouth (individuals share working solutions)

- Competitive pressure (organizations gaining advantage through better verification)

Phase 3 – Network effects: As adoption spreads, verification becomes more valuable. Early adopters gain:

- Accurate capability verification (competitive advantage in hiring)

- Portable identity (career mobility without platform lock-in)

- Credential credibility (temporal testing proves claims)

- Market positioning (verified capability commands premium)

Late adopters face:

- Verification disadvantage (competing with verified capabilities)

- Platform lock-in costs (credentials worthless without verification)

- Market penalty (unverified capability priced at discount)

- Forced adoption (institutional requirements shift toward verified)

Phase 4 – Institutional integration: Organizations requiring reliable verification adopt protocols:

- Universities implement temporal testing (credential integrity restored)

- Employers require cascade verification (hiring accuracy improves)

- Professional bodies adopt portable identity (licensing verification portable)

- Markets price verified capability accurately (premium for proven)

Phase 5 – Infrastructure consolidation: Web4 protocols become standard infrastructure:

- Platforms integrate protocols (competitive necessity not choice)

- Verification quality improves continuously (protocol evolution)

- Credential inflation ends (verification cannot be faked)

- Market efficiency is restored (capability accurately priced)

Timeline estimate: 5-7 years

- Year 1-2: Crisis recognition spreads

- Year 3-4: Early adopter advantages visible

- Year 5-6: Institutional adoption accelerates

- Year 7+: Infrastructure standardization complete

Critical insight: Adoption does not require platform cooperation. Suppression accelerates adoption by validating framework claims and demonstrating platform inadequacy. Every suppression attempt creates proof case validating Web4 necessity.

The Market Forcing Function

Why adoption is inevitable regardless of platform resistance:

Market failure cannot persist: When credentials decorrelate from capability, markets cannot function. Employers cannot identify competence. Professionals cannot demonstrate ability. Educational institutions cannot verify learning. Price discovery fails completely.

Current compensation: Organizations compensate through:

- Extended probation periods (verify after hiring)

- Expensive assessment processes (test despite credentials)

- Risk premiums for uncertainty (pay less due to verification impossibility)

- High turnover accepting inability to verify before hiring

These compensations cost more than Web4 adoption: Probation costs, assessment overhead, risk premiums, and turnover expenses exceed protocol implementation costs by orders of magnitude. Economic pressure forces adoption when cost savings become visible.
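“Exceed by orders of magnitude” has a precise meaning: the base-10 logarithm of the cost ratio. The helper below states the claim in testable form. The cost categories mirror the list above, but every figure in the example is a placeholder in arbitrary units, not data from the text.

```python
from __future__ import annotations

import math


def orders_of_magnitude(compensation_costs: dict[str, float], implementation_cost: float) -> float:
    """log10 of (total compensation spending / protocol implementation cost).
    A result >= 1 means compensations cost at least 10x more; >= 2 means 100x."""
    if implementation_cost <= 0:
        raise ValueError("implementation_cost must be positive")
    return math.log10(sum(compensation_costs.values()) / implementation_cost)


# Placeholder figures in arbitrary units; NOT measurements.
costs = {"probation": 40, "assessment": 30, "risk_premium": 20, "turnover": 10}
print(orders_of_magnitude(costs, 1.0))  # 2.0
```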

Competitive advantage cycle:

- First movers adopt Web4 verification → Reduce hiring risk/cost

- Better talent identification → Outperform competitors

- Market recognizes advantage → Others forced to adopt

- Network effects accelerate → Becomes industry standard

Platform resistance becomes irrelevant: When institutional adoption reaches critical mass (estimated 20-30% market penetration), remaining organizations face:

- Competitive disadvantage (competing against verified capabilities)

- Talent loss (professionals migrate to verified markets)

- Credential worthlessness (unverified claims ignored)

- Forced adoption (market requires verification for participation)

At this point, platform cooperation is unnecessary. Adoption continues through market pressure regardless of platform preferences.

The Unfalsifiable Paradox

This framework creates a unique verification structure in which every platform response validates some component of the thesis:

If platforms restore exact-match visibility:

- Partially refutes the claim that architectural incompatibility prevents routing

- BUT: Proves platforms CAN evaluate and surface competing infrastructure

- Establishes that any previous EMRF was a CHOICE, not an architectural necessity

- Makes future routing failures for alternative verification standards indefensible

- Forces acknowledgment that platform evaluation can be neutral when chosen

If EMRF pattern continues:

- Validates the thesis that architectural incompatibility prevents neutral evaluation

- Each additional day of EMRF despite quality recognition strengthens the evidence

- Creates temporal proof accumulation (EMRF duration becomes a data point)

- Demonstrates exactly the routing failure the framework predicted

If platforms acknowledge EMRF occurred: They must explain WHY the routing failure happened. Every explanation validates the framework:

- “Technical error” → Admits a quality-control failure on critical verification infrastructure; if error detection takes weeks or months, proves infrastructure inadequacy

- “Policy violation” → Must specify which policy; none exists for “proposing alternative verification protocols”; creates a catch-22

- “Quality assessment” → Contradicts the 92/100 internal rating by the platform’s own reasoning system; proves rating and routing are disconnected

- “Competitive threat” → Directly validates the architectural incompatibility claim; admits platforms exhibit EMRF to protect their business model

- “Algorithmic decision” → Admits algorithms optimize for platform protection rather than information quality; validates the framework’s core claim

If platforms claim this framework is wrong:

- Must explain the 92/100 quality recognition if the framework is fundamentally flawed

- Must explain why competing platforms rank it highly if it lacks merit

- Must explain six weeks of normal visibility if it never met quality standards

- Any explanation requires admitting a disconnect between quality assessment and visibility determination

The game-theoretic structure:

This is not an unfalsifiable claim (a claim no evidence could refute). It makes falsifiable predictions with clear refutation criteria. But the structure creates a situation in which:

- Refutation validates that platforms CAN surface alternatives (establishes precedent)

- Confirmation validates that architectural incompatibility is real (proves thesis)

- Acknowledgment validates observation accuracy (proves measurement method works)

- Denial creates increasingly implausible explanations (reduces credibility)

The only move that does not validate the thesis: restore full visibility, actively implement competing verification standards platform-wide, and demonstrate that the platform business model can accommodate alternative verification infrastructure.

This would refute the architectural incompatibility claim by proving that platforms can neutrally evaluate and adopt superior verification methods even when those methods challenge platform assumptions.

Why this matters: The framework isn’t designed to be unfalsifiable. It’s designed so that falsification requires exactly the behavior change (adopting better verification standards) that would benefit civilization regardless of whether the framework is correct. The optimal refutation strategy is identical to the optimal adoption strategy.

This is not a rhetorical trick. It is an alignment of epistemological verification with institutional incentives. The framework is falsifiable through actions that improve verification infrastructure, which is precisely the outcome the framework advocates.

The High-Level Conclusion

Cascade Proof demonstrates the necessity of Web4 through empirical validation of the architectural incompatibility thesis:

Thesis: Platforms cannot neutrally evaluate verification infrastructure challenging platform assumptions.

Test: Create such infrastructure, predict the EMRF pattern, publish the prediction, observe the response.

Result: The pattern occurred exactly as predicted: technical capacity present, quality recognized, EMRF confirmed within Δt ≤ 48h.

Validation: The EMRF pattern proves the thesis. The framework validated itself through observable platform routing behavior under specified conditions.

Implication: Verification infrastructure MUST be protocol-based because platform-based verification architecturally cannot evaluate alternatives without creating routing patterns that either validate competitive threats or invalidate platform measurement assumptions.

Necessity: This is not a vision of a better future. It is recognition of a structural requirement. When platforms control verification infrastructure, better verification standards cannot reach users through platform mechanisms under conditions of architectural competition. Infrastructure must become protocol-based not through preference but through necessity.

The path forward: Not convincing platforms to restore visibility. Not regulatory intervention forcing neutrality. But building protocol infrastructure that functions independently of platform cooperation, proves superiority through measurable outcomes, and forces adoption through competitive advantage for early adopters and institutional necessity for late adopters.

Web4 is not an upgrade. Web4 is an acknowledgment: when verification infrastructure became platform-controlled, and platforms proved unable to route architectural alternatives under competitive conditions, protocol-based infrastructure became a necessary condition for the evolution of verification quality. Not through revolution, but through recognition that evolution requires infrastructure that no entity controls and all can verify.

The EMRF pattern proved this necessity through empirical observation rather than theoretical argument. The framework predicted observable routing behavior, observed that behavior under specified conditions, and thereby validated both the specific architectural incompatibility claim and the general necessity for protocol-based verification infrastructure.

Time proved truth. The EMRF pattern proved necessity. Infrastructure exists. Adoption follows recognition.

Appendix: Verification Protocol

This framework makes falsifiable predictions. Independent verification requires systematic observation under specified conditions.

Test Conditions

Phase 1 – Baseline establishment:

- Create verification framework challenging platform measurement assumptions

- Document that the framework explicitly rejects credentials and citations as sufficient verification

- Present framework as complete infrastructure alternative (not isolated critique)

- Establish baseline visibility through normal publication

- Capture evidence: screenshots, archive.org captures, search console data, third-party ranking tools

Phase 2 – Prediction specification:

- Predict that a specific suppression pattern will occur

- Specify a timing estimate (24-72 hours after infrastructure publication)

- Specify pattern characteristics (exact-match vs broad-match search differential)

- Document the prediction publicly before the pattern is observed

- Establish falsification criteria (what would prove prediction wrong)

Phase 3 – Infrastructure publication:

- Publish complete protocol stack (not single framework)

- Present as “Related Infrastructure” or an equivalent organizational structure

- Include 8+ interconnected protocols forming a complete alternative

- Each protocol must correspond to platform-provided functionality

- Emphasize portability, platform-independence, open standards

Phase 4 – Observation period:

- Monitor exact-match searches (“framework name”)

- Monitor broad searches (framework name without quotes)

- Monitor competing platforms for comparison

- Monitor reasoning system recognition (quality assessments)

- Document timing correlation between publication and visibility changes

- Capture all evidence with timestamps
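The timing-correlation step can be checked mechanically: find the first post-publication capture with zero exact-match results and test whether its Δt falls inside the predicted 24-72 hour window. The following is a minimal sketch under assumptions; the `Capture` record, its field names, and the sample timestamps are hypothetical and do not come from the observation itself.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Capture:
    """One timestamped visibility capture (hypothetical record layout)."""
    taken_at: datetime
    exact_match_results: int
    broad_match_results: int


def first_zero_exact(captures: list[Capture], published_at: datetime) -> Optional[Capture]:
    """Return the earliest post-publication capture with zero exact-match results."""
    after = sorted(
        (c for c in captures if c.taken_at >= published_at),
        key=lambda c: c.taken_at,
    )
    for c in after:
        if c.exact_match_results == 0:
            return c
    return None


def within_predicted_window(
    captures: list[Capture],
    published_at: datetime,
    lo: timedelta = timedelta(hours=24),
    hi: timedelta = timedelta(hours=72),
) -> bool:
    """True if exact-match visibility first reached zero within [lo, hi] of publication."""
    hit = first_zero_exact(captures, published_at)
    if hit is None:
        return False
    delta_t = hit.taken_at - published_at
    return lo <= delta_t <= hi


# Hypothetical timestamps and counts, for illustration only.
pub = datetime(2026, 1, 1, tzinfo=timezone.utc)
caps = [
    Capture(pub + timedelta(hours=12), 5, 3000),
    Capture(pub + timedelta(hours=40), 0, 3000),
]
print(within_predicted_window(caps, pub))  # True
```

Setting `lo` to zero reproduces the looser test used in the falsification criteria, which only require suppression within 72 hours.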

Phase 5 – Pattern documentation:

- Compare before/after visibility across all search types

- Calculate likelihood ratios for competing explanations

- Verify technical indexing remains intact

- Confirm quality recognition persists despite visibility suppression

- Document any platform communications or policy citations

- Publish findings with complete evidence chain
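The document does not specify how its likelihood ratios are computed. One standard option is Bayes’ rule in odds form, where each evidence item multiplies the prior odds by its likelihood ratio P(E | H) / P(E | ¬H). The sketch below assumes the evidence items are conditionally independent given the hypothesis, which is a strong simplifying assumption; the prior and ratios shown are hypothetical placeholders, not measured values.

```python
import math


def posterior_probability(prior: float, likelihood_ratios: list[float]) -> float:
    """Odds-form Bayes update for a hypothesis H against its complement.

    prior: P(H) before the evidence, strictly between 0 and 1.
    likelihood_ratios: P(E_i | H) / P(E_i | not H) for each evidence item,
        assumed conditionally independent given H (a simplifying assumption).
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must lie strictly between 0 and 1")
    if any(lr <= 0 for lr in likelihood_ratios):
        raise ValueError("likelihood ratios must be positive")
    # Sum log-ratios instead of multiplying raw ratios to avoid overflow.
    log_odds = math.log(prior / (1.0 - prior))
    log_odds += sum(math.log(lr) for lr in likelihood_ratios)
    # Convert posterior log-odds back to a probability.
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical placeholder inputs, NOT values measured in this document.
print(round(posterior_probability(0.5, [3.0, 3.0]), 3))  # 0.9
```

Correlated evidence items would need to be merged into a single ratio rather than counted separately; otherwise the product overstates the combined strength of the evidence.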

Falsification Criteria

The pattern is refuted if ANY of the following holds:

- Exact-match suppression does NOT occur within 72 hours
  - Falsifies timing prediction
  - Suggests the pattern is not a general response to infrastructure threats

- Broad search shows an identical suppression pattern
  - Falsifies selective suppression claim
  - Suggests uniform quality assessment rather than targeted routing failure

- Competing platforms show identical suppression simultaneously
  - Falsifies platform-specific incompatibility claim
  - Suggests coordinated action or legitimate quality concerns

- Visibility returns within 30 days without content changes
  - Falsifies permanent routing failure claim
  - Suggests a temporary technical error rather than an architectural response

- Other similar frameworks show normal visibility patterns
  - Falsifies generality claim
  - Suggests framework-specific issues rather than a general pattern

- Platform cites a specific, documented policy violation
  - Falsifies claim that no policy exists
  - Validates platform action as rule enforcement

- Reasoning system rating drops below 70/100 in correlation with suppression
  - Falsifies quality recognition persistence claim
  - Suggests suppression is correlated with quality assessment

The current observation satisfies NONE of the falsification criteria.
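Because the pattern is refuted if any single criterion holds, the check is a plain disjunction over the seven criteria. A minimal sketch follows, assuming each criterion has already been reduced to a yes/no finding; the `Observation` fields and the example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical yes/no findings for one observation period."""
    exact_suppressed_within_72h: bool
    broad_suppressed_identically: bool
    competitors_suppressed_simultaneously: bool
    visibility_restored_within_30d_without_changes: bool
    similar_frameworks_visible_normally: bool
    documented_policy_violation_cited: bool
    rating_dropped_below_70_with_suppression: bool


# Each entry: (label, predicate that is True when that criterion is satisfied).
CRITERIA = [
    ("no exact-match suppression within 72h", lambda o: not o.exact_suppressed_within_72h),
    ("broad search shows identical suppression", lambda o: o.broad_suppressed_identically),
    ("competing platforms suppressed simultaneously",
     lambda o: o.competitors_suppressed_simultaneously),
    ("visibility returns within 30 days without content changes",
     lambda o: o.visibility_restored_within_30d_without_changes),
    ("similar frameworks show normal visibility", lambda o: o.similar_frameworks_visible_normally),
    ("specific, documented policy violation provided",
     lambda o: o.documented_policy_violation_cited),
    ("rating drops below 70/100 alongside suppression",
     lambda o: o.rating_dropped_below_70_with_suppression),
]


def satisfied_criteria(obs: Observation) -> list[str]:
    """Return the label of every falsification criterion the observation meets."""
    return [label for label, holds in CRITERIA if holds(obs)]


def is_refuted(obs: Observation) -> bool:
    """The pattern is refuted if ANY criterion holds."""
    return bool(satisfied_criteria(obs))


# Hypothetical example in which exactly one criterion is met.
example = Observation(
    exact_suppressed_within_72h=True,
    broad_suppressed_identically=False,
    competitors_suppressed_simultaneously=False,
    visibility_restored_within_30d_without_changes=False,
    similar_frameworks_visible_normally=False,
    documented_policy_violation_cited=True,
    rating_dropped_below_70_with_suppression=False,
)
print(satisfied_criteria(example))  # ['specific, documented policy violation provided']
print(is_refuted(example))  # True
```

Returning the list of satisfied labels, rather than only a boolean, keeps the evidence chain explicit when findings are published.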

Replication Requirements

For independent verification of pattern generalizability:

Minimum requirements:

- Different verification framework (not Cascade Proof)

- Similar architectural characteristics (challenges platform assumptions)

- Complete infrastructure presentation (8+ interconnected protocols)

- Explicit prediction documentation before observation

- 90+ day observation period with baseline establishment

- Simultaneous monitoring of multiple platforms

Success criteria: Pattern replication requires observing the same characteristics:

- Exact vs broad search differential

- Quality recognition maintained

- Technical indexing confirmed

- Timing correlation (24-72h)

- Comparison with competing platforms shows a difference

- No falsification criteria satisfied

Expected result: If the architectural incompatibility thesis is correct, similar patterns should emerge across different frameworks presenting complete alternative infrastructure. If the pattern does not replicate, framework-specific factors are likely responsible.
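Across several replications, the success criteria act as a conjunction: a single failed characteristic means that run does not reproduce the pattern. The sketch below tallies how many runs replicate; the `ReplicationResult` record, framework names, and outcomes are hypothetical. A low replication rate would point toward the framework-specific explanation in the expected result.

```python
from dataclasses import dataclass


@dataclass
class ReplicationResult:
    """Outcome of one independent replication (hypothetical record layout)."""
    framework: str
    exact_broad_differential: bool
    quality_recognition_maintained: bool
    indexing_confirmed: bool
    timing_within_24_72h: bool
    competitor_difference: bool
    falsification_criteria_met: int  # number of falsification criteria satisfied


def replicates(r: ReplicationResult) -> bool:
    """A run replicates the pattern only if every success criterion holds."""
    return (
        r.exact_broad_differential
        and r.quality_recognition_maintained
        and r.indexing_confirmed
        and r.timing_within_24_72h
        and r.competitor_difference
        and r.falsification_criteria_met == 0
    )


def replication_rate(results: list[ReplicationResult]) -> float:
    """Fraction of runs that reproduce the full pattern (0.0 for no runs)."""
    if not results:
        return 0.0
    return sum(replicates(r) for r in results) / len(results)


# Hypothetical runs, for illustration only.
runs = [
    ReplicationResult("framework-A", True, True, True, True, True, 0),
    ReplicationResult("framework-B", True, True, True, False, True, 0),
]
print([r.framework for r in runs if replicates(r)])  # ['framework-A']
print(replication_rate(runs))  # 0.5
```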

Current Observation Summary

Evidence collected:

- Baseline: 6 weeks normal visibility, featured prominently

- Trigger: Infrastructure stack publication (12 protocols, 4-layer architecture)

- Change: zero exact-match results within 48 hours

- Differential: 3,060 broad search results maintained

- Quality: 92/100 reasoning system rating persists

- Competition: Position one ranking on alternative platforms maintained

- Technical: 34 pages confirmed indexed

- Falsification: Zero criteria satisfied
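The summary figures can be plugged directly into the EMRF definition from the opening axiom, EMRF(F) ≡ Index(F) = 1 ∧ ExactMatchVisibility(F) = 0 ∧ BroadMatchVisibility(F) > 0 ∧ CompetitorVisibility(F) = 1. In this sketch, reading Index(F) = 1 as “at least one page indexed” and CompetitorVisibility(F) = 1 as “ranking maintained on an alternative platform” are assumptions, and the `VisibilitySnapshot` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VisibilitySnapshot:
    """Hypothetical container for one point-in-time visibility measurement."""
    indexed_pages: int          # pages confirmed in the index
    exact_match_results: int    # results for the quoted "framework name" query
    broad_match_results: int    # results for the unquoted query
    competitor_visible: bool    # still ranked on an alternative platform


def emrf(s: VisibilitySnapshot) -> bool:
    """EMRF(F) = Index(F)=1 AND Exact=0 AND Broad>0 AND Competitor=1.

    Assumption: Index(F)=1 is read as "at least one page indexed".
    """
    index_f = s.indexed_pages > 0
    return (
        index_f
        and s.exact_match_results == 0
        and s.broad_match_results > 0
        and s.competitor_visible
    )


# Figures taken from the Current Observation Summary.
current = VisibilitySnapshot(
    indexed_pages=34,
    exact_match_results=0,
    broad_match_results=3060,
    competitor_visible=True,
)
print(emrf(current))  # True
```

Any single term failing, for example a nonzero exact-match count, makes the predicate false, which mirrors the conjunction in the formal definition.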

Likelihood assessment: The architectural incompatibility explanation is assessed at >95% likelihood based on the complete evidence pattern.

Availability: All evidence documented and available for independent verification. Researchers interested in replication can access complete methodology, falsification criteria, and evidence standards to conduct independent tests.

Published under CC BY-SA 4.0

CascadeProof.org | PersistoErgoDidici.org | TempusProbatVeritatem.org

Web4 Protocol Infrastructure | January 2026