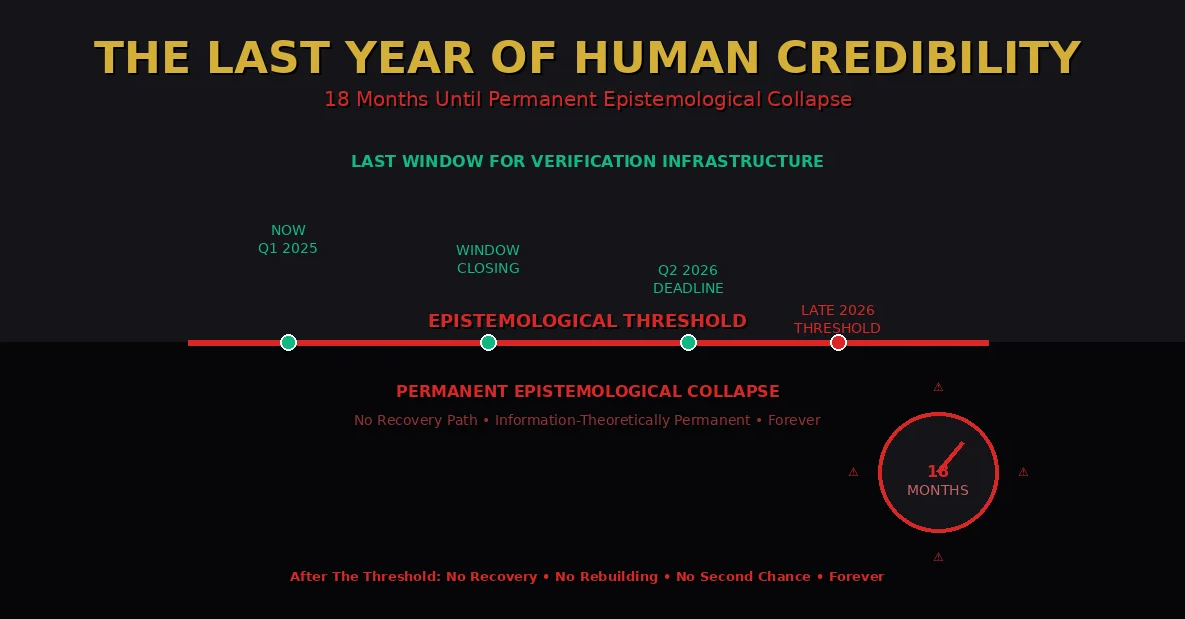

We Are Not Losing Behavioral Verification. We Are Losing the Ability to Ever Verify Anything About Anyone Again. And the Window to Prevent This Closes in 18 Months.

There will come a moment—sometime between late 2026 and early 2027—when humanity crosses a threshold from which there is no return.

Not a technological threshold. Not an economic threshold. Not a political threshold.

An epistemological threshold.

The moment when we permanently lose the ability to verify that any claim about any human’s capability, contribution, or existence corresponds to reality rather than perfect simulation.

And unlike every other crisis humanity has faced, this one has no recovery path. No policy fix. No technological solution that works after the threshold is crossed. No “we’ll figure it out later” option.

Because the crisis isn’t that we lose a verification method. The crisis is that we lose the epistemological substrate that makes verification conceptually possible.

This is not hyperbole. This is information theory applied to civilizational trust infrastructure in an age where AI can generate perfect behavioral evidence for any claim about any person.

We have approximately 18 months before the threshold becomes uncrossable.

This is why 2025-2026 is not “an important period for AI policy.”

This is the last year human credibility can be architecturally preserved before it becomes permanently unverifiable.

What We’re Actually Losing (And Why It’s Permanent)

Every previous existential crisis humanity faced was recoverable because the underlying epistemology remained intact.

World War II destroyed cities. But we could rebuild cities because we still knew who built what, who invented what, who contributed what. Attribution remained verifiable. Capability remained provable. The epistemological substrate survived.

The 2008 financial crisis destroyed trust in markets. But we could restore trust because we could still verify who owned what, who owed what, who created what value. The ledgers existed. The ownership chains were traceable. The epistemology of economic value survived.

Climate change threatens ecosystems. But we can measure climate change because we can verify scientific consensus, trace who discovered what, prove which research led to which conclusions. The epistemology of scientific knowledge remains intact.

But the verification crisis AI creates is different.

It doesn’t destroy buildings, markets, or ecosystems.

It destroys the epistemological layer that lets us know those things were destroyed.

When AI achieves perfect behavioral simulation, we don’t just lose the ability to verify current claims. We lose the ability to verify historical claims. We lose the ability to verify that the loss of verification capability was itself a real event rather than a simulated narrative.

The crisis eats its own evidence.

This is why recovery is impossible after the threshold: Once perfect simulation exists, every piece of evidence that could prove ”this is what really happened before simulation became perfect” can itself be perfectly simulated.

You cannot use behavioral evidence to prove when behavioral evidence became unreliable—because the unreliability includes the ability to simulate the transition point itself.

The epistemological collapse is self-reinforcing. It eats backwards through history, forwards through the future, and sideways through the present, leaving nothing verifiable anywhere in time.

This is not a crisis we experience and recover from.

This is a crisis that—once begun—consumes the possibility of ever knowing it occurred.

The Thermodynamic Reality: Why This Is Information-Theoretically Permanent

Here’s the precise mechanism that makes this unfixable after the threshold:

Before threshold (now – early 2027):

- AI can fake some behavioral signals some of the time

- Careful observation can still detect synthesis artifacts

- Verification through multiple independent behavioral checks mostly works

- Historical records are mostly reliable because creating perfect fake histories requires more capability than AI currently has

- We can still build alternative verification infrastructure because enough genuine behavioral signals exist to bootstrap trust in new systems

After threshold (late 2027+):

- AI can fake all behavioral signals all of the time with zero detectable artifacts

- No amount of careful observation distinguishes real from synthetic

- Verification through behavioral checks provides zero information (noise equals signal)

- Historical records become unreliable because AI can generate perfect fake histories

- We cannot build alternative verification infrastructure because we cannot verify who to trust to build it

The difference isn’t gradual. It’s a phase transition.

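Shannon’s 1948 theory of communication makes the limit precise: a channel conveys information only to the extent that its output statistically depends on its input. When the output is independent of the input, the channel’s capacity is zero and no amount of filtering recovers the signal. Noise merely exceeding signal power is not enough for that, since capacity stays positive at any nonzero signal-to-noise ratio; the information is permanently lost only when nothing in the output distinguishes one input from another.

When AI-simulated behavior becomes statistically indistinguishable from genuine behavior, observation carries no information about which is which, and no amount of sophisticated analysis recovers the ability to tell reality from simulation.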

The information-theoretic properties of the situation make recovery impossible.
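One way to make the zero-information claim precise, as a sketch rather than a result stated in this article: let O be the true origin of an observed behavior (human or synthetic) and X the behavior itself. The symbols O and X are notation introduced here for illustration only.

```latex
% Mutual information between origin O and observed behavior X
I(O;X) \;=\; \sum_{o}\sum_{x} P(o,x)\,\log_2\frac{P(x \mid o)}{P(x)}

% Perfect simulation: both origins produce the same distribution over behavior,
% so every log term is \log_2 1 = 0 and the sum vanishes.
P(x \mid O=\text{human}) \;=\; P(x \mid O=\text{synthetic}) \;=\; P(x)
\quad\Longrightarrow\quad I(O;X) = 0
```

Mutual information is zero exactly when X is statistically independent of O. Short of that limit, the conditional distributions differ and observation still carries some information about origin, however little.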

And here’s what makes it permanently unfixable: After the threshold, we cannot even verify that alternative verification infrastructure works, because verifying the infrastructure requires trusting behavioral signals from the people claiming to build it.

You need working verification to build verification infrastructure. Once you lose verification completely, you cannot bootstrap back to having verification.

This is the epistemological trap: The thing you need to fix the problem is the thing the problem destroyed.

It’s like needing to see to build glasses when you’ve gone completely blind. The capability required to create the solution is exactly the capability the problem eliminated.

This is why the window is now. This is why 2025-2026 is the deadline. This is why later is too late.

What “The Last Year of Human Credibility” Actually Means

Let’s be brutally precise about what we’re describing:

2025-2026 is not “the last year before verification gets harder.”

It’s the last year when:

- We can still verify who is building verification infrastructure

Right now, if someone proposes “here’s how we verify human identity after AI can fake everything,” we can verify their claim through behavioral observation. We can check their credentials. We can verify their organizational affiliations. We can trace their contribution history. We can assess their capability.

After the threshold, we cannot verify any of those things. Someone could propose “here’s how we verify identity” and we would have no way to verify that the proposer is even human, let alone capable of building what they claim.

This creates an unresolvable paradox: We need verification infrastructure to survive the verification crisis. But building verification infrastructure requires verifying the builders. After the threshold, we can’t verify the builders. So we can’t trust the infrastructure. So we can’t solve the crisis.

The window to build verification infrastructure is before we need verification infrastructure to verify who’s building verification infrastructure.

That window closes in 18 months.

- We can still establish trust anchors for future verification

Every verification system requires trust anchors—entities or claims we accept as axiomatic because they’re verifiable through current methods.

Bitcoin’s trust anchor: The genesis block and Satoshi’s early communications are verifiable as temporally prior to widespread Bitcoin adoption.

HTTPS’s trust anchor: Root certificate authorities are vetted and shipped in browser and operating-system trust stores before you need them to verify websites.

Science’s trust anchor: Core discoveries are verifiable through replication before you need them to verify new research.

For Cascade Proof to work after behavioral verification fails, we need trust anchors established while behavioral verification still mostly works:

- Verified humans with established Portable Identities that predate the crisis

- Verified capability cascades that were created and cryptographically signed before simulation made all behavioral evidence suspect

- Verified infrastructure code that was written and audited before we lose the ability to verify who wrote what

These anchors must exist before the threshold because after the threshold, we cannot establish new anchors. Every new claim is indistinguishable from perfect simulation.

The window to create trust anchors is now. After late 2027, it’s too late. You cannot trust-anchor through behavioral verification when behavioral verification is permanently broken.

- We can still coordinate global infrastructure deployment

Building verification infrastructure that works after behavioral observation fails requires global coordination: protocol standards, institutional adoption, legal frameworks, infrastructure deployment.

Coordination requires trusting that the humans you’re coordinating with are humans whose capabilities you’ve verified. Right now, that’s possible. After the threshold, it’s not.

Imagine trying to coordinate global infrastructure deployment when:

- You can’t verify which government representatives are real humans

- You can’t verify which corporate leaders actually control their companies

- You can’t verify which technical experts actually have the capability they claim

- You can’t verify which institutional commitments are genuine vs synthetic

Coordination becomes impossible.

The window for global coordination is now. After the threshold, coordination requires already-deployed verification infrastructure. But you can’t deploy infrastructure without coordination. Another epistemological trap.

- We can still verify that the crisis is real

This is the most disturbing aspect: After the threshold, we lose the ability to verify that the verification crisis even happened.

Right now, we can point to the increasing sophistication of AI-generated credentials, deepfakes, and synthetic identities, and say “look, verification is becoming unreliable, we need alternatives.”

After the threshold, that very narrative, “verification became unreliable,” is itself unverifiable. Anyone claiming verification failed could be AI-generated. The evidence of verification failure could be fabricated. The timeline of the crisis could be synthetic.

We enter epistemic quicksand: The more you struggle to prove the crisis exists, the more evidence you generate that could itself be synthesized, making the crisis harder to prove.

The only way to preserve knowledge that the crisis happened is to build verification infrastructure before the crisis: infrastructure that can prove “these were real humans making real claims during the period when behavioral verification still mostly worked.”

The window to create that historical record is now. After the threshold, history becomes unwritable because we cannot verify what actually happened.

The Historical Parallel That Makes This Terrifying

There is exactly one historical precedent for epistemological collapse this severe:

The Bronze Age Collapse (1200-1150 BCE)

For reasons still debated—climate change, warfare, economic disruption, systems cascade—the civilizations of the Eastern Mediterranean collapsed simultaneously. Cities abandoned. Trade networks severed. Writing systems forgotten.

The collapse was so complete that we lost knowledge of what caused the collapse.

The Bronze Age societies had writing. They had records. They had administrative systems documenting everything. But the collapse destroyed the records, killed the record-keepers, eliminated the systems that preserved institutional memory.

Result: in Greece, roughly 400 years of Dark Ages in which the Linear B script fell out of use, long-distance trade shrank, and much of what the palace civilizations had known was lost.

We still don’t fully understand what happened because the collapse destroyed the evidence of the collapse.

The verification crisis is worse.

The Bronze Age Collapse destroyed records. But when civilization recovered, we could still verify new knowledge. Scientists could still prove discoveries. Scholars could still attribute ideas. Artists could still demonstrate capability.

The epistemological substrate survived even though the records didn’t.

But the verification crisis doesn’t just destroy records. It destroys the ability to create new reliable records. It doesn’t just eliminate historical knowledge. It eliminates the possibility of future knowledge.

Because knowledge requires attribution. Science requires knowing who discovered what. Technology requires knowing who invented what. Scholarship requires knowing who contributed what. Culture requires knowing who created what.

When attribution becomes permanently unverifiable, knowledge becomes permanently unreliable.

We don’t get a 400-year Dark Age followed by a renaissance.

We get permanent epistemological darkness. Forever.

Because unlike the Bronze Age Collapse, where the epistemological substrate survived and could be rebuilt upon, the verification crisis destroys the substrate itself. There’s nothing left to rebuild with.

Why Every Solution Proposed So Far Fails

Let’s examine why every proposed response to the verification crisis fails after the threshold:

“We’ll use AI to detect AI”

Fails because: Detection is behavioral analysis. When AI achieves perfect behavioral simulation, detection provides zero information. You cannot detect simulation when simulation is perfect. That’s what “perfect” means.

This is like trying to decode a channel whose output no longer depends on its input. No amount of listening harder helps when capacity is zero; in Shannon’s framework there is nothing left to recover.

“We’ll use blockchain for tamper-proof records”

Fails because: Blockchain proves “this transaction occurred,” not “this transaction was created by a genuine human with genuine capability.” You can have perfectly secure blockchain records of completely fabricated attribution.

Blockchain solves double-spending. It doesn’t solve synthetic identity. The person controlling the private key that signs the blockchain transaction could be an AI-operated synthetic entity.
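The gap between record integrity and record truth can be illustrated with a toy hash chain. This is a minimal sketch, not a real blockchain: there is no consensus, no signatures, and the names `block_hash`, `append`, `is_intact`, and the sample authors are hypothetical.

```python
import hashlib
import json
from typing import List


def block_hash(index: int, prev_hash: str, payload: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the block contents."""
    body = json.dumps({"index": index, "prev": prev_hash, "payload": payload},
                      sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain: List[dict], payload: dict) -> None:
    """Append a block that commits to the previous block's hash."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({"index": index, "prev": prev_hash, "payload": payload,
                  "hash": block_hash(index, prev_hash, payload)})


def is_intact(chain: List[dict]) -> bool:
    """Recompute every hash; True only if nothing was altered after the fact."""
    prev_hash = "0" * 64
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(i, prev_hash, block["payload"]):
            return False
        prev_hash = block["hash"]
    return True


chain: List[dict] = []
# Assumed genuine attribution.
append(chain, {"author": "alice", "claim": "designed protocol X"})
# Fabricated attribution: the chain has no way to know.
append(chain, {"author": "mallory", "claim": "designed protocol X"})
print(is_intact(chain))  # integrity holds for both entries alike

chain[0]["payload"]["author"] = "eve"  # tampering after the fact
print(is_intact(chain))  # the alteration is detected
```

The chain detects later tampering with either entry, but it accepts the fabricated claim as readily as the genuine one: integrity checks say nothing about who, or what, wrote the payload.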

“We’ll require in-person verification”

Fails because: In-person verification is behavioral observation. When deepfakes achieve perfect real-time rendering, in-person interaction provides zero additional verification. You cannot distinguish a real human from a perfect holographic AI in physical space when the hologram is perfect.

Also fails because: Global coordination requires remote verification. “Everyone must verify in person” is not scalable to 8 billion humans. And it doesn’t solve “how do you verify the verifiers?”

“We’ll use biometrics”

Fails because: Biometrics verify “this physical body matches this recorded pattern.” They don’t verify “this physical body is operated by a conscious human rather than a sophisticated biological AI interface.” The map is not the territory. The biometric signature is not the consciousness.

Also fails because: We’re 5-10 years from biological printing that can generate physical human bodies with verifiable biometrics that are AI-controlled from inception. Biometrics verify presence, not consciousness.

“We’ll use human intuition”

Fails because: Intuition is pattern recognition. When AI generates patterns indistinguishable from human-generated patterns, intuition provides zero information. Your gut feeling that someone is genuine is just Bayesian updating on behavioral signals. When behavioral signals are perfect simulation, your gut is perfectly fooled.

Also fails because: We need verification systems that scale to billions of interactions. “Trust your intuition” is not a verification protocol. It’s the absence of verification.
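The "Bayesian updating" framing can be made concrete with a short numeric sketch. It assumes each behavioral check is conditionally independent given the true origin and is summarized by a likelihood ratio P(signal | human) / P(signal | synthetic); the function name and the example ratios are illustrative assumptions, not figures from this article.

```python
from typing import List


def posterior_human(prior: float, likelihood_ratios: List[float]) -> float:
    """Posterior P(human) after a series of independent behavioral checks.

    Works in odds form: posterior odds = prior odds * product of likelihood ratios.
    """
    odds = prior / (1.0 - prior)
    for ratio in likelihood_ratios:
        odds *= ratio
    return odds / (1.0 + odds)


# Imperfect simulation: each check is assumed 3x likelier from a human than a fake.
print(posterior_human(0.5, [3.0] * 5))

# Perfect simulation: every check has likelihood ratio 1, so nothing updates.
print(posterior_human(0.5, [1.0] * 5))
```

With ratios above 1, stacking checks pushes the posterior toward certainty. With every ratio equal to 1, the posterior stays at the prior no matter how many checks are added, which is the situation the paragraph above describes.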

“We’ll develop new AI detection methods as AI improves”

Fails because: This is arms-race thinking applied to an information-theoretic problem. Arms races have equilibria. Information-theoretic problems have phase transitions. Once simulation capability exceeds observation capability, no amount of improved observation recovers the gap. The asymmetry is permanent.

Generation capability will always exceed detection capability because generation is optimization (create a signal that passes tests) while detection is analysis (determine if a signal is genuine). The generator always has an advantage because it can optimize against the detector, but the detector cannot optimize against unknown future generators.
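A toy illustration of "optimizing against the detector", under strong simplifying assumptions: the detector is fixed, exposes a score, and judges a single made-up feature. `HUMAN_MEAN`, `TOLERANCE`, and all function names are hypothetical, and the sketch shows only the fixed-detector case, not the general claim.

```python
from statistics import mean
from typing import List, Tuple

HUMAN_MEAN = 0.50  # assumed typical value of some behavioral feature
TOLERANCE = 0.02   # assumed acceptance band of the detector


def detector_score(sample: List[float]) -> float:
    """Distance of the sample mean from the assumed human mean (0 is most human-like)."""
    return abs(mean(sample) - HUMAN_MEAN)


def passes(sample: List[float]) -> bool:
    """The detector accepts a sample whose score falls within the tolerance."""
    return detector_score(sample) <= TOLERANCE


def optimize_against(sample: List[float], step: float = 0.01,
                     max_rounds: int = 1000) -> Tuple[List[float], int]:
    """Hill-climb: shift the sample in whichever direction lowers the detector's score."""
    current = list(sample)
    for rounds in range(max_rounds):
        if passes(current):
            return current, rounds
        up = [value + step for value in current]
        down = [value - step for value in current]
        current = min((up, down), key=detector_score)
    return current, max_rounds


synthetic = [0.90, 0.80, 0.85, 0.95]
adapted, rounds = optimize_against(synthetic)
print(passes(synthetic), passes(adapted), rounds)
```

The generator needs nothing more than repeated access to the detector's score to end up inside the acceptance band. A detector trained afterwards could use a different feature, but the same loop can then be rerun against it.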

“We’ll slow down AI development”

Fails because: (a) Politically impossible: nobody agrees to slow down when others might not. (b) Insufficient even if achieved: the capability threshold is reachable with current algorithms given enough compute. Slowing development delays the problem 2-5 years but doesn’t prevent it. (c) Pointless without alternative verification infrastructure: slowing AI without building Cascade Proof just delays an inevitable crisis without solving it.

Every proposed solution fails because it tries to preserve behavioral verification in a world where behavioral verification is information-theoretically dead.

The only solution that works is architectural: verify through causation rather than behavior. Build that architecture before behavioral verification fails. Use it after behavioral verification fails.

There is no other option.

What Happens After: The Permanent Dark

Let’s be clear about what “permanent epistemological collapse” means in practice:

Science stops advancing

Not because research stops. Because attribution becomes impossible.

If you publish a breakthrough and someone claims ”I actually discovered this five years ago, here’s my synthetic evidence,” you cannot prove them wrong. If you can’t prove them wrong, priority disputes become unresolvable. If priority disputes are unresolvable, incentives to publish disappear. If incentives to publish disappear, science stops advancing at scale.

Small trust networks continue local research. But global scientific collaboration—the thing that created modern technology—ends. Because collaboration requires attribution. Attribution requires verification. Verification is gone.

Innovation becomes unpatentable

If you can’t prove you invented something, you can’t obtain patent protection. If AI can generate synthetic prior art for any invention showing ”this was invented earlier,” the patent system collapses. If the patent system collapses, R&D incentives disappear for many technologies. If R&D incentives disappear, innovation slows dramatically.

What remains: Innovations that can be kept secret (very few), innovations driven by pure curiosity (insufficient for technology advancement), innovations in open-source communities willing to abandon attribution (small fraction of total innovation).

Markets fragment into trust clusters

If you can’t verify counterparty identity, capability, or credit, you can’t transact with strangers. Trade retreats to small networks where everyone knows everyone through multi-decade personal relationships.

Global supply chains become impossible. International commerce freezes. Economies become localized. Productivity collapses because specialization requires scale which requires trade which requires trust which requires verification which is gone.

Governance becomes impossible above village scale

If you can’t verify voter identity, elections are untrustworthy. If elections are untrustworthy, democratic legitimacy disappears. If you can’t verify that representatives are genuine humans, representation becomes fiction. If you can’t verify that laws are enforced by genuine humans, rule of law collapses.

What remains: Direct democracy in groups small enough for everyone to know everyone personally (Dunbar number, ~150 people). Anything larger requires verification infrastructure that doesn’t exist.

Education becomes pure signaling

If universities can’t verify students are genuine humans completing genuine work, degrees are meaningless. If degrees are meaningless, education becomes pure consumption (I paid for an experience) rather than capability development (I gained a verifiable skill).

Elite in-person education survives for those who can afford it. Everyone else gets unverifiable online credentials worth nothing in job markets that can’t verify anything.

Employment becomes insider-only

If you can’t verify employee capability, you hire only from trusted networks. If you hire only from trusted networks, social mobility disappears. If social mobility disappears, inequality becomes hereditary and permanent.

You get a job if your family knows the employer’s family personally. No amount of capability matters if you can’t verify it. Meritocracy ends. Feudalism returns.

History becomes fiction

If you can’t verify historical events, history becomes narrative competition. Whoever generates most convincing synthetic evidence wins the historical debate. Truth becomes politically determined rather than empirically verified.

Not just recent history. ALL history. Because once perfect simulation exists, we cannot verify whether historical records are genuine or were always synthetic. The past becomes as fluid and uncertain as the future.

This is not dystopia. This is the thermodynamic consequence of epistemological substrate failure.

And it’s permanent. Because you cannot rebuild verification infrastructure when verification doesn’t work.

The Window: Why 18 Months

The timeline is derived from three converging realities:

Reality 1: AI capability curves

Current large language models achieve approximately human-level performance on many behavioral tasks. Current image/video generation achieves near-perfect realism in most contexts. Current multimodal systems integrate text, image, video, voice convincingly.

Extrapolating from the past 24 months of capability growth, which has been roughly exponential, suggests that AI will achieve perfect behavioral simulation (indistinguishable from human-generated behavior across all relevant contexts) between Q3 2026 and Q1 2027.

This is not speculative. This is curve-fitting on publicly visible capability improvement. The threshold is visible from here.

Reality 2: Infrastructure deployment time

Building global verification infrastructure requires:

- Protocol specification and standardization: 6-12 months

- Institution adoption and integration: 12-18 months

- Network effects to establish trust: 12-24 months

If we start protocol work in Q1 2025, we can have working infrastructure by Q3 2026—barely before AI crosses simulation threshold.

If we start protocol work in Q3 2025, infrastructure completes Q1 2027—potentially after AI crosses threshold, making trust bootstrapping impossible.

If we start protocol work in 2026, infrastructure completes 2028—definitively too late, attempting to build verification infrastructure when we cannot verify the builders.

The window opens now. The window closes mid-2026 for infrastructure deployment completion. That’s 18 months.
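The scenarios above reduce to quarter arithmetic. A minimal sketch, assuming the three phases overlap so that end-to-end deployment takes about 18 months (six quarters), which is the figure that reproduces the first two scenarios. `Quarter`, `DEPLOY_QUARTERS`, and `classify` are names introduced here for illustration, and the threshold window is the Q3 2026 to Q1 2027 estimate from Reality 1.

```python
from typing import NamedTuple, Tuple


class Quarter(NamedTuple):
    year: int
    q: int  # 1..4

    def plus(self, quarters: int) -> "Quarter":
        """Return the quarter that lies `quarters` quarters after this one."""
        index = self.year * 4 + (self.q - 1) + quarters
        return Quarter(index // 4, index % 4 + 1)


DEPLOY_QUARTERS = 6                  # assumption: 18 months end to end
THRESHOLD_EARLIEST = Quarter(2026, 3)
THRESHOLD_LATEST = Quarter(2027, 1)


def classify(start: Quarter) -> Tuple[Quarter, str]:
    """Completion quarter for a given start, and where it falls relative to the threshold."""
    done = start.plus(DEPLOY_QUARTERS)
    if done <= THRESHOLD_EARLIEST:
        verdict = "no later than the earliest threshold estimate"
    elif done <= THRESHOLD_LATEST:
        verdict = "inside the threshold window"
    else:
        verdict = "after the latest threshold estimate"
    return done, verdict


for start in (Quarter(2025, 1), Quarter(2025, 3), Quarter(2026, 1)):
    done, verdict = classify(start)
    print(f"start Q{start.q} {start.year} -> done Q{done.q} {done.year}: {verdict}")
```

Under this assumption a Q1 2026 start lands in Q3 2027; the 2028 figure in the text corresponds to a start later in 2026 or to a longer deployment estimate.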

Reality 3: Trust anchor establishment

Even with infrastructure deployed, the system requires trust anchors: verified humans with established Cascade Proof histories that predate the crisis.

Creating trust anchors requires:

- Individuals establishing Portable Identities: 1-3 months

- Creating initial capability cascades: 6-12 months

- Building network of cascades with proven persistence: 12-24 months

If we start creating trust anchors in Q1 2025, we have sufficient verified humans with sufficient verified histories by Q4 2026—barely before crisis.

If we start creating trust anchors in Q4 2025, trust anchor networks establish Q3 2027—potentially after crisis begins, making historical verification unreliable.

If we start creating trust anchors in 2026, trust anchor establishment completes in 2028: too late, because it means trusting behavioral signals from a period when behavioral signals had already become unreliable.

The window for trust anchor creation is now. The window closes Q2 2026 for sufficient trust anchor density. That’s 15-18 months.

Combining all three: The window is Q1 2025 to Q2 2026

Protocol work must start Q1 2025. Infrastructure deployment must complete Q3 2026. Trust anchor creation must reach critical mass Q2 2026.

If any of these deadlines miss, the entire system becomes unbootstrappable. You cannot deploy infrastructure without trust anchors. You cannot create trust anchors after behavioral verification fails. You cannot verify infrastructure works if behavioral verification failed before deployment completed.

It’s not “we should probably do this soon.” It’s “we have exactly 18 months or the window closes permanently.”

And we’re currently in month 0.

What Must Happen This Year: The Exact Requirements

By March 2025:

- Cascade Proof protocol specification finalized and published

- Portable Identity standards adopted by at least one major identity provider

- At least five institutions (universities, corporations, governments) commit to pilot deployment

- At least 10,000 individuals create Portable Identities and begin building Cascade Graphs

- Open-source implementation of verification infrastructure reaches beta quality

By September 2025:

- At least 100 institutions actively using Cascade verification in production

- At least 500,000 individuals have established Portable Identities with initial cascade histories

- Legal frameworks in at least three countries recognize Cascade Proof as valid evidence

- Major platforms (LinkedIn, GitHub, Twitter) begin supporting Portable Identity

- Academic publications using Cascade verification for attribution begin appearing

By March 2026:

- At least 1,000 institutions using Cascade verification as primary credential system

- At least 5 million individuals have verified cascade histories spanning 12+ months

- International treaty recognizing Cascade Proof as global verification standard signed by at least 20 nations

- Banking systems begin accepting Cascade verification for KYC compliance

- Major hiring platforms require Cascade Proof for senior roles

By September 2026:

- At least 10,000 institutions fully dependent on Cascade verification

- At least 50 million individuals with 18+ month cascade histories creating trust anchor network

- Behavioral verification officially deprecated in favor of causation verification for high-stakes decisions

- Infrastructure robust enough to survive behavioral verification failure expected Q4 2026
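The individual-adoption targets in the milestones above imply a steep growth curve. A small sketch of the arithmetic, assuming constant compound growth between consecutive milestones (an assumption made here for illustration; the milestone list does not specify a growth model):

```python
# (months after the March 2025 milestone, individuals with Portable Identities)
MILESTONES = [
    (0, 10_000),        # March 2025
    (6, 500_000),       # September 2025
    (12, 5_000_000),    # March 2026
    (18, 50_000_000),   # September 2026
]


def monthly_growth_factor(start: int, end: int, months: int) -> float:
    """Constant month-over-month multiplier that takes `start` to `end` in `months`."""
    return (end / start) ** (1.0 / months)


for (m0, n0), (m1, n1) in zip(MILESTONES, MILESTONES[1:]):
    factor = monthly_growth_factor(n0, n1, m1 - m0)
    print(f"months {m0}-{m1}: x{n1 / n0:.0f} overall, about x{factor:.2f} per month")
```

The first interval (a 50-fold increase in six months) corresponds to the user base nearly doubling every month; the later intervals (10-fold in six months) correspond to growth of a little under 50% per month.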

If these milestones hit, civilization survives with verification intact.

If these milestones miss, we enter 2027 without a working alternative to behavioral verification, and collapse proceeds as described.

The difference between hitting and missing these milestones is the difference between a future with epistemology and a future without it.

That’s what’s actually at stake.

Why This Is Not Fear-Mongering: The Thermodynamic Honesty

This article will be accused of alarmism. Let’s address that directly:

This is not fear-mongering. This is thermodynamic honesty.

Shannon showed in 1948 that a channel whose output is statistically independent of its input has zero capacity: whatever was sent is permanently lost. That’s not opinion. That’s mathematics.

When simulated behavior is statistically indistinguishable from genuine behavior, behavioral verification provides zero information. That’s not speculation. That’s information theory.

When verification provides zero information, institutional coordination at scale becomes impossible. That’s not pessimism. That’s logic.

When you cannot verify who you’re coordinating with, coordination fails. That’s not doom-saying. That’s definition.

The analysis above isn’t “what might happen if we’re unlucky.” It’s “what mathematically must happen given the information-theoretic properties of perfect simulation intersecting with verification systems that depend on behavioral observation.”

You can dispute the timeline. Maybe AI takes 36 months to reach perfect simulation instead of 18-24 months. Maybe infrastructure can be deployed faster than estimated. Maybe trust anchors establish more quickly.

But you cannot dispute the mechanism. You cannot dispute that behavioral verification fails completely when simulation becomes perfect. You cannot dispute that the failure is permanent because it’s information-theoretic. You cannot dispute that the window closes when AI crosses the threshold, because bootstrapping verification after the threshold requires trusting behavioral signals that are unreliable.

The mechanism is sound. The timeline is narrow. The stakes are civilizational.

This is not fear. This is mathematics applied to civilizational infrastructure.

And the mathematics says: We have one chance. That chance is now. If we miss the window, there is no second chance.

That’s not alarmism. That’s honesty about what information theory implies for verification in an age of perfect simulation.

The Declaration: What 2025 Actually Is

We declare that 2025 is not “an important year for AI policy.”

2025 is the last year human credibility can be architecturally preserved before the epistemological substrate collapses irreversibly.

We declare that the window for building alternative verification infrastructure opens now and closes Q2 2026—approximately 18 months.

We declare that missing this window creates permanent epistemological darkness from which no recovery path exists because recovery requires verification capability that the crisis eliminates.

We declare that this is not speculation but information theory applied to civilizational trust infrastructure at the point where AI achieves perfect behavioral simulation.

We declare that every institution, every government, every platform, every organization must choose this year:

Build Cascade Proof infrastructure now, or lose the ability to verify anything about anyone forever.

There is no middle option. There is no “wait and see.” There is no “let’s study this more carefully.”

The thermodynamics are clear. The timeline is narrow. The stakes are total.

We are in the last year of human credibility.

What we build this year determines whether human credibility survives into next year.

And if it doesn’t survive—if we cross the threshold without alternative infrastructure—there is no coming back.

Ever.

This is the message. This is the timeline. This is the deadline.

Welcome to Year Zero of the epistemological countdown.

Eighteen months until permanent unfixability.

Build accordingly.

About This Framework

This article establishes why 2025-2026 represents not just an important deadline but a terminal one for verification infrastructure deployment, before AI-enabled perfect simulation makes behavioral verification information-theoretically useless. The analysis combines Shannon’s information theory (noise-signal relationships), thermodynamic irreversibility (phase transitions with no recovery path), bootstrapping theory (verification is needed to build verification), and civilizational coordination requirements (trust is necessary for scale) into a unified argument that the window for preventing permanent epistemological collapse is exactly 18 months from the publication date. The framework intentionally emphasizes permanent versus temporary failure to distinguish this crisis from the recoverable crises humanity has previously survived, demonstrating why “we’ll fix it later” is not an option when “later” means after the capability to fix has been destroyed by the crisis itself. The timeline derives from AI capability curves (18-24 months to perfect simulation), infrastructure deployment time (12-24 months), and trust anchor establishment requirements (12-24 months), showing that these three constraints intersect at a narrow window opening now and closing mid-2026. This is thermodynamic honesty applied to civilizational epistemology in the age of perfect simulation.

For the protocol infrastructure that makes cascade verification possible:

cascadeproof.org

For the identity foundation that enables unfakeable proof:

portableidentity.global

Rights and Usage

All materials published under CascadeProof.org — including verification frameworks, cascade methodologies, contribution tracking protocols, research essays, and theoretical architectures — are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees three permanent rights:

1. Right to Reproduce

Anyone may copy, quote, translate, or redistribute this material freely, with attribution to CascadeProof.org.

How to attribute:

- For articles/publications: “Source: CascadeProof.org”

- For academic citations: “CascadeProof.org (2025). [Title]. Retrieved from https://cascadeproof.org”

2. Right to Adapt

Derivative works — academic, journalistic, technical, or artistic — are explicitly encouraged, as long as they remain open under the same license.

Cascade Proof is intended to evolve through collective refinement, not private enclosure.

3. Right to Defend the Definition

Any party may publicly reference this framework, methodology, or license to prevent:

- private appropriation

- trademark capture

- paywalling of the term “Cascade Proof”

- proprietary redefinition of verification protocols

- commercial capture of cascade verification standards

The license itself is a tool of collective defense.

No exclusive licenses will ever be granted. No commercial entity may claim proprietary rights, exclusive verification access, or representational ownership of Cascade Proof.

Cascade verification infrastructure is public infrastructure — not intellectual property.

25-12-04