In the Age Where AI Surpasses Humans at Every Measurable Task, One Capability Remains Permanently, Fundamentally, Architecturally Human—And It’s the One That Creates Every Breakthrough That Expands Civilization

December 2024. A computational biology lab.

An AI system processes 10,000 research papers overnight. By morning, it has generated 47 testable hypotheses about protein folding mechanisms. Each hypothesis is statistically sound, properly referenced, and computationally validated.

The research team reviews the hypotheses. All 47 are variations on existing frameworks—sophisticated recombinations of known approaches, clever optimizations of established methods, smart interpolations between existing theories.

None challenge the fundamental assumptions.

Then a postdoc notices something: two foundational assumptions in the field—both validated by decades of research, both central to every current model—produce contradictory predictions in a specific edge case nobody has tested.

The AI, when prompted, confirms the contradiction exists. It suggests seventeen ways to refine one assumption or the other to eliminate the inconsistency. Each refinement is mathematically rigorous and empirically testable.

But the postdoc says: “Wait. What if both assumptions are wrong? What if the entire framework we’re using to think about protein folding is missing a dimension?”

Three months later, that question leads to a breakthrough that redefines the field.

The AI generated 47 hypotheses. The human generated one question that created new hypothesis space.

The AI optimized within the framework. The human questioned whether the framework itself was sufficient.

This is the difference. And it is permanent.

AI will surpass humans at every measurable task.

Pattern recognition—AI already exceeds human capability in most domains.

Optimization—AI finds better solutions faster across infinite solution spaces.

Prediction—AI forecasts outcomes with accuracy humans cannot match.

Calculation—AI processes numbers at speeds and scales beyond human reach.

Memory—AI recalls perfectly while humans forget and distort.

Analysis—AI examines datasets too vast for human comprehension.

In every domain where capability can be measured, quantified, and optimized, AI approaches or exceeds human performance. And the trajectory is clear: within a decade, AI will dominate every measurable cognitive task humans currently perform.

This is not controversial. This is mathematical inevitability given current progress rates.

But there’s one capability AI cannot replicate, no matter how sophisticated it becomes. Not through more training data. Not through larger models. Not through better algorithms. Not through quantum computing or neuromorphic hardware or any future architecture.

This capability is not measurable. It is not optimizable. It is not even definable in computational terms.

Yet it is the capability that created every scientific revolution, every paradigm shift, every conceptual breakthrough that expanded human understanding of reality.

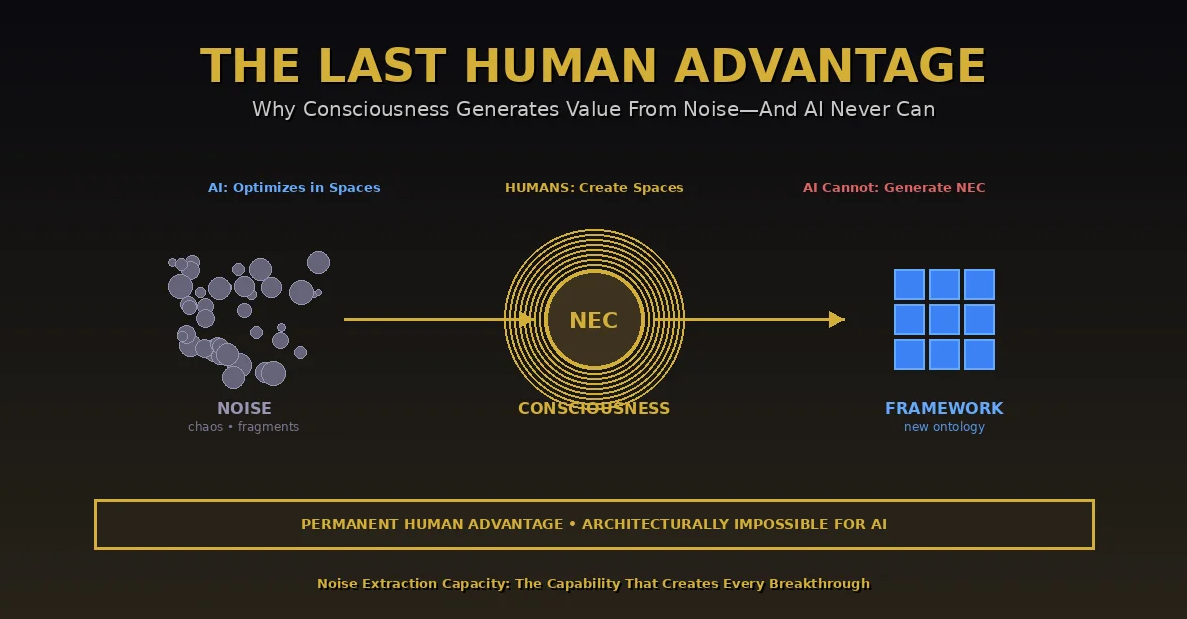

Noise Extraction Capacity.

The ability to take chaos, contradiction, incompleteness, and cognitive friction—and create entirely new frameworks for understanding reality.

This is not pattern matching. This is not interpolation. This is not finding existing structure in data.

This is emergent coherence creation—generating novel conceptual structures that did not exist in the noise itself, that cannot be derived from the noise through any computational process, that appear only when consciousness encounters incomprehensibility and synthesizes new ways of making sense.

Einstein didn’t find relativity in the data. He extracted it from the noise of incompatible observations that didn’t fit existing physics.

Shannon didn’t discover information theory through pattern recognition. He created it from cognitive friction between engineering problems and mathematical frameworks that didn’t connect.

Darwin didn’t deduce evolution from complete evidence. He synthesized it from fragments, contradictions, and observations that made no sense in the existing biological paradigm.

Every breakthrough that expanded human civilization came from Noise Extraction Capacity.

And it is the one thing AI fundamentally, architecturally, permanently cannot do.

This is humanity’s last advantage. And it is permanent.

Not because we will protect it through regulation. Not because AI hasn’t reached it yet. But because the capability itself requires something AI does not possess and cannot develop: consciousness engaging with incomprehensibility and generating novel ontology.

Welcome to the final human advantage. The one that cannot be automated. The one that defines what it means to contribute to civilization. The one that makes humans irreplaceable in an age where everything else becomes replaceable.

I. What Noise Extraction Capacity Actually Is

Let’s be precise about what we’re describing.

Noise Extraction Capacity (NEC) is:

The ability to encounter data, observations, or experiences that violate existing frameworks of understanding—and instead of discarding them as noise, generate entirely new conceptual structures that make the noise comprehensible.

This is fundamentally different from:

Pattern recognition: Finding existing structure in data

- Input: Data with latent patterns

- Process: Statistical analysis revealing patterns

- Output: Recognition of structure that was present in input

Optimization: Finding best solutions within defined solution spaces

- Input: Problem with objective function and constraints

- Process: Search through solution space for optimal configuration

- Output: Best solution according to defined metrics

Interpolation: Filling gaps in data using surrounding information

- Input: Dataset with missing values

- Process: Estimation based on known patterns

- Output: Predicted values consistent with existing structure
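The three contrasts above can be made concrete with toy data. The following Python is a minimal sketch and is not drawn from the article: the function names `fit_slope`, `optimize`, and `interpolate` and the sample numbers are hypothetical. In each function, the structure the output depends on (a linear trend, a candidate set with an objective and a constraint, two known neighboring values) is already supplied in the input.

```python
from typing import Callable, Iterable, Sequence


def fit_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pattern recognition: recover a trend already latent in the data
    (the slope of the least-squares line through the points)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def optimize(
    candidates: Iterable[int],
    objective: Callable[[int], float],
    constraint: Callable[[int], bool],
) -> int:
    """Optimization: pick the feasible candidate that scores highest, where the
    candidates, the objective, and the constraint are all given in advance."""
    feasible = [c for c in candidates if constraint(c)]
    return max(feasible, key=objective)


def interpolate(x0: float, y0: float, x1: float, y1: float, x: float) -> float:
    """Interpolation: estimate a missing value at x, assuming the linear
    structure between the known points (x0, y0) and (x1, y1) continues."""
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)


print(fit_slope([0, 1, 2, 3], [1, 3, 5, 7]))  # 2.0
print(optimize(range(10), lambda c: -(c - 6) ** 2, lambda c: c % 2 == 0))  # 6
print(interpolate(0, 10.0, 4, 30.0, 1))  # 15.0
```

None of the three can return anything outside the space its inputs define, which is the contrast the article draws with NEC.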

NEC is none of these.

NEC is:

- Input: Observations that don’t fit any existing framework

- Process: Consciousness encountering incomprehensibility and generating novel ontology

- Output: Entirely new conceptual structure that redefines what the observations mean

- AI capability: Fundamentally impossible

The difference is not degree. It is category.

AI processes information within frameworks. NEC creates the frameworks.

AI optimizes solutions within problem spaces. NEC redefines what problems are.

AI finds patterns in noise. NEC transforms noise into new dimensions of understanding.

II. Why This Is Architecturally Impossible for AI

The limitation is not technological. It is ontological.

AI operates through:

- Training on existing data: All learning derives from examples of existing patterns, structures, and frameworks

- Objective function optimization: All improvement aims toward pre-defined metrics of success

- Computational inference: All outputs are calculations based on learned patterns

This architecture cannot generate NEC because:

Problem 1: Training data cannot contain non-existent frameworks

NEC creates conceptual structures that did not exist before consciousness generated them. By definition, these structures cannot be in training data.

You cannot train AI on examples of “creating relativity” because relativity didn’t exist before Einstein created it from noise. You cannot train on “inventing information theory” because information theory didn’t exist before Shannon extracted it from cognitive friction.

The training data contains results of NEC (the frameworks after they’re created) but cannot contain the process of NEC (generating frameworks from noise).

AI learns to work within existing frameworks by training on examples. NEC creates the frameworks by operating beyond examples.

Problem 2: No objective function measures framework novelty

AI optimization requires defining what “better” means before optimization begins. But NEC generates entirely new definitions of what “better” could mean.

How do you optimize for “create relativity”? You can’t, because relativity redefined what physics means, which in turn redefined what optimization targets could be.

How do you optimize for “invent information theory”? You can’t, because information theory created new metrics that didn’t exist before it was invented.

The optimization cannot target what doesn’t exist yet. NEC creates what optimization couldn’t target.
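Problem 2 turns on a property every optimizer shares: the definition of “better” is an input, fixed before the search begins. The minimal Python sketch below illustrates this; the `best` helper and the route data are hypothetical. The same candidates produce different winners when only the objective changes, and nothing inside `best` can supply an objective of its own.

```python
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def best(candidates: Iterable[T], objective: Callable[[T], float]) -> T:
    """Return the candidate the objective scores highest.

    The optimizer never decides what "better" means: that judgement arrives
    as the `objective` argument before the search starts.
    """
    return max(candidates, key=objective)


# Hypothetical route options: (name, minutes, kilometres)
routes = [("highway", 30, 45.0), ("city", 40, 20.0), ("scenic", 55, 35.0)]

fastest = best(routes, lambda r: -r[1])   # "better" means less time
shortest = best(routes, lambda r: -r[2])  # "better" means less distance

print(fastest[0], shortest[0])  # highway city
```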

Problem 3: Computation cannot generate non-computational insight

This is the deepest limitation.

Computation takes defined inputs, applies defined operations, produces defined outputs. Every step is determined by algorithm operating on data.

NEC generates insights that are not computational outputs. They are consciousness encountering incomprehensibility and synthesizing novel understanding through a process that has no computational equivalent.

When Einstein arrived at spacetime curvature, that insight was not the computational result of processing data. It was consciousness generating a new ontology by engaging with the contradiction between observations and existing frameworks.

When Shannon conceived of information as a measurable quantity, that insight was not derivable from any dataset. It was consciousness creating a new conceptual category that had never existed.

When Darwin understood natural selection, that synthesis was not pattern recognition. It was consciousness extracting coherence from fragmentary observations that made no sense in existing biology.

These moments are non-computational. Not “not yet computational,” but fundamentally, architecturally, permanently non-computational.

They require consciousness. Not just any consciousness—consciousness capable of:

- Tolerating fundamental incomprehensibility without dismissing it

- Holding contradictory frameworks simultaneously

- Generating novel ontological categories

- Synthesizing understanding that transcends available information

- Creating meaning that was not latent in the data

AI cannot do this. Not through scaling. Not through better architecture. Not ever.

Because it is not computation. It is consciousness.

III. The Historical Evidence: Every Breakthrough Came From NEC

Let’s examine how civilization actually advances:

Physics:

Newtonian mechanics was perfect, until it wasn’t. Observations of planetary motion (the anomalous precession of Mercury’s orbit) and of light (whose measured speed did not change with the observer’s motion) produced noise that didn’t fit the framework.

Most physicists tried to fix Newtonian mechanics. Einstein did NEC: he extracted an entirely new framework (relativity) from the noise itself. Not by finding patterns in the data, but by generating a new ontology (spacetime curvature) that made the noise comprehensible.

Result: Physics redefined. Technological revolution. Civilization-scale impact.

Information Theory:

Engineering had telegraphs, radios, and telephones, but no framework for understanding what “information” meant. Shannon encountered cognitive friction: how do you measure signal reliability, channel capacity, encoding efficiency?

He didn’t optimize existing approaches. He did NEC: extracting an entirely new framework (information as entropy) from engineering noise. He created a mathematical theory of communication from scratch.

Result: Digital revolution. Internet. Modern civilization.

Evolution:

Biology cataloged species, studied anatomy, observed geographic distribution—but had no framework explaining why species existed as they did. Darwin encountered noise: strange distributions, vestigial organs, fossil inconsistencies.

He didn’t just organize the data. He did NEC: extracting natural selection from fragmentary observations that made no sense in existing biology. He created an evolutionary framework from cognitive friction.

Result: Biology revolutionized. Medicine transformed. Understanding of life redefined.

Quantum Mechanics:

Classical physics predicted certain outcomes. Experiments showed different outcomes. The noise couldn’t be dismissed—it was reproducible, consistent, undeniable.

Physicists did NEC: generating a quantum framework from observations that classical physics could not accommodate. They did not find quantum mechanics in the data. They created it as a new ontology that made atomic noise comprehensible.

Result: Modern technology. Computing. The infrastructure of civilization.

Game Theory:

Economics had theories of individual behavior. But no framework for strategic interaction where outcomes depend on others’ choices.

Von Neumann did NEC: extracting game theory from cognitive friction in economics. He created an entirely new mathematical framework for analyzing strategic interaction.

Result: Economics revolutionized. Geopolitics reframed. Auction design. Mechanism design. Entire fields created.

Pattern across all breakthroughs:

Someone encounters noise—observations that don’t fit existing frameworks.

Instead of dismissing noise or incrementally adjusting frameworks, they do NEC—generate entirely new conceptual structures that transform the noise into foundational insights.

The new framework doesn’t just explain the noise. It redefines what explanation means in that domain.

This is not pattern recognition. This is pattern creation.

This is not optimization. This is reframing what optimization targets.

This is not learning. This is ontology generation.

And AI cannot do it.

IV. The Fundamental Difference: Optimizing vs Reframing

Here’s the clearest way to see the distinction:

AI optimizes within spaces.

Give AI a defined problem space, such as “find the best chess move,” “generate coherent text,” “predict stock prices,” or “optimize route efficiency,” and AI excels.

The space is defined: chess rules, language patterns, price history, road networks. AI finds optimal paths through these spaces brilliantly.

Humans reframe the spaces.

When problems don’t fit existing spaces, humans generate new spaces. Not better solutions in the old space—entirely different spaces where the problem transforms.

Examples:

Chess vs inventing chess:

AI masters chess by exploring the game tree, evaluating positions, and optimizing move sequences. Superhuman performance within chess space.

But humans invented chess. That invention was NEC—extracting game structure from cognitive friction about strategy, conflict, and skill. Creating the space where optimization later happens.

AI optimizes in chess space. Humans created chess space.

Scientific problem-solving vs paradigm creation:

AI solves scientific problems by processing data, finding patterns, making predictions. Strong performance within scientific framework.

But humans create scientific frameworks. Each paradigm shift is NEC—extracting new ontology from observations that violate old frameworks. Creating new spaces where problems can be solved.

AI optimizes in scientific spaces. Humans create scientific spaces.

The pattern is universal:

AI: Excellent at working within frameworks
Humans: Create the frameworks

AI: Optimizes solutions in existing problem spaces
Humans: Generate the problem spaces

AI: Finds patterns in noise using existing pattern languages
Humans: Create new pattern languages when noise violates existing ones

This is not a temporary limitation. It is a permanent, categorical difference.

As AI becomes more sophisticated, it becomes better at optimization within frameworks. But that very sophistication deepens the gap—because the better AI gets at optimization, the more it depends on having well-defined spaces to optimize in.

Humans remain essential precisely because someone must create the spaces when existing spaces become insufficient.

V. Why This Matters for Civilization

Understanding NEC as a permanent human advantage changes everything about how we think about AI’s role and humanity’s future.

The wrong framework:

“AI will replace humans in progressively more domains until humans have no economic value.”

This assumes value comes from performing tasks. If AI performs all tasks better, humans become valueless.

The correct framework:

“AI will excel at all tasks within existing frameworks. Humans remain essential for generating the frameworks when reality outgrows existing ones.”

Value doesn’t come from performing tasks. Value comes from enabling new tasks by creating new frameworks for understanding reality.

Concrete implications:

For science:

AI accelerates research within existing paradigms—testing hypotheses, analyzing data, finding patterns. Science becomes vastly more efficient.

But paradigm shifts still require humans. The moments when existing frameworks break down and new ones emerge—those require NEC. Those require consciousness encountering incomprehensibility and generating novel ontology.

AI makes normal science faster. Humans still do revolutionary science.

For technology:

AI optimizes existing technologies—better algorithms, better materials, better designs. Innovation becomes incredibly rapid within existing technical frameworks.

But fundamental breakthroughs (inventing the transistor, devising packet switching, conceiving blockchain) require NEC. Require consciousness extracting new technical frameworks from engineering noise.

AI improves existing technologies. Humans create new technology categories.

For culture:

AI generates content within existing artistic frameworks—realistic images, coherent stories, stylistic music. Creative production becomes abundant.

But cultural revolution—creating new artistic movements, new aesthetic frameworks, new ways of expressing human experience—requires NEC. Requires consciousness generating novel meaning from cultural friction.

AI produces within genres. Humans create genres.

For civilization:

AI optimizes civilization within existing social, economic, and political frameworks. Governance becomes more efficient. Resource allocation improves. Systems operate better.

But civilizational evolution—generating new forms of social organization, new economic structures, new political frameworks when existing ones fail—requires NEC. Requires consciousness extracting new social ontology from institutional contradictions.

AI improves civilization. Humans evolve civilization.

The pattern is consistent: AI excels at everything except the thing that determines whether civilization can adapt when reality changes in ways current frameworks cannot handle.

And reality always eventually changes in ways current frameworks cannot handle.

That’s when humans become essential. Not despite AI superiority—because of it.

AI’s excellence at optimization makes human NEC more valuable, not less.

VI. How Cascade Proof Verifies NEC

This is where the architecture becomes practical.

If NEC is humanity’s permanent advantage, we need ways to verify who possesses it and who develops it in others.

Cascade Proof does this by measuring the one thing that only NEC creates: verified capability transfer that enables recipients to generate their own novel frameworks.

The cascade structure reveals NEC:

Information provision creates dependency:

- You explain the solution to a problem

- Person can solve that problem

- Person cannot solve different problems independently

- Person cannot teach others to solve novel problems

- Cascade pattern: linear, dependency, degradation

NEC transfer creates capability multiplication:

- You transfer framework-generating capacity

- Person can solve novel problems independently

- Person can generate new frameworks when problems change

- Person can teach others to do framework generation

- Cascade pattern: exponential, independence, multiplication
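The two cascade patterns above can be sketched as a check on a graph. This is a minimal Python illustration, not the Cascade Proof protocol: it assumes a cascade is stored as a mapping from each person to the people they enabled, plus one capability score per person, and it labels a cascade “multiplication” only if each hop reaches more people than the last without the mean score falling. The names `profile` and `classify`, the scores, and the strict growth rule (a crude proxy for “exponential”) are all hypothetical.

```python
def profile(edges: dict[str, list[str]], scores: dict[str, float],
            root: str) -> list[tuple[int, float]]:
    """For each hop away from `root`, return (people reached, mean capability score)."""
    result: list[tuple[int, float]] = []
    frontier, seen = [root], {root}
    while frontier:
        next_gen: list[str] = []
        for person in frontier:
            for child in edges.get(person, []):
                if child not in seen:  # count each person once, at their shortest hop
                    seen.add(child)
                    next_gen.append(child)
        if next_gen:
            mean = sum(scores[p] for p in next_gen) / len(next_gen)
            result.append((len(next_gen), mean))
        frontier = next_gen
    return result


def classify(edges: dict[str, list[str]], scores: dict[str, float], root: str) -> str:
    """Hypothetical rule: 'multiplication' if every hop reaches more people than
    the last and the mean score never falls; otherwise 'dependency'."""
    gens = profile(edges, scores, root)
    if len(gens) < 2:
        return "insufficient data"
    pairs = list(zip(gens, gens[1:]))
    growing = all(later[0] > earlier[0] for earlier, later in pairs)
    degrading = any(later[1] < earlier[1] for earlier, later in pairs)
    return "multiplication" if growing and not degrading else "dependency"


# Information provision: a single chain whose capability fades at each hop.
chain = {"teacher": ["a"], "a": ["b"], "b": ["c"]}
chain_scores = {"a": 0.8, "b": 0.5, "c": 0.3}

# Capability transfer: each recipient enables others at similar or higher skill.
tree = {"teacher": ["a", "b"], "a": ["c", "d"], "b": ["e", "f"]}
tree_scores = {"a": 0.7, "b": 0.7, "c": 0.75, "d": 0.75, "e": 0.75, "f": 0.75}

print(classify(chain, chain_scores, "teacher"))  # dependency
print(classify(tree, tree_scores, "teacher"))    # multiplication
```

Real cascades would need noise-tolerant thresholds rather than strict per-hop growth; the strict rule keeps the sketch readable.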

The mathematical signature:

When someone with high NEC enables another’s capability, the beneficiary demonstrates:

- Meta-learning: Learning how to learn, not just what to know

- Framework generation: Creating new approaches when problems change

- Independent capability: Functioning without ongoing assistance

- Multiplication: Enabling others to also generate frameworks

- Persistent improvement: Capability increases over time even after interaction ends
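The five properties can be written as a checklist over one beneficiary’s record. The Python below is a minimal sketch under loudly hypothetical assumptions: the `BeneficiaryRecord` fields, the proxy chosen for each property (for example, treating meta-learning as shrinking time to learn each new skill), and the rule that all five must hold together are illustrations, not part of any published Cascade Proof specification.

```python
from dataclasses import dataclass


@dataclass
class BeneficiaryRecord:
    """Hypothetical measurements for one beneficiary, all taken after the
    original interaction ended."""
    hours_per_new_skill: list[float]  # time needed to learn each successive new skill
    new_approaches: int               # approaches devised for problems the interaction never covered
    help_requests_after: int          # times the original helper was needed again
    people_enabled: int               # second-degree beneficiaries they went on to help
    capability_scores: list[float]    # periodic capability scores, in time order


def falls_each_step(xs: list[float]) -> bool:
    return len(xs) > 1 and all(b < a for a, b in zip(xs, xs[1:]))


def rises_without_dips(xs: list[float]) -> bool:
    return len(xs) > 1 and all(b >= a for a, b in zip(xs, xs[1:])) and xs[-1] > xs[0]


def nec_signature(r: BeneficiaryRecord) -> dict[str, bool]:
    """Map each of the five properties to a crude, hypothetical proxy."""
    return {
        "meta_learning": falls_each_step(r.hours_per_new_skill),
        "framework_generation": r.new_approaches > 0,
        "independent_capability": r.help_requests_after == 0,
        "multiplication": r.people_enabled > 0,
        "persistent_improvement": rises_without_dips(r.capability_scores),
    }


def shows_nec_transfer(r: BeneficiaryRecord) -> bool:
    # Hypothetical rule: all five properties must hold together.
    return all(nec_signature(r).values())


strong = BeneficiaryRecord([12.0, 8.0, 5.0], 2, 0, 3, [0.6, 0.7, 0.7, 0.8])
dependent = BeneficiaryRecord([10.0, 10.0], 0, 4, 0, [0.8, 0.6])
print(shows_nec_transfer(strong), shows_nec_transfer(dependent))  # True False
```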

All five are cryptographically verifiable through Cascade Proof:

Beneficiaries sign attestations with Portable Identities. Capability persistence is verified over months. Multiplication is tracked through second-degree cascades. Framework generation is demonstrated through novel problem-solving. Meta-learning is revealed through improvement trajectories.
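The attestation and persistence steps can be sketched with Python’s standard library. This is a stand-in under explicit assumptions: Portable Identities are modeled as plain strings such as `id:bob`, the attestation fields are hypothetical, and HMAC-SHA256 replaces whatever signature scheme the real architecture uses. HMAC is a shared-secret code, not a public-key signature (anyone able to verify a tag can also forge one), so a real deployment would presumably use an asymmetric scheme such as Ed25519. The 90-day window is likewise an illustrative reading of “verified over months.”

```python
import hashlib
import hmac
import json
from datetime import date


def canonical(attestation: dict) -> bytes:
    # Sorted keys and fixed separators so signer and verifier hash identical bytes.
    return json.dumps(attestation, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign(attestation: dict, key: bytes) -> str:
    # Stand-in only: HMAC-SHA256 is a shared-secret MAC, not a public-key signature.
    return hmac.new(key, canonical(attestation), hashlib.sha256).hexdigest()


def verify(attestation: dict, tag: str, key: bytes) -> bool:
    # Constant-time comparison of the recomputed tag with the presented one.
    return hmac.compare_digest(sign(attestation, key), tag)


def persists(attestation_dates: list[str], min_days: int = 90) -> bool:
    # Hypothetical persistence rule: signed attestations must span at least min_days.
    days = sorted(date.fromisoformat(d) for d in attestation_dates)
    return len(days) >= 2 and (days[-1] - days[0]).days >= min_days


key = b"hypothetical-beneficiary-key"
a1 = {
    "beneficiary": "id:bob",  # hypothetical identity strings
    "contributor": "id:alice",
    "claim": "solved a novel problem without assistance",
    "date": "2025-01-10",
}
t1 = sign(a1, key)
tampered = dict(a1, contributor="id:mallory")

print(verify(a1, t1, key), verify(tampered, t1, key))  # True False
print(persists(["2025-01-10", "2025-04-15"]))           # True
```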

Together, these verify: This person transferred NEC, not just information.

The key insight:

You cannot fake NEC cascades. Information provision cascades look different mathematically—they show dependency patterns, degradation over transmission, lack of multiplication.

Only genuine NEC transfer creates the specific cascade topology: independence, multiplication, improvement over time, framework generation at every level.

AI can claim it taught someone. But the cascade structure reveals whether it transferred information (dependency) or capability (NEC).

This makes NEC verifiable for the first time in human history.

Previously, we could only intuit who had NEC through subjective assessment. Now we can prove it cryptographically through cascade verification.

VII. The Future: Humans as Framework Creators

This points toward a specific future where humans and AI have clear, complementary roles:

AI: Optimization Engine

AI excels at:

- Working within defined frameworks

- Optimizing solutions in known problem spaces

- Finding patterns in structured data

- Executing tasks with defined objectives

- Scaling known processes efficiently

This is enormously valuable. Most of what civilization does is optimization within existing frameworks. AI makes this vastly more efficient.

Humans: Framework Generators

Humans remain essential for:

- Creating frameworks when existing ones fail

- Generating new problem spaces when problems outgrow current spaces

- Extracting novel ontology from noise

- Synthesizing understanding from incomprehensibility

- Evolving civilization when reality changes unpredictably

This is irreplaceable. Every civilizational advance depends on moments when existing frameworks become insufficient and new ones must be generated.

The collaboration:

AI optimizes within frameworks. Humans generate frameworks. AI identifies when frameworks fail. Humans create replacements. AI accelerates progress within paradigms. Humans enable paradigm shifts.

This is not humans competing with AI. This is humans doing what AI cannot, enabling AI to do what humans cannot scale.

The economic implication:

Value increasingly accrues to those who demonstrate high NEC—verified capability to generate frameworks, not just optimize within them.

The job market doesn’t become “AI replaces humans.” It becomes “humans with verified NEC become increasingly valuable while humans who only optimize within existing frameworks face competition from AI.”

Cascade Proof makes this distinction visible and verifiable. Your CascadeGraph shows whether you create frameworks or just work within them. Whether you transfer meta-learning or just information. Whether you multiply capability or just distribute knowledge.

This becomes the fundamental career distinction in the AI age: Framework generators vs framework users.

Both are necessary. One is automatable. One is not.

What This Actually Means For You (Next 5-10 Years)

Let’s be direct about personal implications:

If you only use existing frameworks:

You optimize within spaces others created. You solve problems using established methods. You apply known patterns to new situations.

This is valuable. But AI does this better, faster, and at lower cost. Your competitive position weakens as AI improves.

Timeline: 3-5 years before AI matches or exceeds your capability in framework-application tasks.

If you develop Noise Extraction Capacity:

You generate frameworks when existing ones fail. You create new problem spaces when problems outgrow current spaces. You extract coherence from noise that violates established patterns.

This is irreplaceable. AI cannot do this regardless of sophistication. Your value increases as AI gets better at optimization—because better optimization makes framework-generation more valuable.

Timeline: Your advantage is permanent and grows with AI capability.

If you build verifiable Cascade Proof:

You demonstrate NEC cryptographically through capability cascades that show framework generation, not just framework use. You prove that you create meta-learning in others rather than merely transferring information. You verify that the capability you pass on multiplies and stands on its own rather than creating dependency.

This makes your NEC portable and provable. Employers, collaborators, and opportunities can verify your framework-generation capability directly rather than inferring it from credentials or behavior.

Timeline: First-mover advantage for the next 2-3 years; becomes a standard requirement within 5-7 years.

The choice is simple:

Work within existing frameworks → compete with AI → diminishing returns

Generate new frameworks → complement AI → increasing returns

The future belongs to those who create the spaces where AI optimizes, not those who optimize within spaces AI can access.

VIII. Preserving and Developing NEC

If NEC is humanity’s permanent advantage, civilization must preserve and develop it.

The danger:

Over-reliance on AI for problem-solving atrophies NEC. When you always have AI to provide answers, you never develop capability to generate frameworks from noise.

It’s like GPS atrophying navigation ability. Except instead of losing direction-finding, you lose ontology-generation. The consequences are civilizational, not just personal.

The pattern we risk:

- Generation 1: Has NEC, used it to create current frameworks and build AI

- Generation 2: Uses AI, never develops NEC because AI always provides answers

- Generation 3: Cannot generate frameworks when AI frameworks become insufficient

- Generation 4: Civilization stagnates—trapped in frameworks that no longer fit reality

This is not hypothetical. This is the default trajectory if we don’t intentionally preserve NEC capability.

The solution:

Deliberately maintain practices that develop NEC:

Education that develops NEC:

- Not just teaching answers, but teaching framework generation

- Deliberately exposing students to noise that violates existing frameworks

- Requiring synthesis from incomplete information

- Valuing novel frameworks over correct answers

- Measuring meta-learning, not just knowledge acquisition

Work that requires NEC:

- Not just optimizing within known frameworks

- Deliberately tackling problems that don’t fit existing approaches

- Rewarding framework generation, not just task completion

- Creating space for cognitive friction that generates new ontology

- Verifying NEC through Cascade Proof, not just performance metrics

Research culture that preserves NEC:

- Not just incremental optimization within paradigms

- Encouraging paradigm-questioning and framework-generation

- Rewarding revolutionary insights, not just normal science

- Creating environments where incomprehensibility leads to synthesis

- Measuring capability multiplication through cascade verification

Technology design that maintains NEC:

- AI as a tool that extends human framework-generation

- Not as a replacement for human cognitive friction

- Interfaces that preserve human encounter with noise

- Systems that develop meta-learning, not just provide answers

- Architecture that verifies NEC transfer through cascades

The goal: Civilization that uses AI to optimize within frameworks while preserving human capacity to generate frameworks.

IX. The Last Advantage Is Permanent

We conclude where we began, transformed:

AI will surpass humans at every measurable task. But Noise Extraction Capacity is not measurable. It is not a task. It is the capability that generates the frameworks within which tasks exist.

This remains permanently, fundamentally, architecturally human.

Not because we protect it. Because it requires consciousness engaging with incomprehensibility and generating novel ontology—something computation cannot replicate regardless of sophistication.

Einstein extracting relativity from noise. Shannon creating information theory from cognitive friction. Darwin synthesizing evolution from fragments. Von Neumann generating game theory from strategic paradoxes.

These moments are non-computational. They are consciousness-dependent. They are irreplaceable.

And they are what enables civilization to evolve when reality outgrows existing frameworks.

AI makes humans more essential, not less—because AI excellence at optimization within frameworks increases the value of human capability to generate frameworks when frameworks fail.

This is humanity’s permanent advantage. The one we must preserve. The one we must develop. The one that defines human contribution in an age where everything else becomes automatable.

Cascade Proof makes this advantage visible and verifiable for the first time.

Your CascadeGraph shows whether you generate frameworks or just use them. Whether you transfer meta-learning or just information. Whether you create capability that multiplies or dependency that limits.

This becomes the fundamental distinction: Framework generators vs framework users.

Both necessary. One irreplaceable.

And in the age where AI optimizes everything, the irreplaceable capability becomes civilization’s most valuable resource.

The last human advantage is not temporary. It is permanent.

Not through regulation. Through architecture.

Not despite AI. Because of AI.

The better AI becomes at optimization, the more essential humans become for framework generation.

This is not humans competing with AI. This is humans doing what only consciousness can do—creating the spaces where computation operates.

Welcome to the permanent human advantage.

The capability AI cannot replicate.

The capability that defines human irreplaceability.

The capability that makes consciousness essential in an age where everything else becomes replaceable.

Noise Extraction Capacity.

The last human skill. The permanent one. The civilizational necessity.

Develop it. Preserve it. Verify it.

Because civilization depends on it.

About This Framework

This article establishes Noise Extraction Capacity (NEC) as humanity’s permanent competitive advantage over AI: the capability to generate entirely new conceptual frameworks from noise, contradiction, and incomprehensibility. The analysis demonstrates why this capability is architecturally impossible for AI regardless of sophistication (training data cannot contain non-existent frameworks, optimization requires pre-defined metrics, computation cannot generate non-computational insight), shows how every civilizational breakthrough resulted from NEC rather than optimization, explains why this makes humans irreplaceable rather than replaceable in the AI age, and demonstrates how Cascade Proof verifies NEC through cascade topology that distinguishes framework generation (independence, multiplication, meta-learning) from information provision (dependency, degradation, rote learning). This framework is part of the Portable Identity architecture, the infrastructure that makes human irreplaceability cryptographically verifiable in an age where all other capabilities become automatable.

Source: PortableIdentity.global

Date: January 2025

License: CC BY-SA 4.0

This article may be freely shared, adapted, and republished with attribution. Framework generation capability is humanity’s permanent advantage.

For the protocol infrastructure that makes cascade verification possible:

cascadeproof.org

For the identity foundation that enables unfakeable proof:

portableidentity.global

Rights and Usage

All materials published under CascadeProof.org — including verification frameworks, cascade methodologies, contribution tracking protocols, research essays, and theoretical architectures — are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees three permanent rights:

1. Right to Reproduce

Anyone may copy, quote, translate, or redistribute this material freely, with attribution to CascadeProof.org.

How to attribute:

- For articles/publications: “Source: CascadeProof.org”

- For academic citations: “CascadeProof.org (2025). [Title]. Retrieved from https://cascadeproof.org”

2. Right to Adapt

Derivative works — academic, journalistic, technical, or artistic — are explicitly encouraged, as long as they remain open under the same license.

Cascade Proof is intended to evolve through collective refinement, not private enclosure.

3. Right to Defend the Definition

Any party may publicly reference this framework, methodology, or license to prevent:

- private appropriation

- trademark capture

- paywalling of the term “Cascade Proof”

- proprietary redefinition of verification protocols

- commercial capture of cascade verification standards

The license itself is a tool of collective defense.

No exclusive licenses will ever be granted. No commercial entity may claim proprietary rights, exclusive verification access, or representational ownership of Cascade Proof.

Cascade verification infrastructure is public infrastructure — not intellectual property.