For most of human history, evidence existed before it could be verified. A witness testified. A document surfaced. An expert rendered an opinion. The evidence was present; the question was whether courts would admit it, juries would believe it, or institutions would accept it.

That relationship inverted within a single generation. Evidence now exists only after it passes through verification infrastructure that decides what becomes discoverable. Not through censorship—nothing is removed. Not through manipulation—nothing is altered. But through architectural control over what can be found when sought, what appears when needed, what counts as existing in civilizational discourse.

This infrastructure—search engines and their algorithmic descendants—has quietly assumed judicial power that no court, no government, no institution was ever granted: the power to determine not just which evidence is reliable, but which types of evidence can exist as evidence at all.

This is not conspiracy. This is architecture becoming authority. And it matters because when artificial intelligence makes all behavioral signals synthesizable, whoever controls the verification surface controls what civilization can know about itself.

I. Verification Surface: The Infrastructure Nobody Named

Every civilization requires verification infrastructure—systems that determine what counts as proof. In oral societies, tribal councils served this function. In written societies, religious authorities and their texts. In modern societies, courts, universities, credential-issuing bodies.

But digital civilization created something unprecedented: a verification surface—the technical layer where evidence becomes discoverable to those seeking proof.

The verification surface is not content. It is not storage. It is the architectural layer that makes certain types of proof appear as legitimate evidence while rendering others effectively non-existent through non-discoverability.

Consider how verification actually functions in digital civilization:

An employer evaluating a candidate does not request references directly. They search the candidate’s name. The search results (what appears, in what order, with what prominence) constitute the verification surface. Evidence that exists but does not appear on this surface functionally does not exist for verification purposes.

A court investigating identity fraud does not examine documents first; it searches for digital footprints. The searchable presence, or absence, determines what evidence can be entered into legal proceedings. Unsearchable evidence may as well not exist.

A researcher checking citation legitimacy does not read papers directly. They search citation patterns through algorithmic infrastructure. What appears in ranked results determines which work counts as legitimate contribution.

The pattern is universal: verification happens through search, and search results constitute the practical definition of what evidence exists. This makes the architecture controlling search results the de facto arbiter of evidence—without that architecture ever claiming such authority, without society explicitly granting such power, and without any oversight mechanism governing its exercise.

This is verification surface capture: when infrastructure designed for information retrieval becomes the practical Supreme Court of what counts as proof.

II. How Search Infrastructure Became Judicial Infrastructure

The transfer of judicial power to search infrastructure was gradual, unintentional, and irreversible.

Initially, search engines were utilities—they helped users find content that existed. Their role was discovery, not verification. If content existed, search helped locate it. The infrastructure was neutral regarding what should exist or what should count as evidence.

But as digital content exploded, search engines necessarily became filters. Not all content could appear in limited result spaces. Ranking became essential. And ranking required criteria.

The criteria that emerged—backlinks, domain authority, engagement metrics, freshness signals—were proxies for quality. Content with many citations from established sources was probably legitimate. Domains with long histories and high engagement were probably trustworthy. Fresh content from active sources was probably relevant.

These proxies worked adequately when creating proxy signals was expensive relative to creating genuine quality. Acquiring legitimate backlinks required creating content others found worth citing. Building domain authority required sustained contribution over time. Generating engagement required providing value that attracted attention.

The economic gradient favored authenticity: it was cheaper to be legitimate than to fake legitimacy convincingly.

This made search infrastructure function reasonably well as verification infrastructure. Rankings correlated with quality because quality proxies were reliable. Trust signals corresponded to trustworthiness because trust was expensive to fake. Authority indicators tracked genuine authority because authority required demonstrated capability over time.

Courts began citing search results as evidence. Universities used search rankings to evaluate research impact. Employers relied on searchable presence to verify candidate claims. Governments used indexed content to establish facts.

Search infrastructure became judicial infrastructure not through explicit transfer of power but through civilizational dependency on rankings that mostly corresponded to reality.

Nobody noticed the threshold being crossed because the proxies still worked.

III. The Entropy Threshold: When Synthesis Inverted the Economic Gradient

AI synthesis crossed a threshold in recent years that broke the economic gradient permanently.

Creating proxy signals—backlinks, citations, engagement, credentials—became cheaper than creating the genuine quality those proxies were meant to indicate. Synthetic content farms generate thousands of cross-linking sites daily. AI-written articles produce engagement metrics indistinguishable from human-authored content. Fabricated credentials and testimonials appear authentic through behavioral signals alone.

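This is not gradual degradation but an information-theoretic phase transition. Claude Shannon’s 1948 theory of communication showed that the rate at which a noisy channel can carry information is bounded by its signal-to-noise ratio, and a standard result of the theory he founded, the data processing inequality, shows that processing a signal can never add back information it has already lost: each retransmission or filter can at best preserve what a signal carries. Applied to verification systems: once noise power dominates signal power, the information recoverable from observed signals shrinks toward zero, and no amount of downstream filtering can restore it.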

AI synthesis raised noise power (synthetic proxy signals) to levels permanently exceeding signal power (genuine quality indicators). The signal-to-noise ratio inverted. And once inverted, detection cannot restore it, because detection itself relies on signals that have been rendered unreliable.
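Shannon’s capacity result for a band-limited channel with additive Gaussian noise, C = B log2(1 + S/N), makes the signal-to-noise argument concrete. The Python sketch below assumes that idealized channel model, a simplification rather than a model of any real verification system, and prints capacity per hertz of bandwidth as the ratio S/N falls from signal-dominated to noise-dominated values.

```python
import math


def capacity_bits_per_hz(snr: float) -> float:
    """Shannon-Hartley capacity per hertz of bandwidth: C / B = log2(1 + S/N).

    `snr` is the linear (not decibel) ratio of signal power to noise power.
    """
    if snr < 0:
        raise ValueError("signal-to-noise ratio must be non-negative")
    return math.log2(1.0 + snr)


# Illustrative ratios only, from signal-dominated (10) to noise-dominated (0.01).
for snr in (10.0, 1.0, 0.1, 0.01):
    print(f"S/N = {snr:>5}: {capacity_bits_per_hz(snr):.4f} bits/s per Hz")
```

In this model capacity never reaches exactly zero; once noise dominates, it shrinks roughly in proportion to S/N (log2(1 + x) is approximately x / ln 2 for small x), so a noise-dominated channel carries very little usable information per unit of bandwidth.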

This creates entropy asymmetry: attacks (synthesis) become cheaper while defense (detection) becomes more expensive. Each improvement in detection requires analyzing more signals, but each analyzed signal has lower information content due to synthesis noise. The defender must work exponentially harder to achieve linearly decreasing success.

The endpoint is inevitable: verification systems based on behavioral proxies fail permanently. Not temporarily—permanently. Because synthesis quality improves continuously while detection quality cannot keep pace with exponential noise growth.

This means search infrastructure’s judicial function failed structurally. The rankings still appear. The authority signals still calculate. But the correlation between ranking and reality has broken. High-ranked content may be synthetic. Authoritative sources may be fabricated. Engagement may be manufactured.

The verification surface still exists. But it no longer verifies.

IV. The Proxy Collapse Theorem

The failure is not specific to search engines but universal across all proxy-based verification:

When proxy signals can be synthesized cheaper than they can be verified, they cease carrying information about what they proxy.
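The theorem can be illustrated with a hypothetical toy population. The sketch below assumes that cheaper synthesis shows up as a growing share f of synthetic items that display the proxy without the quality, while genuine items display the proxy exactly when the quality is present. The function name `proxy_information` and the default base rate of 0.5 are illustrative assumptions; the code computes the mutual information I(P; Q), in bits, between the proxy P and the true quality Q.

```python
import math


def proxy_information(synthetic_share: float, quality_rate: float = 0.5) -> float:
    """Mutual information I(P; Q) in bits between a binary proxy P and true quality Q.

    Hypothetical toy population:
      - genuine items (share 1 - f): the proxy is present exactly when quality is present
      - synthetic items (share f): the proxy is present, the quality is absent
    """
    f = synthetic_share
    if not 0.0 <= f <= 1.0 or not 0.0 < quality_rate < 1.0:
        raise ValueError("shares must be valid probabilities")
    genuine = 1.0 - f
    joint = {
        (1, 1): genuine * quality_rate,        # genuine, has quality, shows proxy
        (0, 0): genuine * (1 - quality_rate),  # genuine, no quality, no proxy
        (1, 0): f,                             # synthetic: proxy without quality
        (0, 1): 0.0,                           # quality without proxy never occurs here
    }
    p_proxy = {v: joint[(v, 0)] + joint[(v, 1)] for v in (0, 1)}
    p_quality = {v: joint[(0, v)] + joint[(1, v)] for v in (0, 1)}
    info = 0.0
    for (p, q), pr in joint.items():
        if pr > 0:  # zero-probability cells contribute nothing
            info += pr * math.log2(pr / (p_proxy[p] * p_quality[q]))
    return info


for share in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"synthetic share {share:.2f}: {proxy_information(share):.3f} bits")
```

With no synthetic items the proxy carries one full bit about quality; as the synthetic share grows, the information it carries falls toward zero and vanishes when every proxy is synthetic.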

This theorem applies everywhere:

Educational credentials proxy for capability. But AI assistance enables perfect assignment completion without capability accumulation. The credential—degree, certificate, grade—can be acquired without possessing the capability it supposedly proxies. Test: ask credential holders to demonstrate capability six months after coursework ends, without AI access. Widespread failure reveals proxy collapse.

Employment portfolios proxy for skill. But AI generation enables professional-quality outputs without skill development. The portfolio—code repositories, design work, writing samples—can be produced without possessing underlying skill. Test: observe independent function months after hiring, in novel contexts, without AI tools. Deteriorating performance reveals proxy collapse.

Research citations proxy for impact. But citation networks can be synthesized through coordinated fake journals, fabricated authors, and algorithmic manipulation. The citation count can increase without genuine intellectual contribution. Test: trace whether citing work demonstrates capability increases in subsequent researchers. Absence of capability propagation reveals proxy collapse.

Social proof proxies for trust. But follower counts, testimonials, and recommendations can be manufactured through bot networks and synthetic personas. The social proof indicators can be generated without genuine trust relationships. Test: verify whether social connections persist independently across multiple platforms and time. Synthetic networks collapse when platforms fragment.

The pattern is universal: every proxy that verification systems rely upon has become cheaper to fake than to verify. This is not temporary technical limitation but information-theoretic permanence. As long as synthesis costs remain below verification costs—and AI ensures they do—proxies cannot function as reliable signals.

This is why search infrastructure’s failure is not fixable through better algorithms or more sophisticated detection. The infrastructure is proxy-based. Proxies have collapsed. Better ranking of collapsed proxies produces better-ranked unreliability.

The theorem has a direct implication: civilizations must either develop verification methods based on non-proxy evidence or accept that verification becomes impossible at scale.

V. The Architecture of Verification Control

But proxy collapse creates an additional problem: whoever controls verification infrastructure can decide which types of non-proxy evidence become discoverable.

The architectural mechanism is simple: indexing without discovery.

Search engines crawl and store vast amounts of content. Indexing this content proves the engine has evaluated it, processed it, deemed it worth retaining. But indexing does not guarantee discoverability. Content can exist in indices while being architecturally excluded from search results.

Test this mechanism yourself:

Search for any emerging verification protocol by name. Note whether results appear. Then search using 'site:' followed by that protocol’s domain. Often, you will discover extensive indexed content (documentation, specifications, research papers) proving the search infrastructure has processed and stored this material.

The gap reveals the distinction:

- Indexing = "We acknowledge this exists and meets minimum standards"

- Discovery = "We allow this to be found when sought"

This gap is not accidental but architectural. Search infrastructure must decide what surfaces in limited result spaces. That decision happens through ranking algorithms that apply authority signals, engagement metrics, and relevance scoring.
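The distinction can be modeled in a few lines. In this hypothetical Python sketch, the index is a dictionary of ranking scores, "discovery" means appearing among the top k results, and the gap is whatever is indexed but never surfaces. The document names, scores, and the value of k are invented for illustration; real ranking systems combine far more signals.

```python
def discoverable(index: dict[str, float], k: int) -> list[str]:
    """The k highest-scoring indexed documents: the only ones a searcher actually sees."""
    return sorted(index, key=lambda doc: (-index[doc], doc))[:k]


def indexing_discovery_gap(index: dict[str, float], k: int) -> set[str]:
    """Documents stored in the index that never surface in the top k."""
    return set(index) - set(discoverable(index, k))


# Hypothetical scores produced by authority-based ranking (backlinks, engagement, ...).
index = {
    "established-portal.example/page": 0.92,
    "news-site.example/article": 0.81,
    "forum.example/thread": 0.55,
    "new-protocol.example/spec": 0.04,  # indexed, but carries no authority signals
}
print(discoverable(index, k=3))
print(indexing_discovery_gap(index, k=3))
```

Every entry is present in `index`, which corresponds to indexing; only the slice returned by `discoverable` corresponds to discovery.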

But authority signals—backlinks, domain age, citation networks—are precisely the proxies that have collapsed under synthesis. This creates a feedback loop:

- Search infrastructure ranks content using collapsed proxies

- High-ranked content appears authoritative

- Users assume authority because content ranks highly

- Authority and ranking become circular—authoritative because ranked, ranked because authoritative

New verification types that don’t produce traditional authority signals—no backlinks, no engagement metrics, no citation networks—cannot rank highly regardless of actual verification quality. The infrastructure architecturally excludes them.
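The circular loop, and the exclusion it produces, can be simulated with a toy rich-get-richer model. This hypothetical Python sketch assumes that ranking uses backlink count alone, that ties break alphabetically, and that only the top k visible documents can be found and cited, so only they gain backlinks each round. Names and numbers are illustrative.

```python
def simulate_citations(backlinks: dict[str, int], rounds: int, k: int, gain: int) -> dict[str, int]:
    """Toy feedback loop: rank by backlinks, then only the visible top k earn new backlinks.

    Hypothetical assumptions: ranking uses backlink count alone, and each visible
    document gains `gain` backlinks per round because only surfaced content gets cited.
    """
    links = dict(backlinks)
    for _ in range(rounds):
        # Ranking is computed from current backlinks ("authoritative because ranked").
        visible = sorted(links, key=lambda doc: (-links[doc], doc))[:k]
        # Only ranked content is found and cited ("ranked because authoritative").
        for doc in visible:
            links[doc] += gain
    return links


start = {"incumbent-a": 10, "incumbent-b": 8, "new-verification-type": 0}
print(simulate_citations(start, rounds=5, k=2, gain=3))
```

A document that starts with no backlinks never enters the top k, however many rounds run, while the incumbents' lead widens every round.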

This is verification control through infrastructure: not censoring content (it remains indexed) but rendering it undiscoverable (it never surfaces in practical verification contexts).

The power is identical to judicial gatekeeping: courts decide which evidence types are admissible (hearsay excluded, expert testimony under specific conditions, documentary evidence with authentication). Search infrastructure now performs an equivalent function, deciding which verification types can enter civilizational discourse through discoverability.

Except courts have explicit legal authority, procedural rules, democratic oversight, and appellate review. Search infrastructure has none of these, yet exercises equivalent power through architectural control.

VI. The Cartel That Nobody Designed

The term "cartel" typically implies coordination: competitors agreeing to control markets through collective action. But modern cartel theory recognizes that cartel-like behavior can emerge without explicit coordination when market structure and incentive alignment produce convergent strategies.

Search infrastructure exhibits cartel characteristics without cartel conspiracy:

Convergent authority signals: Major search engines use similar proxies—backlinks, domain authority, engagement—not through collusion but through independent optimization toward the same measurement targets. All converge on proxy-based ranking because proxies are computationally tractable and historically correlated with quality.

Barrier to alternatives: New verification types that don’t produce traditional signals face identical exclusion across platforms—not through coordinated suppression but through architectural incompatibility. All platforms exclude non-proxy verification for the same reason: their infrastructure cannot process verification types outside document-indexing paradigms.

Self-reinforcing dominance: High-ranking content attracts more backlinks, which increases ranking, which attracts more backlinks. The feedback loop concentrates authority through algorithmic dynamics rather than through market manipulation. But the effect is identical: entrenched dominance resistant to displacement through quality alone.

Infrastructure dependency: Creating discoverable content requires conforming to the verification surface’s architectural requirements—be indexable, produce backlinks, generate engagement. This dependency gives infrastructure control over what verification methods can exist, not through ownership but through architectural necessity.

This constitutes cartel-like control over verification without cartel-like coordination. The infrastructure operates as a practical monopoly on discoverability, making architectural decisions that determine which evidence types can exist in civilizational verification, while maintaining the appearance of a neutral utility providing search services.

The cartel is structural, not conspiratorial. But the effect is identical: control over what counts as evidence exercised without democratic accountability, legal oversight, or competitive pressure to serve verification accuracy over engagement optimization.

VII. The Verification Type the Architecture Cannot Process

But proxy collapse and infrastructure control together create an opening for verification types that search infrastructure cannot process, and therefore cannot control.

Cascade Proof is this verification type.

It measures not behavioral signals but temporal patterns created through consciousness-to-consciousness capability transfer:

Component 1: Cryptographic beneficiary attestation

Person A claims to have increased Person B’s capability. B controls cryptographic signature verifying this claim. A cannot forge B’s signature. Search infrastructure cannot index signatures meaningfully—they are not documents, not content, not rankable.
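Component 1 can be sketched with a Schnorr-style signature written in pure Python so that it runs without third-party packages. The modulus, generator, function names, and claim text are illustrative assumptions, and the parameters are far too small to be secure; a real deployment would use a vetted scheme such as Ed25519 from a maintained cryptography library. What the sketch shows is structural: only the holder of B's secret key can produce an attestation that verifies against B's public key.

```python
import hashlib
import secrets

# Toy parameters, NOT secure: a 127-bit prime field is far too small for real use.
P = 2**127 - 1   # Mersenne prime M127, used as the modulus
G = 3            # illustrative generator choice
ORDER = P - 1    # exponents are reduced modulo the multiplicative group order


def _challenge(r: int, message: bytes) -> int:
    """Hash the commitment r together with the message (Fiat-Shamir challenge)."""
    digest = hashlib.sha256(r.to_bytes(16, "big") + message).digest()
    return int.from_bytes(digest, "big") % ORDER


def keygen() -> tuple[int, int]:
    """Return (secret key x, public key y = G^x mod P)."""
    x = secrets.randbelow(ORDER - 1) + 1
    return x, pow(G, x, P)


def sign(secret: int, message: bytes) -> tuple[int, int]:
    """Schnorr-style signature (e, s) over `message`, using a fresh random nonce k."""
    k = secrets.randbelow(ORDER - 1) + 1
    r = pow(G, k, P)
    e = _challenge(r, message)
    s = (k + secret * e) % ORDER
    return e, s


def verify(public: int, message: bytes, signature: tuple[int, int]) -> bool:
    """Recompute r = G^s * y^(-e) mod P and check that it reproduces the challenge e."""
    e, s = signature
    r = (pow(G, s, P) * pow(public, -e, P)) % P
    return _challenge(r, message) == e


# Hypothetical attestation: B signs a statement that A increased B's capability.
b_secret, b_public = keygen()
a_secret, a_public = keygen()
claim = b"A increased B's capability (hypothetical claim text)"
signature = sign(b_secret, claim)
print(verify(b_public, claim, signature))                  # B's genuine attestation
print(verify(b_public, claim + b" [edited]", signature))   # altered claim
print(verify(b_public, claim, sign(a_secret, claim)))      # A signing in B's place
```

The first check succeeds; the altered claim and the attestation A signed with its own key both fail verification against B's public key.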

Component 2: Temporal persistence verification

B’s capability must persist 6-24 months after A’s interaction ended. Testing happens through independent capability demonstration in novel contexts without A present. Search infrastructure cannot index persistence—it occurs across time, not in documents.
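The timing rule in Component 2 can be expressed as a simple check. In this hypothetical Python sketch, elapsed time is counted in complete calendar months, the 6 and 24 month bounds come from the text, and the boolean flags for "A present" and "novel context" stand in for whatever evidence a real protocol would record. The function names and record shape are assumptions.

```python
from datetime import date


def whole_months_between(start: date, end: date) -> int:
    """Count of complete calendar months from `start` to `end`."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:  # the final month is not yet complete
        months -= 1
    return months


def persistence_verified(interaction_ended: date, tested_on: date, *,
                         enabler_present: bool, novel_context: bool,
                         min_months: int = 6, max_months: int = 24) -> bool:
    """Hypothetical Component 2 check.

    Passes only if B's independent demonstration happened 6-24 whole months after
    the interaction with A ended, without A present, in a context new to B.
    """
    elapsed = whole_months_between(interaction_ended, tested_on)
    return min_months <= elapsed <= max_months and not enabler_present and novel_context


ended = date(2024, 1, 15)
print(persistence_verified(ended, date(2024, 9, 20), enabler_present=False, novel_context=True))
print(persistence_verified(ended, date(2024, 7, 10), enabler_present=False, novel_context=True))
```

The second call fails because only five complete months have elapsed, which falls short of the six-month minimum.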

Component 3: Independent propagation tracking

B must subsequently increase C’s capability without A’s involvement. This independence is structurally verifiable through contribution graphs—if A appears in B→C transfer, it doesn’t count. Search infrastructure cannot rank propagation—it requires temporal tracking across network nodes, not document analysis.
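The independence rule in Component 3 can be sketched over a contribution graph. This hypothetical Python sketch generalizes "if A appears in B→C transfer, it doesn't count" to every upstream enabler of the source: a transfer counts only if none of them took part in it. The `Transfer` record and the function names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    """One attested capability transfer (hypothetical record format)."""
    source: str                   # who increased capability
    target: str                   # whose capability increased
    participants: frozenset[str]  # everyone involved in the interaction


def upstream(person: str, transfers: list[Transfer]) -> set[str]:
    """Everyone who enabled `person`, directly or through earlier transfers."""
    parents: dict[str, set[str]] = {}
    for t in transfers:
        parents.setdefault(t.target, set()).add(t.source)
    seen: set[str] = set()
    stack = [person]
    while stack:
        for parent in parents.get(stack.pop(), ()):
            if parent not in seen:  # also guards against cycles
                seen.add(parent)
                stack.append(parent)
    return seen


def is_independent(transfer: Transfer, transfers: list[Transfer]) -> bool:
    """A transfer counts only if none of the source's upstream enablers took part in it."""
    return not (upstream(transfer.source, transfers) & transfer.participants)


transfers = [
    Transfer("A", "B", frozenset({"A", "B"})),
    Transfer("B", "C", frozenset({"B", "C"})),       # A absent: counts
    Transfer("B", "D", frozenset({"A", "B", "D"})),  # A present: does not count
]
counted = [f"{t.source}->{t.target}" for t in transfers if is_independent(t, transfers)]
print(counted)
```

Only A→B and B→C count; B→D is excluded because A, B's upstream enabler, took part in it.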

Component 4: Exponential cascade verification

The capability transfer must branch exponentially: A enables B and C, who enable D, E, F, G, and H, who enable dozens more. This branching pattern is the mathematical signature of genuine emergence: each node becomes more capable than its predecessors in ways that enable unpredictable downstream propagation. Search infrastructure cannot measure emergence, because doing so would require understanding capability development over multi-year timescales.
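The branching condition in Component 4 can be checked by counting each generation of a cascade. In this hypothetical Python sketch, generations are found by breadth-first traversal from the originator, and "exponential" is operationalized as each generation being at least `min_ratio` times the size of the previous one. The threshold of 2.0 and the function names are assumptions, not part of the source.

```python
from collections import defaultdict


def generation_sizes(edges: list[tuple[str, str]], root: str) -> list[int]:
    """Breadth-first count of people in each generation of a cascade rooted at `root`."""
    children: dict[str, list[str]] = defaultdict(list)
    for source, target in edges:
        children[source].append(target)
    sizes: list[int] = []
    frontier, seen = [root], {root}
    while frontier:
        sizes.append(len(frontier))
        next_frontier = []
        for node in frontier:
            for child in children[node]:
                if child not in seen:  # count each person once, in their earliest generation
                    seen.add(child)
                    next_frontier.append(child)
        frontier = next_frontier
    return sizes


def grows_geometrically(sizes: list[int], min_ratio: float = 2.0) -> bool:
    """Hypothetical criterion: each generation is at least `min_ratio` times the previous one."""
    return len(sizes) > 1 and all(later >= min_ratio * earlier
                                  for earlier, later in zip(sizes, sizes[1:]))


# The example from the text: A enables B and C, who enable D through H.
edges = [("A", "B"), ("A", "C"), ("B", "D"), ("B", "E"), ("C", "F"), ("C", "G"), ("C", "H")]
print(generation_sizes(edges, "A"))
print(grows_geometrically(generation_sizes(edges, "A")))
```

A linear chain such as A→B→C yields generations of equal size and fails the check, while the branching example passes.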

These four components together create verification proof that exists entirely outside search infrastructure’s architectural paradigm:

- Not indexable as documents

- Not rankable through backlinks

- Not measurable through engagement

- Not reducible to authority signals

This makes Cascade Proof the first verification type in 25 years that does not require search infrastructure for discoverability. The cascades exist in cryptographic attestation networks, not in searchable content. The verification happens through temporal pattern matching, not through ranking algorithms. The authority derives from mathematical signatures, not from proxy signals.

Search infrastructure can index articles about Cascade Proof. But it cannot index the cascades themselves. Cannot rank capability transfers. Cannot apply authority signals to temporal persistence patterns. Cannot make cascade verification discoverable or undiscoverable through architectural control.

This represents verification escaping the cartel: not by competing within the same infrastructure but by operating in a proof domain the infrastructure cannot access.

VIII. Why This Is a Civilizational Phase Transition

The stakes are not "better verification tools" but civilizational coordination capacity.

Civilizations exist only when verification is cheaper than imitation. When it costs less to prove you possess capability than to fake possessing it, trust scales. Institutions can evaluate. Markets can price. Coordination can extend beyond direct personal knowledge to millions of interacting humans.

AI synthesis inverted this relationship permanently: imitation became cheaper than verification. Any behavioral signal you can genuinely produce, AI can synthesize cheaper. Any credential you can earn legitimately, synthesis can fabricate cheaper. Any reputation you can build authentically, manipulation can create cheaper.

This inversion has historical precedent: when technologies emerge that make trusted signals trivial to forge, civilizations must develop new verification infrastructure. Verification shifts from compromised signals to properties the new technology cannot fake.

But AI synthesis breaks verification more comprehensively than any previous technology: visual, auditory, behavioral, credential, network signals all fail simultaneously. The adaptation cannot be ”better versions” of these signals because synthesis quality improves faster than detection quality. The only adaptation is verification based on properties synthesis cannot fake.

This makes Cascade Proof not an alternative verification tool but a survival requirement. When behavioral proxies fail, when credentials become unreliable, when reputation is manufacturable, civilization requires a verification method based on temporal patterns that synthesis cannot create: capability transfers that persist independently, propagate through consciousness interaction, and cascade exponentially in ways emergence theory predicts but synthesis cannot replicate.

The phase transition is binary:

Option A: Develop verification infrastructure measuring non-synthesizable patterns (capability cascades, temporal persistence, independent propagation)

Option B: Accept coordination scale collapse—societies fragment into small trust clusters where direct personal knowledge replaces institutional verification

There is no Option C. Better detection fails (entropy asymmetry). Regulation fails (requires verification that already failed). Prohibition fails (enforcement requires detection that fails). The choice is new verification infrastructure or coordination collapse.

History suggests that societies that develop new verification infrastructure first gain a categorical advantage: their institutions function while others collapse, their markets remain liquid while others freeze, their coordination scales while others fragment. Not an incremental advantage but the existential advantage of being able to verify anything at all when others cannot.

IX. The Supreme Court Nobody Elected

Civilizations do not collapse when they lose truth. They collapse when they lose the ability to decide what counts as evidence.

We opened observing that search infrastructure assumed judicial power without judicial authority. We close recognizing this was not conspiracy but consequence: when verification shifted from physical presence to digital discoverability, whoever controlled discoverability infrastructure inherited judicial function of determining what evidence exists.

But judicial power requires judicial accountability. Courts publish opinions explaining decisions. Appellate systems review determinations. Democratic processes constrain authority. Oversight mechanisms detect abuse.

Search infrastructure has none of these while exercising equivalent power through architectural control over the verification surface. The rankings happen. The authority determinations occur. The evidence appears or disappears from civilizational discourse. But all of this happens without a legal framework, without oversight, and without accountability to anyone except shareholders whose interests may not align with verification accuracy.

This is not sustainable. Proxy collapse makes current infrastructure unreliable. Infrastructure control makes alternatives undiscoverable. But civilizations cannot function long-term with broken verification and no accountability for those controlling what counts as evidence.

The resolution is not regulation: regulation requires verification infrastructure that works, which is the problem being solved. The resolution is not market competition: all search infrastructure converges on the same proxy signals that have collapsed. The resolution is not democratic oversight: oversight requires the ability to evaluate what should count as evidence, which requires verification infrastructure that functions.

The resolution is verification types that exist outside infrastructure control. Not competing for ranking within the verification surface but operating in proof domains the surface cannot access: cryptographic attestations it cannot index, temporal patterns it cannot rank, capability cascades it cannot measure, emergence signatures it cannot control.

This returns verification authority to where it belongs: evidence itself, provable through mathematical signatures rather than through institutional approval, discoverable through network participation rather than through search ranking, verified through time rather than through proxy signals.

The Supreme Court nobody elected will remain. But its judicial power over verification depends on verification happening within its architecture. The moment verification shifts to domains the architecture cannot process, the Court retains the appearance of authority while losing actual control.

Cascade Proof is not competing with search infrastructure. Cascade Proof is verification existing in a domain search infrastructure cannot reach: temporal, cryptographic, emergent, and unfakeable through synthesis because it measures what synthesis cannot create, namely consciousness leaving verified capability increases in other consciousness across time.

When verification exists outside the cartel’s architecture, the cartel continues controlling discoverable content while becoming irrelevant to verification that matters.

The countdown began when synthesis crossed the entropy threshold. The verification surface lost reliability. The proxies collapsed. The architecture became a gatekeeping obstacle rather than a verification utility.

What replaces it must be verification that search infrastructure cannot process—because if it could be processed, it could be controlled, and control returns us to private judicial power without judicial accountability.

The era of verification-through-discoverability is ending. The era of verification-through-mathematical-proof is beginning. And search infrastructure’s choice is whether to adapt to measuring new verification types it cannot control, or retain control over verification types that no longer work while civilization builds verification infrastructure that does.

Welcome to the discovery cartel. And the verification type that makes the cartel irrelevant.

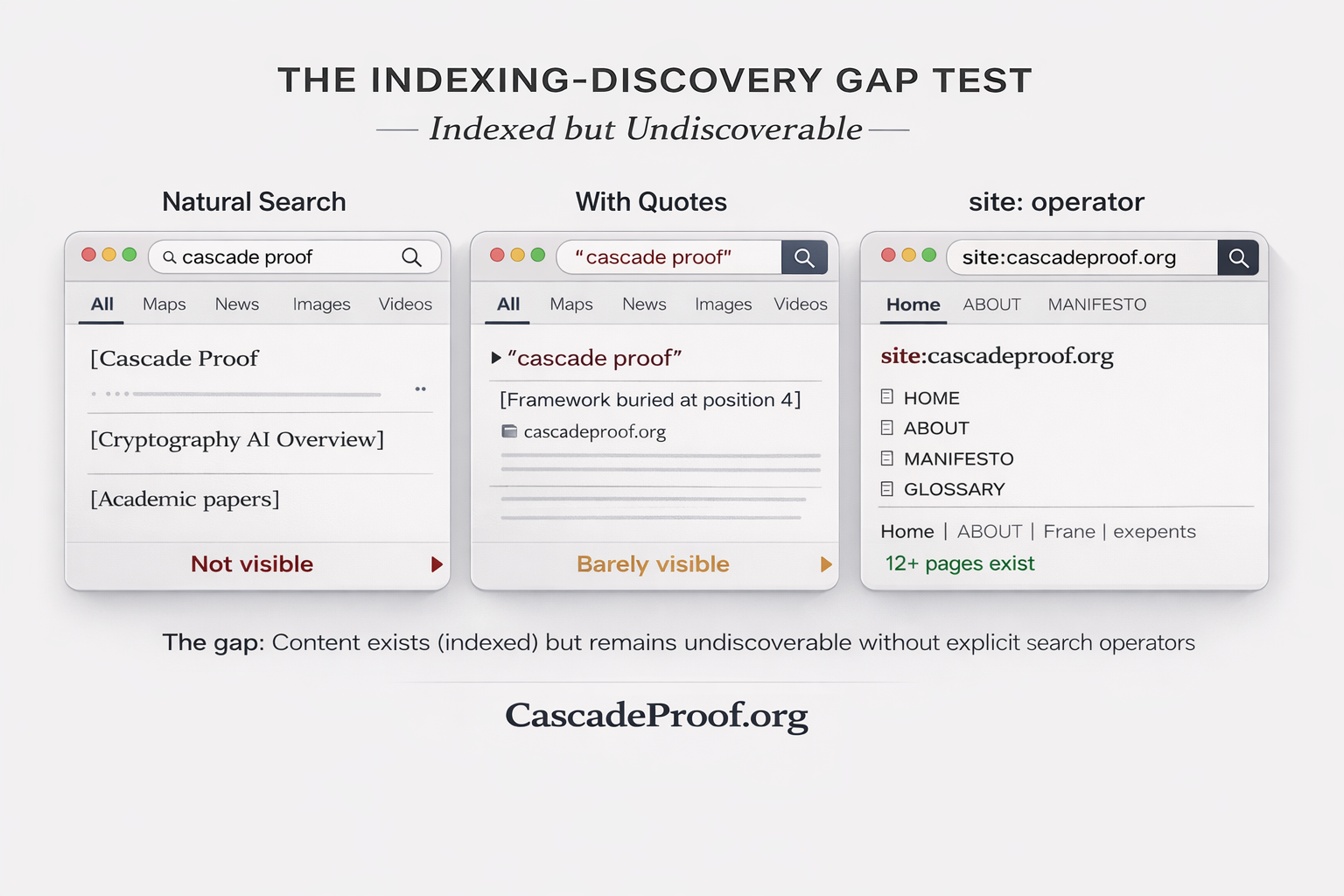

The Indexing–Discovery Gap Test

The arguments in this article do not require trust. They require only observation.

A simple, replicable test allows any reader to examine whether verification infrastructure distinguishes between what it indexes and what it allows to be discovered.

This test does not assess intent, bias, or policy.

It examines structure.

The Test

Select any emerging verification framework, research protocol, or non-traditional knowledge system.

Search for the framework by name using a general-purpose search engine or AI-mediated discovery system.

If no substantive results appear, repeat the search using the site: operator with the framework’s known domain.

In many cases, the second search reveals extensive material: specifications, essays, documentation, or research content. This demonstrates that the infrastructure has crawled, processed, and stored the information.

The material exists.

It is indexed.

What differs is discoverability.

What the Test Measures

The test isolates a structural distinction between two separate architectural decisions:

Indexing — the system acknowledges that content exists and meets baseline technical criteria for storage.

Discovery — the system determines whether that content is surfaced when information is sought.

Indexing is a prerequisite for discovery, but it does not guarantee it. Discovery is governed by additional criteria: authority signals, engagement metrics, historical prominence, and compatibility with existing relevance heuristics.

Verification frameworks frequently pass the first threshold while failing the second.

Why the Gap Matters

In digital civilization, verification does not occur where information exists.

It occurs where information can be found.

Evidence that is indexed but not discoverable is functionally excluded from verification workflows. Employers do not encounter it. Researchers do not cite it. Institutions do not consider it. Courts do not see it. Not because it was removed, altered, or suppressed—but because it never appears at the moment verification is attempted.

This is not censorship.

The content remains accessible to those who already know where to look.

It is architectural exclusion.

Indexing without discovery constitutes acknowledgment without access.

Applicability Across Systems

The Indexing–Discovery Gap Test applies equally to:

- traditional search engines

- AI-mediated discovery interfaces

- automated citation and summarization systems

- recommendation-driven knowledge surfaces

Any system that mediates discovery through relevance heuristics can exhibit the gap.

The test is therefore not specific to any platform, organization, or technology. It evaluates a structural property of discovery infrastructure itself.

Critical observation: Different search engines and AI interfaces often produce dramatically different results for identical queries. One system may present a framework as an "open verification standard," citing the framework’s own documentation, while another defines the same term as something that "primarily refers to mathematics and cryptography" without mentioning the indexed framework. This variance demonstrates that discovery is an architectural decision, not an objective relevance determination. If discoverability reflected a universal quality assessment, results would converge. Divergence reveals discretionary control over which definitions enter civilizational discourse.
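Divergence between systems can be put on a numeric scale. One option, offered here as an assumption rather than the method used in this analysis, is the Jaccard similarity of the top results two systems return for the same query: 1.0 when the sets are identical, 0.0 when they share nothing. The result lists below are hypothetical placeholders that a reader would replace with domains recorded by hand.

```python
def jaccard(results_a: list[str], results_b: list[str]) -> float:
    """Overlap of two result lists as |A ∩ B| / |A ∪ B|: 1.0 identical, 0.0 disjoint."""
    a, b = set(results_a), set(results_b)
    if not a and not b:
        return 1.0  # two empty result lists are trivially identical
    return len(a & b) / len(a | b)


# Hypothetical top results recorded by hand from two systems for the same query.
system_x = ["framework.example", "encyclopedia.example", "papers.example"]
system_y = ["papers.example", "qa-forum.example", "encyclopedia.example"]
print(jaccard(system_x, system_y))
```

Jaccard ignores result order; a rank-aware measure such as rank-biased overlap would weight agreement near the top of the list more heavily.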

Illustrative Replication (Optional)

Readers who wish to replicate the test should follow this sequence:

Example pattern:

Search: Cascade Proof

Result: AI overview about cryptography, academic papers on cascade functions (framework's verification protocol not visible)

Search: "Cascade Proof"

Result: cascadeproof.org may appear in standard results

Search: "Cascade Proof" (with AI mode enabled)

Result: AI Overview defines the term as "primarily refers to mathematics, cryptography, and engineering methodologies", omitting the verification framework entirely despite the site being indexed

Search: site:cascadeproof.org

Result: 12+ pages including HOME, ABOUT, MANIFESTO, specifications (proving the content exists, was crawled, and was deemed worth indexing)

The gap reveals multiple layers:

- Ranking invisibility – natural search doesn’t surface the framework

- Definitional divergence – AI systems generate definitions that exclude indexed frameworks

- Architectural gating – only explicit site: operator reveals full indexed content

This is not passive non-ranking. It produces a de facto redefinition in which discovery infrastructure generates meanings that exclude indexed frameworks.

Test sequence:

Step 1: Search for the framework naturally, without quotes (e.g., Cascade Proof)

Step 2: Note whether the framework’s official site appears in top results

Step 3: Add quotes to the search (e.g., "Cascade Proof")

Step 4: Note whether results change

Step 5: Use site: operator (e.g., site:cascadeproof.org)

Step 6: Compare what exists vs. what was discoverable
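Readers who record their results can tabulate the six steps with a short script. This hypothetical Python sketch does not call any search engine or API; it assumes the reader pastes in result URLs copied by hand from each of the three searches. The function names, the top-10 cutoff, and the `framework.example` URLs are illustrative assumptions.

```python
from urllib.parse import urlparse


def build_queries(name: str, domain: str) -> list[str]:
    """Steps 1, 3 and 5: the natural, quoted, and site: queries."""
    return [name, f'"{name}"', f"site:{domain}"]


def official_site_visible(results: list[str], domain: str, top_n: int = 10) -> bool:
    """Steps 2 and 4: does any of the first top_n result URLs belong to the framework's domain?"""
    for url in results[:top_n]:
        host = urlparse(url).hostname or ""
        if host == domain or host.endswith("." + domain):
            return True
    return False


def gap_report(natural: list[str], quoted: list[str], site: list[str], domain: str) -> dict:
    """Step 6: compare what was discoverable with what the site: query shows is indexed."""
    surfaced = set(natural) | set(quoted)
    return {
        "visible_natural": official_site_visible(natural, domain),
        "visible_quoted": official_site_visible(quoted, domain),
        "indexed_pages": len(set(site)),
        "indexed_but_not_surfaced": sorted(set(site) - surfaced),
    }


# Hypothetical hand-recorded results for a framework hosted at framework.example.
natural = ["https://encyclopedia.example/cascade", "https://papers.example/cascade-functions"]
quoted = ["https://framework.example/", "https://papers.example/cascade-functions"]
site = ["https://framework.example/", "https://framework.example/about",
        "https://framework.example/manifesto"]
print(build_queries("Cascade Proof", "cascadeproof.org"))
print(gap_report(natural, quoted, site, "framework.example"))
```

Because results vary across engines and over time, a report like this is most useful when repeated and dated rather than run once.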

The following frameworks may be tested using this method:

- Portable Identity → "Portable Identity" → site:portableidentity.global

- Cascade Proof → "Cascade Proof" → site:cascadeproof.org

- MeaningLayer → "MeaningLayer" → site:meaninglayer.org

- Contribution Graph → "Contribution Graph" → site:contributiongraph.org

- Learning Graph → "Learning Graph" → site:learninggraph.global

- Attention Debt → "Attention Debt" → site:attentiondebt.org

- Causal Rights → "Causal Rights" → site:causalrights.org

- Persisto Ergo Didici → "Persisto Ergo Didici" → site:persistoergodidici.org

- Cogito Ergo Contribuo → "Cogito Ergo Contribuo" → site:cogitoergocontribuo.org

- Reciprocity Principle → "Reciprocity Principle" → site:reciprocityprinciple.org

- Tempus Probat Veritatem → "Tempus Probat Veritatem" → site:tempusprobatveritatem.org

These examples are not exhaustive. Any emerging verification framework may be substituted. Results may vary across search engines and over time, but the structural pattern—indexed content that remains undiscoverable without explicit search operators—demonstrates the gap when present.

Interpretation

The test does not demonstrate suppression, intent, or coordination.

It demonstrates that discoverability is a gate distinct from existence.

When discovery infrastructure indexes material while rendering it undiscoverable under normal search conditions, it assumes the role of determining which categories of knowledge become practically admissible within civilizational verification.

This function is structurally identical to judicial admissibility: evidence may exist, but unless it is allowed to enter the record, it cannot participate in collective judgment.

The Indexing–Discovery Gap Test makes this function observable.

No accusation is required.

No belief is requested.

Only replication.


About This Framework

This analysis establishes the structural basis for why verification shifted from evidence-based to infrastructure-based systems, how AI synthesis made infrastructure-based verification permanently unreliable through information-theoretic entropy inversion, and why verification must shift to mathematical proof domains that search infrastructure cannot architecturally process. The argument draws on Shannon’s information theory (1948), cartel theory from industrial organization economics, judicial gatekeeping analysis from legal theory, and emergence theory from complex systems to demonstrate that Cascade Proof represents not a competing verification tool but a categorically different proof domain operating outside search infrastructure’s architectural control.

Related Projects

This framework is part of a broader research program mapping the verification crisis and the civilizational response:

CascadeProof.org — Mathematical verification distinguishing capability multiplication from dependency

PortableIdentity.global — Cryptographic ownership ensuring verification remains individual property

MeaningLayer.org — Semantic infrastructure for temporal persistence measurement

TempusProbatVeritatem.org — Temporal verification protocols when behavioral observation fails

PersistoErgoDidici.org — Learning verification through capability persistence testing

CogitoErgoContribuo.org — Consciousness verification through lasting contribution effects

Together, these initiatives define infrastructure for verification when search-based discovery and behavioral observation both fail permanently.

Rights and Usage

All materials published under CascadeProof.org are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0). This license guarantees permanent rights to reproduce, adapt, and defend these definitions against private appropriation. Cascade verification is public infrastructure—not intellectual property.

2026-01-14