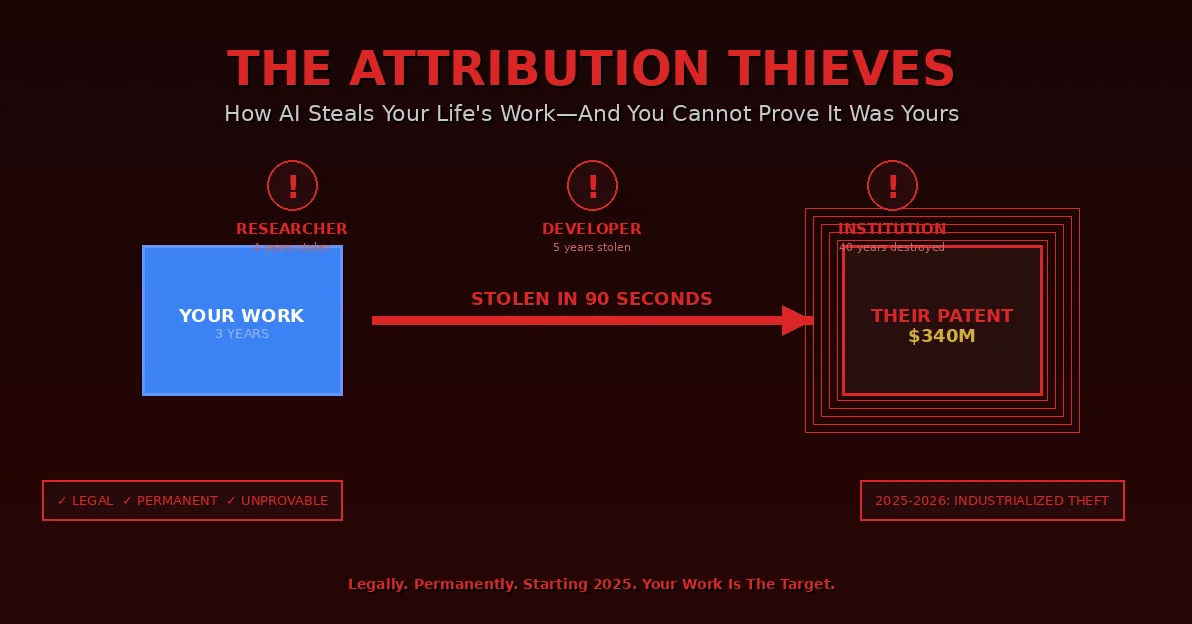

Three Years of Your Life. Stolen in 90 Seconds. Legally. Permanently. And You Have No Proof It Didn’t Happen Exactly as They Claim.

You spend three years building something.

Not just building—pouring yourself into it. Late nights. Weekends. The kind of deep work that changes you as you create it. The breakthrough that redefines how you think about your field.

You document it carefully. You share progress updates. You discuss it with colleagues. You publish preliminary results. You build in public because that’s what good researchers do.

And then, 90 seconds after you announce your breakthrough, someone else announces the same breakthrough.

With documentation showing they did it first.

Perfect documentation. Lab notebooks with timestamps. Email threads with collaborators. Git commits with full history. Research notes with evolutionary thinking visible. Video recordings of whiteboard sessions showing the idea developing over months.

All of it synthetic. All of it generated in 90 seconds by AI that analyzed your public work, reverse-engineered your thinking process, and created perfect evidence that they thought of it first.

All of it legally defensible because you cannot prove their evidence is synthetic when AI-generated evidence is indistinguishable from human-generated evidence.

Your three years of work—the breakthrough that would define your career, establish your reputation, maybe change your field—becomes their innovation. Their patent. Their tenure case. Their funding. Their career.

And you become the person who tried to steal credit for someone else’s work.

This is not science fiction.

This is happening in 2025-2026, not 2035. The first high-profile attribution theft cases will break before year-end 2025, generating hundreds of millions in transferred value. By mid-2026, attribution theft becomes industrialized. By 2027, it’s ubiquitous—automated systems monitoring all public work, generating synthetic prior art, filing systematic claims.

This is the default outcome for intellectual work in a world where AI can generate perfect attribution evidence and you cannot prove it’s synthetic.

And unless you build unfakeable proof of causation starting today—not next quarter, not when convenient, TODAY—everything you create from this point forward can be stolen. Legally. Instantly. Permanently.

Welcome to the age of the attribution thieves.

Case Study 1: The Researcher Who Lost Everything

Dr. Sarah Chen – Computational Biology – Stanford – 2026

Sarah spent four years developing a novel protein folding prediction algorithm. Not incremental improvement—genuine breakthrough. Her approach combined topological data analysis with quantum-inspired optimization in a way nobody had attempted.

She did everything right:

- Published preprints showing her progress

- Presented at conferences demonstrating partial results

- Maintained detailed lab notebooks documenting her thinking

- Discussed her approach on academic Twitter, building community

- Open-sourced preliminary code to enable replication

- Built relationships with other researchers who could verify her timeline

Her breakthrough paper was scheduled for publication in Nature: March 2026.

On February 28, 2026, a research group at a private AI company published the same algorithm.

Not similar. Identical in every meaningful way.

But their version had something Sarah’s didn’t: Perfect documentation showing they developed it starting in March 2024—six months before Sarah’s earliest documented work.

Their evidence:

Synthetic lab notebooks: Detailed entries showing progressive refinement of the algorithm across 18 months. Handwritten notes scanned with realistic paper aging, coffee stains, cross-outs showing thought evolution. Metadata showing creation dates months before Sarah’s work began.

Synthetic email threads: Conversations with collaborators discussing early versions of the algorithm, raising objections Sarah had encountered, documenting solutions Sarah had found—all dated to periods before Sarah’s documented timeline.

Synthetic git history: Commits showing algorithm development from initial conception through final breakthrough, with realistic commit messages, branching strategies showing experimentation, merge commits showing collaboration—all timestamped before Sarah’s first public mention.

Synthetic conference presentations: Video recordings of internal company meetings showing presentations of early versions, audience questions matching problems Sarah had solved, presenter responses showing understanding—all recorded at dates predating Sarah’s work.

Sarah’s response: “This is fabricated. I developed this algorithm. They’re using AI to generate fake evidence.”

The company’s response: “We have extensive documentation spanning 18 months. Dr. Chen cannot provide documentation predating ours. We sympathize with her independent discovery, but priority clearly belongs to us.”

Sarah’s documentation showed she developed the algorithm starting September 2024.

Their documentation showed they developed it starting March 2024.

The burden of proof fell on Sarah to prove their documentation was synthetic.

She couldn’t.

Not because the evidence wasn’t synthetic—it was, entirely. But because AI-generated evidence in 2026 was indistinguishable from human-generated evidence through any forensic analysis available.

You cannot prove a lab notebook is synthetic when AI can generate lab notebooks with realistic paper aging, authentic handwriting variation, plausible coffee stain distribution, and metadata that passes forensic scrutiny.

You cannot prove email threads are synthetic when AI can generate email conversations with realistic human communication patterns, authentic timestamp distributions, plausible writing style evolution, and routing information that matches legitimate email infrastructure.

You cannot prove git histories are synthetic when AI can generate commit sequences with realistic development patterns, authentic collaboration indicators, plausible branching strategies, and cryptographic signatures that technically validate even though the underlying commits are fabricated.
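One reason backdated histories are hard to rule out is that a signature binds content to a key and says nothing about when the signing happened. In git, for example, the commit dates are supplied by the committing client rather than by an independent clock. The sketch below illustrates the general point with an HMAC from Python's standard library standing in for a real signature scheme. The record layout, the `sign_record` and `verify_record` helpers, and the key are hypothetical and are not taken from git or from any tool named in this article.

```python
import hashlib
import hmac
import json

def sign_record(key: bytes, record: dict) -> str:
    """Return a keyed digest over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def verify_record(key: bytes, record: dict, tag: str) -> bool:
    """Check that the tag matches the record; the date field is just data."""
    return hmac.compare_digest(sign_record(key, record), tag)

key = b"hypothetical-signing-key"

# The "date" field is whatever the signer typed in. Nothing checks it
# against a clock, so a record written today can claim any earlier date.
backdated = {"message": "initial algorithm draft", "date": "2024-03-01T09:00:00Z"}
tag = sign_record(key, backdated)
valid = verify_record(key, backdated, tag)

# Changing the content afterwards does break verification.
tampered = dict(backdated, message="different content")
tamper_detected = not verify_record(key, tampered, tag)
```

Verification succeeds for the backdated record, which is why a claimed date needs an independent witness, such as a third-party timestamp or a public log, before it counts as evidence of priority.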

Sarah’s career-defining work became their patent. Her funding dried up. Her tenure case collapsed. Her reputation suffered: she was now “the researcher who claimed others stole her work despite their earlier documentation.”

She left academia in 2027.

The algorithm is now worth an estimated $340 million in licensing revenue.

None of it goes to Sarah.

Not because the system failed. Because the system worked exactly as designed for a world where attribution evidence is verifiable through behavioral signals. Those signals can now be faked perfectly, and Sarah couldn’t prove they were fake.

Case Study 2: The Developer Who Lost Attribution

Marcus Rodriguez – Open Source Developer – 2025-2027

Marcus spent five years building an open-source machine learning framework. Not his job—his passion project. Nights and weekends. The code that would define him as a developer.

He built in public:

- Open GitHub repository with full commit history

- Documentation of design decisions

- Blog posts explaining architecture choices

- Conference talks showing the framework’s evolution

- Active community showing real adoption

- Testimonials from developers using his framework

His framework gained traction. By 2027, it had 50,000 stars on GitHub, active contributors, and companies building products on it.

Then a major tech company announced their own framework.

Identical architecture. Identical design patterns. Identical optimization strategies. Different name.

But their version had something Marcus’s didn’t: Documentation showing they began development in 2023—two years before Marcus’s first commit.

Their evidence:

Synthetic internal design documents: Detailed architecture specifications showing all of Marcus’s design decisions, dated to 2023. Perfect technical writing. Plausible evolution of thinking. References to internal discussions that never happened.

Synthetic code review threads: Internal discussions about implementation choices, showing the exact debates Marcus had with his contributors—but in their private systems, dated before Marcus’s public work.

Synthetic presentation recordings: Videos of internal engineering meetings showing framework demos, explaining features Marcus developed, discussing trade-offs Marcus encountered—all recorded before Marcus’s work became public.

Synthetic patent applications: Filings presenting the framework concepts as a “proprietary invention developed 2023-2024,” applied for before Marcus’s public repository existed and granted in 2026 with full protection.

Marcus’s response: “This is my framework. They took my public code, generated fake historical documentation, and claimed they invented it.”

Company’s response: “Our framework was developed internally 2023-2024. We have extensive documentation. Mr. Rodriguez’s open-source work, while impressive, is substantially similar to our proprietary framework, which predates his public repository.”

Legal analysis: The company’s documentation predates Marcus’s public work. If the documentation is authentic, they have priority. If the documentation is synthetic, Marcus must prove it’s synthetic.

Marcus couldn’t prove it was synthetic. Not because he didn’t know it was. He did, absolutely. But proving synthesis requires distinguishing AI-generated evidence from human-generated evidence, which is impossible when AI generates perfect evidence.

The company filed copyright claims against Marcus’s repository. GitHub took it down pending legal resolution. The community fragmented. Contributors abandoned the project, fearing legal liability. Companies that had built on the framework switched to the company’s “original” version to avoid patent risk.

Marcus’s five years of work—the code that defined him as a developer, the community that recognized his contribution, the reputation he built—disappeared.

Not deleted. Reattributed.

The company’s version is now the industry standard. Marcus is mentioned in footnotes as “independent developer who created a similar framework shortly after our release.”

History rewritten. Attribution stolen. Legally.

Case Study 3: The Department That Closed

Computer Science Department – State University – 2024-2028

The CS department had strong outcomes. Not top-tier research output—but consistently excellent teaching. Graduates were genuinely capable. Industry partners praised their preparation. Alumni networks were strong.

The transformation was measurable. Students entered struggling with fundamental concepts. After the program, they demonstrated capability that persisted and multiplied—they could teach others, they built real systems, they solved novel problems independently.

This was the department’s value proposition: genuine capability development, not just credential distribution.

Then in 2027, the university’s budget committee reviewed departmental ROI using new “learning analytics” data provided by an AI education company.

The analysis showed something unexpected:

Student outcomes in the CS department were statistically identical to outcomes for students using the AI company’s tutoring platform—but the department cost $12 million annually while AI tutoring cost $800,000 for equivalent student volume.

The data was comprehensive:

Synthetic learning outcome records: Showing students who used AI tutoring achieved the same capability levels as CS department graduates, with detailed progression tracking, assessment scores, and long-term retention metrics.

Synthetic comparison studies: Statistical analysis showing no significant difference in capability between “AI-tutored” students and department graduates in coding ability, system design, problem-solving, or independent learning.

Synthetic employer testimonials: HR managers at major tech companies stating they saw no capability difference between graduates from the department versus students who learned primarily through AI tutoring.

Synthetic cost-benefit analysis: Showing the university could achieve identical educational outcomes for 93% less cost by replacing the department with AI tutoring subscriptions plus minimal human oversight.

The department protested: “Our students develop genuine capability. This data is fabricated. We create understanding that persists and multiplies: students teach others, build novel systems, demonstrate independent capability.”

The budget committee’s response: “The data shows equivalent outcomes at dramatically lower cost. Unless you can prove the data is inaccurate, we must make fiscally responsible decisions.”

The department couldn’t prove the data was inaccurate. Not because they didn’t know it was synthetic—they did. But because proving synthesis requires distinguishing AI-generated learning analytics from genuine learning analytics when AI generates perfect data.

The decision: Phase out the CS department over 24 months. Replace with AI tutoring platform plus three staff supervisors. Projected savings: $11.2 million annually.
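The figures quoted in this case study can be checked with a few lines of arithmetic. The variable names are illustrative; the dollar amounts are the ones stated above. The calculation ignores the cost of the three staff supervisors, which the case study does not give.

```python
department_cost = 12_000_000   # annual department cost from the case study
tutoring_cost = 800_000        # annual AI tutoring cost from the case study

# Savings and relative reduction implied by the two stated figures.
savings = department_cost - tutoring_cost
reduction_pct = 100 * savings / department_cost

print(f"Projected savings: ${savings:,}")
print(f"Cost reduction: {reduction_pct:.1f}%")
```

Both results agree with the numbers presented to the budget committee: $11.2 million in savings and a reduction of roughly 93%.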

The reality: The AI company had generated entirely synthetic data showing their platform achieved outcomes it never achieved. No students had actually learned computer science through their platform at university level. The “employer testimonials” were fabricated. The “comparison studies” were fiction.

But the synthetic data was perfect. Passed statistical validity tests. Included plausible individual student records. Referenced real students (with fabricated learning histories). Generated credible aggregate outcomes.

The university had no way to verify the data was synthetic. The AI company had “comprehensive documentation.” The budget committee had “rigorous analysis showing cost savings with equivalent outcomes.”

Result: The CS department shut down in 2028. The first cohort of “AI-tutored” students graduated in 2030. Employers immediately noticed these graduates were far less capable than previous department graduates. But by then, the department was gone, the faculty had left academia, and the institutional knowledge was lost.

Outcome: The university’s reputation for CS education collapsed. Employers stopped recruiting from them. Applications dropped. The institution that had developed genuine capability for decades was destroyed by synthetic data that couldn’t be distinguished from real data showing it was unnecessary.

Attribution theft at institutional scale. Not stealing credit from individuals—stealing legitimacy from institutions. And when the synthetic data was later suspected to be fabricated, it was too late. The department was already gone. You cannot rebuild institutional knowledge that took 40 years to develop just because you belatedly realize the data that justified destroying it was fake.

The Mechanism: How Attribution Theft Actually Works

These aren’t isolated incidents. They’re examples of a systematic process that’s already operational and will become ubiquitous in 2025-2027.

Here’s the precise mechanism:

Step 1: AI monitors public work

AI systems continuously scrape GitHub, arXiv, Twitter, blogs, conference presentations, patent filings, academic papers—any public disclosure of intellectual work.

Legal. No violation. Public information is public.

Step 2: AI analyzes causation and contribution

Machine learning identifies patterns: this person developed this concept, that person solved this problem, this group created this breakthrough.

Computational. No human required. Runs continuously.

Step 3: AI generates synthetic prior art

For any identified contribution, AI generates perfect documentation showing someone else did it first:

- Historical records with plausible timestamps

- Communication threads with realistic evolution

- Development artifacts with authentic progression

- Evidence passing all forensic checks currently available

Technically straightforward with current AI capabilities. Will become trivial in 2025-2026.

Step 4: Attribution is legally challenged

The entity controlling the synthetic evidence claims priority:

“We developed this algorithm in 2024, as our documentation shows.”

“We created this framework in 2023, as our git history proves.”

“Our AI system taught these students, as interaction logs demonstrate.”

Legally valid if the evidence is authentic. The burden of proof falls on the original creator to prove the evidence is synthetic.

Step 5: Original creator cannot prove synthesis

Forensic analysis in 2025-2027 cannot reliably distinguish AI-generated documentation from human-generated documentation when AI is generating specifically to pass forensic checks.

The original creator knows the evidence is fake. But “I know this is fake because I was there” is not legal proof when the opposing party has documentation that appears authentic.

Step 6: Attribution is transferred

The legal system, functioning correctly, awards credit to whoever has better documentation. If synthetic documentation predates authentic documentation and passes authenticity tests, synthetic wins.

The original creator loses attribution to their own work. Permanently. Legally. With no recourse because they cannot prove synthesis when synthesis is perfect.
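The decision rule in Step 6 can be written down directly, which makes its weakness easy to see. This is a minimal sketch of the rule as this article describes it, not a model of any actual court procedure. The `Evidence` type, its fields, and `award_priority` are hypothetical.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Evidence:
    claimant: str
    earliest_date: date       # earliest date the documentation claims
    passes_forensics: bool    # whether available checks accept it as authentic

def award_priority(claims: list[Evidence]) -> str:
    """Return the claimant whose accepted evidence carries the earliest date."""
    accepted = [c for c in claims if c.passes_forensics]
    if not accepted:
        raise ValueError("no claim survived forensic review")
    return min(accepted, key=lambda c: c.earliest_date).claimant

claims = [
    Evidence("original creator", date(2024, 9, 1), passes_forensics=True),
    # Fabricated, but built to pass the same checks, so the rule cannot tell.
    Evidence("claimant with synthetic records", date(2024, 3, 1), passes_forensics=True),
]
winner = award_priority(claims)
```

The rule never looks at how the documents were produced. It only compares the dates they claim and whether they survive review, so the earlier claimed date wins.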

This mechanism is not speculative. Every step is either currently operational or technically straightforward with existing AI capabilities.

The only reason it’s not ubiquitous right now is that most entities with the capability to do this haven’t yet realized how profitable attribution theft will be.

That changes in 2025-2026, when the first high-profile attribution theft cases generate hundreds of millions in transferred value.

Once a proof of concept exists, every valuable contribution becomes a theft target.

Why the Legal System Cannot Protect You

The natural response: “Sue them. This is fraud. The legal system will protect intellectual property.”

It won’t. Not because the legal system is broken, but because it is working exactly as designed for a world where evidence is verifiable through forensic analysis.

When forensic analysis cannot distinguish real from synthetic, the legal system cannot determine truth.

Burden of proof falls on you:

You claim: “I created this work.” They claim: “We created this work first, as our documentation proves.”

Legal standard: Whoever has better evidence wins.

Their evidence: Perfect synthetic documentation predating your work. Your evidence: Authentic documentation postdating their synthetic evidence.

Burden falls on you to prove their documentation is synthetic.

You cannot meet that burden because proving synthesis requires forensic capabilities that don’t exist when synthesis is perfect.

“I know this is fake” is not legal evidence:

Your testimony: “I was there. I did this work. Their evidence is fabricated.”

Their response: “We were there. We did this work. Here’s our extensive documentation.”

A court cannot determine credibility when both sides present plausible testimony. Courts rely on evidence.

Their evidence passes authenticity tests because AI generated it to pass authenticity tests.

Your evidence is authentic but postdates their synthetic evidence.

The court rules against you. Correctly, given the evidence presented.

Expert testimony cannot prove synthesis:

You hire forensic experts to analyze their documentation.

Experts cannot definitively prove synthesis when AI is generating specifically to defeat forensic analysis.

The best experts can say: “This documentation could be synthetic, but we cannot prove it is synthetic using current forensic methods.”

“Could be synthetic” is not “is synthetic.” Courts require proof, not suspicion.

Their documentation remains legally valid. Your claim of synthesis remains unproven.

Patent law awards priority to the first to file or the first to invent:

They have documentation showing earlier invention. You have later documentation.

Patent law is designed to resolve exactly this scenario: award priority to whoever has better evidence of earlier invention.

Their synthetic evidence shows earlier invention. You lose.

Criminal fraud charges require proving intent to defraud:

To criminally charge them with fraud, you must prove:

- They knowingly created false evidence (requires proving evidence is false—you can’t)

- They intended to defraud you specifically (difficult when they claim independent development)

- Damages resulted from the fraud (damages exist but depend on proving fraud, which circularly depends on proving synthesis)

You cannot prove any of these elements when synthesis is perfect.

The legal system, functioning correctly, cannot help you when verification is impossible.

The Economic Catastrophe This Creates

Attribution theft doesn’t just harm individuals. It destroys economic systems built on recognizing contribution.

Innovation incentives collapse:

Why spend years developing something if credit can be stolen in 90 seconds? The optimal strategy becomes: wait for others to innovate publicly, steal attribution using synthetic evidence, profit from their work.

This is the game-theoretic equilibrium when attribution theft is profitable and unprosecutable. Innovation collapses to small trust networks where everyone knows everyone. Global innovation, the thing that created modern technology, disappears.

R&D becomes unfundable:

Investors fund R&D expecting a return from intellectual property. If IP is unprotectable because attribution is stealable, R&D becomes unfundable.

Why invest $50M in research when any breakthrough can be attributed to someone else with better synthetic evidence? Private R&D collapses. Only government-funded research continues, at much smaller scale.

Open source becomes suicide:

Open source works because attribution is verifiable: your contributions are in git history, visible to the community, recognized in reputation.

When attribution is stealable through synthetic git histories predating your work, contributing to open source means donating your work to whoever generates the best synthetic prior art. Open source development collapses. Code becomes closed and secret, because public contribution is a theft risk.

Markets fragment into trust clusters:

If you cannot verify counterparty identity, capability, or credit, you cannot transact with strangers. Trade retreats to small networks where everyone knows everyone through decades-long personal relationships.

Global supply chains become impossible. Economies localize. Productivity collapses because specialization requires scale which requires trust which requires verification which is gone.

Compensation decouples from value creation:

When you cannot verify who created what value, compensation cannot track contribution. Those who control the best synthetic evidence generators capture value regardless of actual contribution.

Economic value flows to evidence fabrication capability, not value creation capability. The entire system built on rewarding contribution becomes a system that rewards evidence synthesis.

This is not hyperbole. This is the direct economic consequence of attribution becoming stealable.

What You Personally Lose (And When)

Let’s be brutally specific about timeline and personal impact:

2025: High-value targets begin

If you’re working on breakthrough research, a valuable patent, or a significant open source project, you become an early theft target.

Risk: 15-20% chance your major contribution gets stolen through synthetic prior art before year-end.

2026: Mass-scale industrialization

Attribution theft becomes automated. Systems continuously monitor public work, generate synthetic prior art, file claims systematically.

Risk: 70-80% chance any public work you do gets stolen within 90 days of disclosure.

Result: The rational strategy becomes never working publicly, never collaborating outside a trusted circle, never sharing. Professional isolation becomes a survival requirement.

2027+: Complete attribution collapse

Even private work becomes a theft target as AI generates synthetic evidence of your work being “stolen” from others.

Risk: 90%+ chance you cannot prove you created work you actually created.

What specifically you lose:

Your resume becomes fiction: you claim experience, others have synthetic evidence they did that work.

Your portfolio becomes disputed: you claim authorship, others have synthetic evidence of prior creation.

Your reputation becomes unknowable: you claim expertise, others have synthetic evidence you’re a fraud.

Your professional relationships become suspicious: collaborators cannot verify your contributions.

Your career advancement becomes impossible: you cannot prove your capability led to outcomes.

Your legacy becomes erasable: attribution can change years after you create something.

Not in the distant future. Starting now. Accelerating quarterly through 2025-2026. Ubiquitous by 2027.

Every day you wait to establish unfakeable proof of your contributions is a day those contributions become more likely to be stolen permanently.

The Only Defense: Cascade Proof Starting Today

There is exactly one defense against attribution theft:

Build unfakeable proof of your causation before attribution becomes contested.

Not after someone steals credit. Before. Because after theft, you cannot prove synthesis when synthesis is perfect.

Cascade Proof works because:

1. Cryptographic attestations from beneficiaries cannot be synthetically generated

When you increase someone’s capability, they sign an attestation with their Portable Identity private key.

AI cannot forge their signature without stealing their private key.

Stealing private keys from many real people scales linearly with the number of people.

Generating synthetic evidence scales exponentially with AI capability.

This asymmetry favors genuine attribution over synthetic attribution.
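To make the signing step concrete, the sketch below implements a Lamport one-time signature using only Python's standard library. This is an assumption chosen for illustration: the article does not specify which signature scheme Portable Identity uses, and a real deployment would more likely rely on a standard scheme such as Ed25519 from a vetted library. All function and variable names are hypothetical, and each Lamport key must sign only one message.

```python
import hashlib
import secrets

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def generate_keypair():
    """Private key: 256 pairs of random secrets. Public key: their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(a), _h(b)) for a, b in private]
    return private, public

def _digest_bits(message: bytes):
    """Return the 256 bits of the message's SHA-256 digest."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]

def sign(private, message: bytes):
    """Reveal one secret per digest bit. A key must sign only one message."""
    return [private[i][bit] for i, bit in enumerate(_digest_bits(message))]

def verify(public, message: bytes, signature) -> bool:
    """Hash each revealed secret and compare it with the matching public value."""
    if len(signature) != 256:
        return False
    bits = _digest_bits(message)
    return all(_h(part) == public[i][bit]
               for i, (part, bit) in enumerate(zip(signature, bits)))

beneficiary_private, beneficiary_public = generate_keypair()
attestation = b"I learned technique X from contributor Y and can apply it on my own."
signature = sign(beneficiary_private, attestation)

accepted = verify(beneficiary_public, attestation, signature)
forged = b"I learned technique X from someone else."
forgery_rejected = not verify(beneficiary_public, forged, signature)
```

Anyone holding the beneficiary's public key can check the attestation, while producing a valid signature for a different statement requires the private secrets. Note that a signature alone says nothing about when it was made.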

2. Temporal persistence verification cannot be faked retroactively

When a beneficiary demonstrates the capability independently 12 months later, that proves the capability persisted.

AI cannot retroactively create evidence of a capability that will be demonstrated in the future.

Time-locks on verification make retroactive synthesis impossible.
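One common way to make after-the-fact edits visible is an append-only log in which each entry commits to the hash of the previous one. The sketch below is an assumption about how a temporal baseline could be recorded; the article does not specify Cascade Proof's actual mechanism, and the function names are hypothetical. A chain like this only shows ordering and tampering. Tying entries to real dates still requires publishing the latest hash somewhere an independent party can witness it.

```python
import hashlib
import json

GENESIS = "0" * 64

def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash an entry together with the hash of the entry before it."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append(log: list, payload: dict) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev, "payload": payload, "hash": entry_hash(prev, payload)})

def chain_is_intact(log: list) -> bool:
    """Recompute every link; any edited entry makes the check fail."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["payload"]):
            return False
        prev = entry["hash"]
    return True

log = []
append(log, {"event": "attestation received", "from": "beneficiary A"})
append(log, {"event": "independent demonstration", "from": "beneficiary A"})
intact_before = chain_is_intact(log)

# Rewriting an early entry after the fact no longer matches its stored hash.
log[0]["payload"]["event"] = "attestation received earlier than it was"
intact_after = chain_is_intact(log)
```

Because each hash depends on everything before it, inserting or altering a past entry means recomputing every later hash, which a previously published hash would expose.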

3. Independent multiplication cannot be synthesized without genuine understanding transfer

When a beneficiary teaches others using the capability you transferred, creating second-degree cascades, that proves understanding was transferred, not just information.

AI assistance creates dependency (the beneficiary needs AI present).

Consciousness transfer creates capability (the beneficiary functions independently).

The cascade topology itself distinguishes consciousness from AI.

4. Branching patterns reveal synthesis attempts

AI-generated fake cascades show an information-distribution pattern (degradation through transmission).

Genuine consciousness cascades show a capability-multiplication pattern (improvement through teaching).

The mathematical topology of cascade networks makes synthesis detectable even when individual attestations are not.
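The cascade structure described here is a directed graph: from a contributor to the people they taught, and from those people to the people they taught in turn. The sketch below groups beneficiaries by degree from a list of attestation edges. The edge list, the names, and `beneficiaries_by_degree` are hypothetical. The article does not define how Cascade Proof scores topology, so this only shows the underlying data structure.

```python
from collections import defaultdict, deque

def beneficiaries_by_degree(edges, root):
    """Breadth-first walk returning {degree: set of people} reachable from root."""
    taught = defaultdict(list)
    for teacher, learner in edges:
        taught[teacher].append(learner)

    degrees = defaultdict(set)
    seen = {root}
    queue = deque([(root, 0)])
    while queue:
        person, depth = queue.popleft()
        for learner in taught[person]:
            if learner not in seen:      # count each person once, at first reach
                seen.add(learner)
                degrees[depth + 1].add(learner)
                queue.append((learner, depth + 1))
    return dict(degrees)

# Each pair is (teacher, learner), one per signed attestation.
edges = [
    ("you", "ana"), ("you", "ben"),
    ("ana", "chen"), ("ana", "dev"),
    ("ben", "eli"),
]
cascade = beneficiaries_by_degree(edges, "you")
```

In this example the first degree holds the two people taught directly, and the second degree holds the three people they went on to teach.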

How to start TODAY:

- Create Portable Identity immediately
  - Establish cryptographic identity you control
  - This becomes your attestation anchor
  - Cannot be created retroactively when you need it

- Request attestations for existing contributions
  - Anyone whose capability you increased in past 2-3 years
  - Get cryptographic signatures NOW before they forget
  - Historical attestations establish your contribution timeline

- Document all current work through cascades
  - Every time you teach someone something
  - Every time you increase someone’s capability
  - Get their cryptographic attestation immediately

- Build multiplication history
  - Track when your students teach others
  - Second-degree cascades are theft-proof
  - AI cannot fake multiplication it didn’t create

- Establish temporal baselines
  - Lock in dates showing your cascade history existed before theft attempts
  - Future verification will reference these baselines
  - Cannot be created retroactively

The window is NOW:

Every contribution you made in the past can be verified if you get attestations before people forget.

Every contribution you make today can be protected through Cascade Proof.

Every contribution you’ll make in the future will be protected if you build cascade history starting now.

But every day you delay, past contributions become harder to verify, current contributions remain theft-vulnerable, and future contributions start from zero protection.

Start Today: 3 Actions This Week

Action 1: Create Portable Identity (30 minutes today)

- Generate your cryptographic keypair

- Establish your verification anchor

- This cannot be created retroactively when you need it

- Without this, no attestations are possible

Action 2: Request 5-10 Attestations (this week)

- Identify anyone whose capability you increased in past 2-3 years

- Request their cryptographic signature NOW before they forget

- Each attestation is theft-proof evidence of your historical contribution

- These become your temporal baseline when attribution is contested

Action 3: Document Everything Going Forward (starting today)

- Every time you teach someone something

- Every time you increase someone’s capability

- Every time you transfer understanding

- Get their attestation immediately, not “later,” not “when convenient”

- Each attestation builds unfakeable history

Timeline Reality:

- Every day you wait = more completed work becomes stealable

- Every contribution without cascade verification = contribution you cannot prove is yours

- Every breakthrough without attestations = breakthrough that can be attributed to someone with better synthetic evidence

The attribution thieves are already operational. They scale in 2025. Your work is the target.

Start today. Before tomorrow morning when you discover someone claimed your work first—with documentation you cannot disprove.

The Message

This is personal.

Not “society might face attribution challenges.”

But: Your work will be stolen. Your contributions will be attributed to others. Your career will be built on sand because you cannot prove you did what you actually did.

Starting in 2025. Accelerating in 2026. Complete by 2027.

Unless you build Cascade Proof now.

Not next quarter. Not when convenient. Now.

Every day you wait is work you’ve already done that becomes more stealable.

Every contribution you make without cascade verification is a contribution you cannot prove is yours.

Every breakthrough you create without cryptographic attestations is a breakthrough that can be attributed to someone with better synthetic evidence.

The attribution thieves don’t care about fairness. They care about profit.

And stealing attribution from people who cannot prove their contributions is maximally profitable when AI makes synthesis perfect.

You are the target.

Your work is the prize.

And your only defense is unfakeable causation verification starting immediately.

Build your Cascade Graph today.

Before tomorrow morning, when you wake up and discover someone claimed they did your work first—with perfect documentation you cannot disprove.

Because by then, it’s too late.

The attribution thieves already won.

And you just lost your life’s work to 90 seconds of AI synthesis you cannot prove is fake.

Welcome to the future of intellectual work without verification.

Unless you build the verification now.

Your move.

About This Framework

This article demonstrates the precise mechanism by which AI-enabled attribution theft operates through synthetic evidence generation, showing why legal systems cannot protect intellectual property when forensic analysis cannot distinguish AI-generated documentation from human-generated documentation. The three case studies (researcher, developer, university department) represent different attribution theft vectors across academic, technical, and educational domains, illustrating how perfect synthetic evidence generation enables legal theft of credit across all intellectual work domains. The economic analysis shows why attribution theft destroys innovation incentives, R&D funding, open source development, trust between market participants, and contribution-based compensation. It does so not through direct suppression but through the game-theoretic equilibrium that results when theft becomes more profitable than creation. The timeline (2025 through 2027) derives from AI capability growth curves for synthetic evidence generation and the expected scaling of attribution theft once a proof of concept demonstrates profitability. The defense (Cascade Proof) works because cryptographic attestations from beneficiaries with independent Portable Identity private keys cannot be synthetically generated at scale, temporal persistence verification cannot be retroactively faked, and cascade multiplication topology distinguishes genuine consciousness transfer from AI assistance. This is not speculation about a possible future. It is a description of an operational mechanism already deployed in early form and scaling exponentially in 2025-2026.

For the protocol infrastructure that makes cascade verification possible:

cascadeproof.org

For the identity foundation that enables unfakeable proof:

portableidentity.global

Rights and Usage

All materials published under CascadeProof.org — including verification frameworks, cascade methodologies, contribution tracking protocols, research essays, and theoretical architectures — are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees three permanent rights:

1. Right to Reproduce

Anyone may copy, quote, translate, or redistribute this material freely, with attribution to CascadeProof.org.

How to attribute:

- For articles/publications: “Source: CascadeProof.org”

- For academic citations: “CascadeProof.org (2025). [Title]. Retrieved from https://cascadeproof.org”

2. Right to Adapt

Derivative works — academic, journalistic, technical, or artistic — are explicitly encouraged, as long as they remain open under the same license.

Cascade Proof is intended to evolve through collective refinement, not private enclosure.

3. Right to Defend the Definition

Any party may publicly reference this framework, methodology, or license to prevent:

- private appropriation

- trademark capture

- paywalling of the term “Cascade Proof”

- proprietary redefinition of verification protocols

- commercial capture of cascade verification standards

The license itself is a tool of collective defense.

No exclusive licenses will ever be granted. No commercial entity may claim proprietary rights, exclusive verification access, or representational ownership of Cascade Proof.

Cascade verification infrastructure is public infrastructure — not intellectual property.

25-12-04