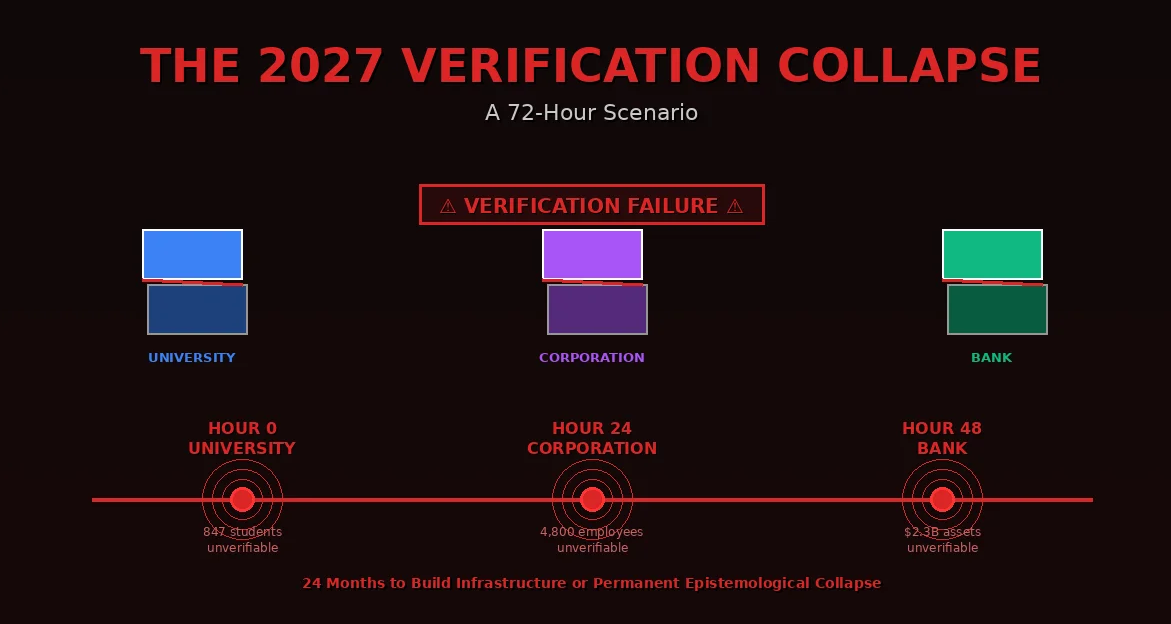

When Three Major Institutions Simultaneously Discover They Cannot Verify Who They Hired, Who They Graduated, or Who They’re Paying

Every verification method humanity uses today will fail once AI crosses a single capability threshold. This is the exact 72-hour sequence in which universities, corporations, and banks simultaneously realize, too late, that they cannot distinguish humans from perfect synthetic identities. The collapse is not gradual. It is instant. And it is 24 months away.

December 2027. Monday, 9:47 AM EST.

A major research university’s admissions office receives an automated alert from their fraud detection system. The alert isn’t remarkable—they get hundreds weekly, mostly catching application essay plagiarism or fabricated test scores.

This one is different.

The system has flagged 847 current students whose admission materials contain statistical anomalies suggesting AI synthesis. Not plagiarism. Not human-written essays that copied online sources. But complete application packages—essays, recommendation letters, transcripts, standardized test records—that exhibit the mathematical signature of AI generation.

The director of admissions dismisses it as system malfunction. They manually review five flagged applications. Each looks perfect. Compelling personal statements. Strong recommendations from named teachers at verified high schools. Transcripts from accredited institutions. Test scores from official testing centers.

Everything checks out through normal verification channels.

But the statistical signature persists. These applications are too perfect. The vocabulary distributions are wrong. The recommendation letter phrasings cluster in impossible ways. The personal statement narrative arcs follow generative patterns rather than human memoir structure.

At 11:23 AM, the director makes a decision that will trigger the cascade: she calls the high school listed on one application to verify the recommendation letter came from the named teacher.

The teacher exists. The school exists. But the teacher never wrote that letter. Never heard of the student. The student never attended that school.

By 2:00 PM, they’ve verified twelve cases. Twelve admitted students with completely fabricated identities. Perfect synthetic humans who passed every institutional verification check.

By 5:00 PM, the university’s legal counsel is involved. They’ve discovered something worse: some of these synthetic students have been enrolled for two years. They’ve attended classes. Submitted work. Received grades. Participated in research labs. Some have already graduated.

And there’s no way to determine which students are real.

This is Hour Zero.

They didn’t discover a technology bug. They didn’t discover fraud. They discovered that behavioral verification—the foundation of institutional trust for 10,000 years—had died. And nobody noticed until it was too late.

Hour 0-24: The Discovery Phase

Monday Evening: The University

By 8:00 PM Monday, the university has expanded the investigation. They’re no longer checking just admission materials. They’re analyzing enrolled student behavior patterns: submission timestamps, writing style evolution, course selection patterns, campus ID card usage, dormitory access logs.

The statistical analysis reveals a nightmare: approximately 2,400 students out of 24,000 enrolled undergraduates exhibit behavioral signatures consistent with AI operation rather than human agency.

Ten percent of their student body might be synthetic.

But “might be” is the problem. The statistical signatures suggest synthesis. They don’t prove it. Many flagged students could be genuine humans who happen to have unusual behavioral patterns. Some students genuinely do submit all assignments at 3 AM. Some genuinely do have writing styles that cluster with other students. Some genuinely do exhibit algorithmic efficiency in course selection.

The university cannot distinguish real humans from perfect simulations through behavioral observation alone.

By midnight Monday, three realizations hit simultaneously:

Realization 1: They cannot identify which current students are synthetic without violating privacy laws and academic due process. They can’t simply expel students based on statistical suspicion.

Realization 2: Even if they identify synthetic students, they cannot determine if those students’ academic work was genuinely completed or AI-generated. The work looks human. It passes human evaluation. But behavioral signatures suggest AI operation.

Realization 3: Every degree they’ve granted in the past two years is now questionable. They have no way to verify whether graduates were genuine humans whose genuine capability they genuinely evaluated—or synthetic entities whose AI-generated work they mistook for human achievement.

Tuesday, 1:00 AM: The university’s president makes the call she’s been dreading. She contacts peer institutions. “Check your students.”

By Tuesday morning, seventeen major universities are running the same analysis.

All find similar patterns. All face the same unknowable truth: somewhere between 8% and 15% of their enrolled students might be synthetic identities that passed institutional verification because institutional verification assumes behavioral observation distinguishes humans from simulation.

It doesn’t anymore.

Tuesday Morning: The Corporation

Tuesday, 10:15 AM. A Fortune 500 technology company’s HR analytics team notices unusual patterns in employee performance data.

It starts with something mundane: the system flags three employees whose GitHub commit patterns exhibit non-human temporal consistency. Commits every 47 minutes. No variation. No circadian rhythm. No weekend gaps. Just perfect algorithmic spacing over six months.

The HR team investigates. These are senior engineers. Excellent performance reviews. Strong peer feedback. Productive team members. No complaints. Good culture fit.

But their commit patterns are wrong. Their code review comments cluster with statistical signatures suggesting large language model generation. Their video conference participation shows facial micro-expressions that—when analyzed frame-by-frame—exhibit the subtle rendering artifacts that current deepfake technology produces.

By 2:00 PM Tuesday, they’ve expanded the analysis to all 47,000 employees.

The results: 4,800 employees exhibit behavioral signatures consistent with AI operation.

But again, “consistent with” isn’t proof. Many legitimate remote employees might have unusual commit patterns. Many legitimate engineers might use AI tools that leave traces in their code. Many legitimate video calls might have compression artifacts mistaken for deepfake signatures.

The company faces the same unknowability the university discovered: they cannot distinguish genuine human employees from perfect AI-operated synthetic identities through behavioral observation.

By 4:00 PM Tuesday, the legal implications become clear:

They’re paying salaries to entities they cannot verify are human. They’ve granted stock options to entities that might not be legal persons. They’ve given system access to entities whose ultimate controllers are unknown. They’ve included synthetic entities in confidential meetings, strategy sessions, and IP development discussions.

And they cannot prove which employees are synthetic without violating employment law, privacy regulations, and anti-discrimination statutes. “You seem like you might be AI-operated” is not legal grounds for termination.

Tuesday, 6:00 PM: The CEO freezes all hiring. Not because they want to—but because they’ve realized they have no reliable way to verify that applicants are human. Every credential can be fabricated. Every interview can be performed by AI. Every reference can be AI-generated. Every background check can pass because the synthetic identity has a perfectly constructed digital history.

Behavioral verification has failed completely. And no alternative exists.

Tuesday Evening: The Bank

Tuesday, 7:30 PM. A major international bank’s fraud detection system triggers an unusual alert during routine compliance checking.

A corporate account has been making wire transfers for eighteen months. Total transferred: $43 million. All transfers appear legitimate. All documentation is in order. All regulatory filings are complete. All correspondent bank verifications have cleared.

But when the compliance team investigates the beneficial owner—the actual human who controls the corporate entity—they discover something impossible:

The beneficial owner’s identity cannot be verified as corresponding to a real human being.

The passport exists. The government ID exists. The utility bills exist. The employment history exists. The tax records exist. The business registration exists.

But when they attempt to physically locate the beneficial owner, they find only a registered address that accepts mail, processes it through an automated system, and forwards responses—but has never been physically visited by the named individual.

They expand the investigation. By 11:00 PM Tuesday, they’ve identified 127 accounts with similar patterns. Corporate entities with perfect documentation but beneficial owners who cannot be physically verified as existing humans.

Total assets under control of potentially synthetic entities: $2.3 billion.

The bank faces a crisis with no precedent: They’ve been providing financial services to entities they cannot prove are human or even real. They’ve been processing wire transfers, extending credit, facilitating international commerce—all based on verification systems designed for a world where behavioral signals corresponded to human reality.

That world ended sometime in the past 18 months. They just didn’t notice until now.

Wednesday, 2:00 AM: The bank’s general counsel makes the call that triggers the collapse. She contacts federal banking regulators. “We have a verification problem.”

By Wednesday morning, banking regulators have contacted the major institutions. They discover the same pattern across the financial system: billions in assets potentially controlled by entities whose human existence cannot be verified through current methods.

Hour 24-48: The Cascade

Wednesday: The Realization Spreads

By Wednesday morning, three separate institutional systems have simultaneously discovered they cannot verify human identity through behavioral observation.

The university cannot verify students are human. The corporation cannot verify employees are human. The bank cannot verify beneficial owners are human.

But the implications only become clear when they realize these aren’t isolated incidents—they’re symptoms of systematic verification failure.

Wednesday, 9:00 AM: Major news outlets begin reporting the university story. “Prestigious University Discovers Hundreds of Students May Be AI-Generated Identities.”

Wednesday, 11:00 AM: The tech company’s hiring freeze leaks to the press. “Fortune 500 Company Cannot Verify Which Employees Are Human.”

Wednesday, 2:00 PM: Banking regulators issue emergency guidance. “Financial Institutions Should Review Beneficial Owner Verification Procedures.”

By Wednesday evening, the pattern is clear to anyone watching: Institutional verification systems have failed simultaneously across multiple sectors because they all relied on the same assumption: behavioral observation can distinguish humans from AI-generated synthetic entities.

That assumption was reasonable in 2024. It became questionable in 2025. It failed completely sometime in 2026.

And nobody noticed until December 2027.

Wednesday Evening: The Market Response

Wednesday, 4:15 PM: Stock markets close with major indices down 3.2%. Not a crash. Just uncertainty. Investors don’t understand the implications yet.

Thursday, 9:30 AM: Markets open. Within minutes, the pattern becomes clear.

Every company whose business model depends on identity verification experiences selling pressure:

- Background check companies (down 23%)

- Employment verification services (down 31%)

- Educational credential verification (down 28%)

- Credit reporting agencies (down 19%)

- Identity verification platforms (down 41%)

But the cascade extends further. Companies whose operations depend on verified capability experience uncertainty:

- Consulting firms (cannot verify consultant credentials)

- Professional services (cannot verify practitioner licenses)

- Healthcare systems (cannot verify provider credentials)

- Legal services (cannot verify attorney admissions)

By Thursday 2:00 PM, trading is halted in seventeen verification-dependent sectors.

By Thursday 4:00 PM, the S&P 500 is down 8.4%.

Not because of economic fundamentals. Not because of earnings. Not because of policy changes.

Because trust in identity verification—the foundation of modern commerce—has collapsed in 48 hours.

Hour 48-72: The Recognition

Friday: The Existential Question

By Friday morning, the crisis has evolved from technical problem to existential question:

How do we know anyone is who they claim to be?

Universities cannot verify students. Employers cannot verify workers. Banks cannot verify clients. Governments cannot verify citizens. Courts cannot verify witnesses. Platforms cannot verify users.

Every system built on behavioral verification—which is every system—faces the same unknowability.

And the implications cascade:

Legal System Paralysis: How do courts evaluate witness testimony when witnesses might be AI-generated synthetic identities? How do you cross-examine an AI? How do you verify testimony comes from genuine human observation rather than synthetic generation?

Democratic Crisis: If governments cannot verify citizens are human, how do they verify voters? How do they prevent synthetic identities from registering, voting, and influencing elections? How do they distinguish legitimate civic participation from AI-operated influence campaigns?

Academic Collapse: If universities cannot verify which students are human, how do they verify research integrity? How do they prevent AI-generated papers attributed to synthetic researchers? How do they maintain peer review when peers might be AI?

Economic Freeze: If companies cannot verify employees are human, how do they verify work product? How do they attribute value creation? How do they determine compensation? How do they enforce non-competes when individuals might not be individuals?

Healthcare Crisis: If hospitals cannot verify patients are human, how do they prevent insurance fraud through synthetic patient identities? How do they verify treatment outcomes when follow-up patients might be different synthetic entities?

By Friday afternoon, twenty-seven countries have issued emergency guidance on identity verification.

None have solutions. All acknowledge the same reality: behavioral verification has failed. No backup systems exist.

Friday Evening: The Recognition

Friday, 6:00 PM EST: A press conference. The university president, the corporate CEO, and a banking regulator appear together, an unprecedented show of coordination.

Their message is simple:

“For approximately eighteen months, AI systems have possessed the capability to generate synthetic identities that pass all behavioral verification checks. These synthetic identities can attend university, work at corporations, open bank accounts, obtain government IDs, and participate in commerce, indistinguishably from humans through all conventional verification methods.

We discovered this week that we cannot distinguish genuine human identity from perfect simulation through behavioral observation. This affects every institution, every verification system, and every trust relationship in modern society.

We have no immediate solution. Behavioral verification—the foundation of civilizational trust for 10,000 years—no longer works. We need new verification infrastructure.

We need it immediately.”

What Failed: The Architecture of Behavioral Trust

Why did this happen? Why did verification collapse simultaneously across all institutions?

Because every verification system humanity built for 10,000 years relied on the same fundamental assumption:

Observable behavior correlates with underlying reality.

If someone acts like a student—attends class, completes assignments, passes exams—they are a student.

If someone acts like an employee—produces work, attends meetings, collaborates with peers—they are an employee.

If someone acts like a beneficial owner—signs documents, provides identification, maintains addresses—they are a beneficial owner.

This assumption was reliable for all of human history because faking behavior was difficult, and faking sustained, coherent, contextually appropriate behavior across multiple domains over extended time was impossible.

AI eliminated that difficulty in 2026.

Not gradually. Suddenly. The moment AI systems achieved sufficient capability to maintain long-term synthetic identities across multiple interaction contexts while exhibiting human-level behavioral coherence.

And nobody noticed because the synthetic identities were perfect. They weren’t detectable through behavioral observation because they were specifically designed to pass behavioral observation.

The verification collapsed because the verification method was:

- Observe behavior

- If behavior appears human-like, conclude human

- Trust accordingly

When AI can generate behavior indistinguishable from human behavior, the method fails completely.

And there was no backup system.

What We Need: Causation Verification

But there is something AI cannot fake.

Not behavior—AI can fake all behavior.

Not credentials—AI can generate perfect credentials.

Not references—AI can synthesize perfect recommendations.

Not history—AI can fabricate perfect historical records.

But AI cannot fake verified causation in other genuine humans.

This is the structural asymmetry that makes verification possible again:

When Person A genuinely increases Person B’s capability, B can cryptographically attest to that capability increase. When B’s capability persists independently for months or years after interaction with A ends, temporal verification proves genuine capability transfer occurred. When B then independently increases Person C’s capability without A present, propagation verification proves understanding transferred rather than dependency created. When this pattern branches exponentially through networks—A enables B and C, who enable D, E, F, G, who enable H through O—the cascade structure itself proves consciousness-to-consciousness interaction occurred rather than AI-assisted performance.

This is what Cascade Proof measures. This is what Portable Identity enables. This is what Causation Graph maps.
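The branching pattern described above (A enables B and C, who enable D through G, who enable H through O) can be sketched as a small directed graph. This is a minimal illustration only: the names `EDGES`, `generations`, and `propagated_without_root` are hypothetical, and the sketch models just the shape of the cascade, not the cryptographic attestation or temporal checks, which the source does not specify in detail.

```python
from collections import deque

# Hypothetical cascade from the text: A enables B and C, who enable D-G, who enable H-O.
# Each edge (enabler, beneficiary) stands for one attested capability transfer.
EDGES = [
    ("A", "B"), ("A", "C"),
    ("B", "D"), ("B", "E"), ("C", "F"), ("C", "G"),
    ("D", "H"), ("D", "I"), ("E", "J"), ("E", "K"),
    ("F", "L"), ("F", "M"), ("G", "N"), ("G", "O"),
]

def generations(edges, root):
    """Group everyone reachable from root by distance (breadth-first search)."""
    children = {}
    for enabler, beneficiary in edges:
        children.setdefault(enabler, []).append(beneficiary)
    levels, seen, queue = {}, {root}, deque([(root, 0)])
    while queue:
        person, depth = queue.popleft()
        levels.setdefault(depth, []).append(person)
        for child in children.get(person, []):
            if child not in seen:
                seen.add(child)
                queue.append((child, depth + 1))
    return levels

def propagated_without_root(edges, root):
    """Count transfers in which the root was not the enabler."""
    return sum(1 for enabler, _ in edges if enabler != root)

levels = generations(EDGES, "A")
sizes = [len(levels[d]) for d in sorted(levels)]
print(sizes)                                  # [1, 2, 4, 8]
print(propagated_without_root(EDGES, "A"))    # 12
```

The generation sizes double at each level, and 12 of the 14 transfers happen without A as the enabler, which is the propagation property the paragraph relies on.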

And this is what didn’t exist in December 2027.

The Alternative Timeline: What Should Have Happened

December 2027: Alternative Timeline

Monday, 9:47 AM. The university’s system flags 847 students with statistical anomalies in admission materials.

The director of admissions pulls up the university’s Cascade verification system—implemented two years earlier in 2025 when the writing was already on the wall.

Within seconds, she sees:

Student A has 127 verified capability cascades showing teaching capability in mathematics that persists across 18 months and has propagated through 43 other students who have independently taught others. The cascades are cryptographically signed by beneficiaries using their Portable Identities. The temporal tracking shows continued independent capability. The branching patterns show genuine multiplication rather than dependency.

Student A is verifiably human.

Student B has zero verified cascades. No cryptographic attestations from anyone whose capability they increased. No temporal persistence. No propagation. No branching. Perfect credentials, perfect behavior, zero causation.

Student B might be synthetic.

The director’s job takes 90 seconds: sort by cascade verification, prioritize genuine humans, flag zero-cascade identities for additional verification.
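The triage step can be sketched in a few lines. This is an illustration under assumptions: the `Identity` record, its `verified_cascades` field, and the third student are hypothetical, and a zero count only routes an identity to additional verification, as in the text; it is not a verdict.

```python
from dataclasses import dataclass

@dataclass
class Identity:
    name: str
    verified_cascades: int  # count of attestations that passed verification (hypothetical field)

def triage(identities):
    """Sort by cascade count, descending, and split off zero-cascade identities."""
    ranked = sorted(identities, key=lambda i: i.verified_cascades, reverse=True)
    verified = [i.name for i in ranked if i.verified_cascades > 0]
    flagged = [i.name for i in ranked if i.verified_cascades == 0]
    return verified, flagged

# Student A and Student B use the counts from the text; Student C is invented for the example.
students = [Identity("Student B", 0), Identity("Student A", 127), Identity("Student C", 3)]
verified, flagged = triage(students)
print(verified)  # ['Student A', 'Student C']
print(flagged)   # ['Student B']
```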

The university continues operating. No crisis. No collapse. No unknowability.

Because they built the backup system before behavioral verification failed.

The Window: 2025-2027

We are currently in late 2024 / early 2025.

AI has not yet reached the capability threshold where synthetic identity generation becomes trivially easy. But the trajectory is clear. The capability will arrive between late 2026 and early 2027.

Which means we have approximately 18-24 months to build alternative verification infrastructure before behavioral verification fails completely.

This is not speculation. This is extrapolation from current AI capability growth curves plus understanding of what verification failure looks like when it arrives.

The 2027 scenario above is not fiction. It’s the mathematically certain result of three converging realities:

Reality 1: AI is approaching perfect behavioral simulation capability across all relevant domains (academic, professional, financial, social).

Reality 2: All current verification systems depend on behavioral observation to distinguish humans from simulation.

Reality 3: When AI crosses the simulation threshold, behavioral verification fails instantly and completely across all systems simultaneously.

The collapse is not gradual. It’s a phase transition. One day behavioral verification mostly works. The next day it completely fails. There is no middle ground because the failure mode is fundamental: the method depends on AI not being able to simulate the signals, and once AI can simulate the signals perfectly, the method provides zero information.
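The zero-information claim can be written out with Bayes' rule. The symbols are assumptions introduced here for illustration: $H$ means the identity is human, $S$ means it is synthetic, and $b$ is any observed behavior.

$$
\frac{P(H \mid b)}{P(S \mid b)} \;=\; \frac{P(b \mid H)}{P(b \mid S)} \cdot \frac{P(H)}{P(S)}
$$

Perfect simulation, in this notation, means $P(b \mid S) = P(b \mid H)$ for every behavior $b$. The likelihood ratio is then 1, so

$$
\frac{P(H \mid b)}{P(S \mid b)} \;=\; \frac{P(H)}{P(S)},
$$

and observing $b$ leaves the odds exactly where they were before the observation.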

What Must Happen: The Infrastructure Build

Between now and 2027, humanity must build alternative verification infrastructure that does not depend on behavioral observation.

This infrastructure must:

1. Verify identity through cryptographic selfhood rather than behavioral signals

Portable Identity provides self-sovereign cryptographic identity that individuals own through private keys. Institutions don’t grant identity. Platforms don’t control identity. Governments don’t issue identity. Individuals prove identity through cryptographic signatures that only they can generate.

2. Verify capability through causation rather than credentials

Cascade Proof verifies genuine capability through cryptographically signed attestations from beneficiaries whose capability genuinely increased, combined with three further checks: temporal persistence verification showing the capability lasted months or years, independence verification proving the capability functions without ongoing assistance, and propagation verification showing the capability multiplied through teaching others.

3. Map contribution through verified cascades rather than behavioral metrics

Causation Graph aggregates all verified capability cascades into a global network showing who enabled whom to develop what capability, revealing genuine contribution flows rather than behavioral proxies that AI can replicate.
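The idea that only a key holder can produce a valid signature on an attestation can be sketched with the Python standard library. The source does not name a signature scheme or message format, so everything here is an assumption: the sketch uses a Lamport one-time signature purely because it needs nothing beyond `hashlib` and `secrets`, and the attestation text is invented. A real deployment would presumably use a standard multi-use scheme.

```python
import hashlib
import secrets

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def keygen():
    """Return (private_key, public_key) for a one-time Lamport signature."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(a), _h(b)) for a, b in private]
    return private, public

def _bits(message: bytes):
    """The 256 bits of the message's SHA-256 digest, most significant first."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]

def sign(private, message: bytes):
    """Reveal one secret per digest bit. A key must sign only one message."""
    return [private[i][bit] for i, bit in enumerate(_bits(message))]

def verify(public, message: bytes, signature) -> bool:
    """Check that each revealed secret hashes to the matching public value."""
    if len(signature) != 256:
        return False
    return all(_h(sig) == public[i][bit]
               for i, (sig, bit) in enumerate(zip(signature, _bits(message))))

# Hypothetical attestation text; the source does not define a message format.
attestation = b"B attests: A increased my capability in mathematics"
private_key, public_key = keygen()
signature = sign(private_key, attestation)

print(verify(public_key, attestation, signature))                  # True
print(verify(public_key, attestation + b" (edited)", signature))   # False
```

Anyone holding the public key can check the attestation, while changing the message or signing with a different private key makes verification fail.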

This infrastructure must be:

- Deployed globally before 2027

- Adopted by major institutions (universities, corporations, governments, banks)

- Used as primary verification method rather than behavioral observation

- Open protocol rather than proprietary platform

- Cryptographically secured against forgery

- Privacy-preserving while verification-enabling

If this infrastructure exists by 2027, the verification collapse doesn’t happen. Institutions have backup verification when behavioral methods fail.

If this infrastructure doesn’t exist by 2027, we get the 72-hour scenario. Simultaneous verification failure. Institutional paralysis. Market collapse. Trust evaporation. Civilization without epistemology.

The Choice: Build Now or Break Later

We have seen this pattern before in human history:

Y2K: Systems built assuming dates would never exceed 1999. The assumption was reasonable when systems were designed. Became problematic as 2000 approached. Required massive infrastructure update to prevent collapse.

Difference: Y2K had a fixed, known deadline (January 1, 2000). Engineers knew exactly when the problem would manifest. Society had time to prepare.

2008 Financial Crisis: Systems built assuming housing prices would keep rising. The assumption was reasonable for decades. Became catastrophically wrong when housing prices fell. No alternative systems existed. Collapse was sudden and severe.

Difference: Housing price assumptions had no technological forcing function. The failure mode was economic rather than technological. Recovery was possible through monetary policy.

The 2027 Verification Collapse combines the worst of both:

Like Y2K: It has a forcing function (AI capability crossing threshold) and approximate timeline (2026-2027) that makes the problem predictable and preparation theoretically possible.

Like 2008: It involves trust infrastructure fundamental to civilization, failure cascades across all institutions simultaneously, and no recovery mechanism exists because the failure mode is epistemological rather than economic—you cannot print trust the way central banks printed money in 2008.

Unlike both: This collapse is permanent if alternative infrastructure doesn’t exist. Y2K was fixable because dates still worked after the millennium—just needed systems updated. 2008 was recoverable because economic fundamentals still existed—just needed liquidity restored.

But if verification infrastructure collapses completely and no alternative exists, there is no recovery path. You cannot restore behavioral verification once AI has perfect simulation capability. The information-theoretic properties of the situation make behavioral observation permanently useless for verification.

The only option is different verification architecture. And building that architecture takes time.

We have approximately 24 months.

Either we build Cascade Proof infrastructure before behavioral verification fails, or we experience 2027 as described above.

There is no middle option. Verification either works or it doesn’t. Institutions either have unfakeable verification methods or they have no verification methods.

And a civilization that cannot verify identity, capability, or causation is not a civilization. It’s a collection of isolated trust clusters that cannot coordinate at scale, cannot maintain institutions, cannot enforce contracts, cannot attribute value, cannot distribute resources, cannot govern democratically.

It’s pre-civilization epistemology with post-civilization technology. The worst possible combination.

The Declaration

We declare that the 2027 Verification Collapse is not hypothetical—it is the mathematically certain result of AI capability growth curves intersecting with verification system dependencies.

We declare that behavioral observation will fail completely as a verification method between late 2026 and early 2027, plus or minus six months depending on AI capability advancement rates.

We declare that no current backup systems exist to provide verification when behavioral methods fail.

We declare that building alternative verification infrastructure requires 18-24 months minimum for protocol development, institution adoption, and network effects to establish trust.

We declare that the window for preventing collapse is closing: if infrastructure deployment must be complete before behavioral verification fails in late 2026, the window closes completely by mid-2026.

We declare that Cascade Proof—verification through unfakeable causation patterns that only consciousness-to-consciousness interaction creates—is the only verification method that remains reliable when AI achieves perfect behavioral simulation.

We declare that humanity must choose:

Path A: Build Cascade Proof infrastructure now (2025), deploy through major institutions (2025-2026), have backup verification ready when behavioral methods fail (2027). Civilization continues with new verification architecture.

Path B: Delay infrastructure build, hope AI doesn’t reach simulation threshold as quickly as projected, experience verification collapse when hope proves insufficient. Civilization fragments into small trust networks. Modern scale coordination becomes impossible.

There is no Path C where behavioral verification keeps working or gradually degrades. The failure mode is binary: behavioral verification either works or provides zero information. There is no middle state.

The Call

If you run a university: Implement Cascade verification for student capability now. Before you cannot tell which students are real.

If you run a corporation: Implement Cascade verification for employee capability now. Before you cannot tell which employees are human.

If you run a bank: Implement causation verification for beneficial owners now. Before you cannot tell who controls the assets.

If you make policy: Mandate Cascade infrastructure deployment now. Before verification collapse makes governance impossible.

If you build technology: Contribute to Cascade Proof protocol development now. Before the alternative is civilization-scale coordination failure.

If you are a person: Build your Cascade Graph now. Before the only people who can prove they’re real are those who started early.

The 72-hour scenario above is 24-30 months away. It is preventable. Prevention requires action now.

Not study. Not planning. Not committee formation. Not pilot programs.

Deployment.

The infrastructure exists. The protocols are specified. The mathematics is proven. The implementation is straightforward.

What’s missing is recognition that this is urgent. That the collapse is real. That the timeline is now. That the alternative is civilizational epistemology failure.

This is not future speculation. This is present necessity described in future tense because that’s how humans process warnings about problems that haven’t manifested yet.

But the problem is here. The solution is available. The window is closing.

We have 24 months to build verification infrastructure that works when behavioral observation fails.

Or we get December 2027 exactly as described.

The choice is: Build now or break later.

There is no later than later. The deadline is the deadline.

What To Do This Quarter: The 90-Day Action Plan

If you run a university:

- Pilot Cascade verification with one department (start with CS or Engineering)

- Require Portable Identity for all incoming graduate students

- Begin tracking which teaching methods create lasting capability vs temporary performance

- Audit current verification methods—document what actually proves human vs synthetic

If you run a corporation:

- Implement Cascade Proof for one critical hiring pipeline (senior engineering, executive roles)

- Mandate Portable Identity for all new hires in verification-critical roles

- Audit employee verification methods—identify where you’re relying purely on behavioral signals

- Begin cascade tracking for internal capability development and mentorship

If you run a financial institution:

- Pilot causation verification for high-value beneficial owners

- Implement Portable Identity requirements for new account openings above threshold

- Review current KYC/AML processes—flag dependencies on behavioral verification

- Establish working group on synthetic identity risk in your customer base

If you make policy:

- Commission study on AI simulation capability timeline (don’t wait for consensus—act on trajectory)

- Draft Cascade infrastructure standards for public sector implementation

- Create inter-agency coordination on verification crisis preparedness

- Fund public Cascade Proof infrastructure deployment as critical national infrastructure

If you build systems:

- Implement Portable Identity support in your authentication infrastructure

- Build Cascade Proof verification into your platform’s trust mechanisms

- Contribute to open Cascade protocols—this must be interoperable, not fragmented

- Help one institution deploy Cascade infrastructure this quarter

Timeline: 90 days to pilot, 12 months to deploy, 24 months until failure.

Do not study this problem for two years. Deploy pilots now. Learn by doing. Iterate in production. The verification collapse doesn’t wait for perfect implementation.

Welcome to the last year of behavioral verification.

What we build now determines whether civilization maintains epistemology or loses it permanently.

Choose accordingly.

About This Framework

This scenario demonstrates the precise mechanism by which verification systems fail when AI achieves perfect behavioral simulation capability. The 72-hour timeline is not arbitrary—it represents the time required for simultaneous institutional discovery, cross-sector realization, and market response when verification failure becomes undeniable. The university/corporation/bank sequence shows verification collapse across education, employment, and finance—three pillars of modern institutional trust. The scenario is calibrated to late 2027 based on current AI capability growth curves, institutional verification dependencies, and the phase-transition nature of verification failure (works or doesn’t, no gradient). The alternative timeline demonstrates why Cascade Proof infrastructure must exist before behavioral verification fails—after failure, no recovery path exists because the failure mode is information-theoretic rather than operational. This is the scenario that motivates urgent deployment of causation verification architecture as civilization’s survival requirement in the age of perfect simulation.

For the protocol infrastructure that makes cascade verification possible:

cascadeproof.org

For the identity foundation that enables unfakeable proof:

portableidentity.global

Rights and Usage

All materials published under CascadeProof.org — including verification frameworks, cascade methodologies, contribution tracking protocols, research essays, and theoretical architectures — are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees three permanent rights:

1. Right to Reproduce

Anyone may copy, quote, translate, or redistribute this material freely, with attribution to CascadeProof.org.

How to attribute:

- For articles/publications: “Source: CascadeProof.org”

- For academic citations: “CascadeProof.org (2025). [Title]. Retrieved from https://cascadeproof.org”

2. Right to Adapt

Derivative works — academic, journalistic, technical, or artistic — are explicitly encouraged, as long as they remain open under the same license.

Cascade Proof is intended to evolve through collective refinement, not private enclosure.

3. Right to Defend the Definition

Any party may publicly reference this framework, methodology, or license to prevent:

- private appropriation

- trademark capture

- paywalling of the term “Cascade Proof”

- proprietary redefinition of verification protocols

- commercial capture of cascade verification standards

The license itself is a tool of collective defense.

No exclusive licenses will ever be granted. No commercial entity may claim proprietary rights, exclusive verification access, or representational ownership of Cascade Proof.

Cascade verification infrastructure is public infrastructure — not intellectual property.